A professional SEO audit reveals quick wins that drive results and outlines a path to SEO success.

Yet, so many SEOs struggle to audit a site to deliver immediate results or instill long-term confidence in the SEO program.

To help overcome the issue, I created a simple, easy-to-follow SEO audit template that lists every check you should perform to drive search performance.

Open the SEO audit template and follow along for this thorough checklist designed to drive better search visibility and ROI.

The advice below comes from more than a decade of experience helping enterprise-level SEOs drive results. No fluff or theory-based information included.

You'll find that this checklist provides the full scope of an SEO site audit, not just bits and pieces. For a beginner's guide to technical SEO issues, I recommend: 15 Common Technical SEO Issues and How to Fix Them.

Table of Contents:

- Robots.txt file

- XML Sitemap

- HTTPS/SSL Encryption

- Mobile Friendliness

- Page Speed

- SEO Tags in the Head Section

- Crawling

- Rendering

- Indexing

- On-Page Optimization

- Relevance

- Structured Data

- Faceted Navigation

- Accessibility

- Authority

- Off-Page SEO

How to Execute This SEO Audit

Although not strictly required, an SEO site crawler will help you conduct a comprehensive site audit.

As you run your crawl through the audit process (and follow along with our checklist!), you’ll gather a list of technical issues. Give each step a grade of pass, fail, or needs improvement.

- Fail issues are a big deal. If fixed, they can result in huge wins!

- Pass means the site meets the standards outlined by Google. It’s not a factor holding back the SEO performance.

- Needs Improvement applies where the issue is not necessarily hurting performance, but the competition is outpacing you.

Doing so will help you create a prioritized list of issues to tackle in order to improve your organic traffic — starting with the fail issues, followed by the most promising needs improvement issues based on your time and resources.

There are plenty of site audit and crawler tools on the market, and in this post I’ll be demonstrating with one of the best: seoClarity’s built-in site crawler technology.

It’s been battle-tested on a site with more than 48 million pages, and gives you full access to find SEO issues that plague your site with no artificial limits. That’s no limits on crawl depth, speed, pages crawled…

Let's get started!

The Ultimate SEO Site Audit Checklist

The following SEO site audit checklist has been split into two main focus areas:

- Technical SEO

- Content

Focus Area: Technical SEO

Technical SEO is crucial because it ensures that a website meets the technical requirements of modern search engines.

Starting your SEO audit with technical aspects is essential as it lays the foundational groundwork, allowing your content and on-page strategies to perform effectively.

By addressing technical deficiencies first, you ensure that search engines can efficiently index and rank your site, maximizing visibility and traffic.

#1. Robots.txt file

Before crawling a site, a search engine bot views the robots.txt file which gives directions on how to crawl (or not crawl) the website.

It contains instructions about folders or pages to omit as well as other critical instructions. As a best practice, it should also link to the XML sitemap so the bot can find a list of the most important URLs.

AI Search Note: If bots can’t crawl your pages, AI systems can’t learn from or reference your content. Always double-check your crawl permissions.

How to Audit

You can view the file manually by going to mydomain.com/robots.txt (replace “mydomain” with your site’s URL, of course). Look for commands that might be limiting or even preventing site crawling.

If you have access to an SEO tool to crawl the site, let it loose on the site, and be sure to set the user agent to follow instructions given to Googlebot.

This way, if you’re blocking Googlebot via the robots.txt file, the data from the crawl will reflect that with a “403 Forbidden” status code for the URL instead of a “200 OK” status code and the information for the URL.

Google Search Console historically reports URLs where Googlebot is being blocked. seoClarity users can find this in the advanced settings in a Clarity Audits crawl.
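If you'd like to script the manual check, here is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content and the example.com URLs are made up for illustration; in a real audit you would load your live file instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used here only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search

User-agent: Googlebot
Disallow: /staging/

Sitemap: https://www.example.com/sitemap.xml
"""


def audit_robots(robots_txt, urls, user_agent="Googlebot"):
    """Return {url: True/False}: may this user agent crawl each URL?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


def listed_sitemaps(robots_txt):
    """Return the Sitemap: URLs declared in the file (Python 3.8+)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []


results = audit_robots(ROBOTS_TXT, [
    "https://www.example.com/products/hat",
    "https://www.example.com/staging/new-home",
    "https://www.example.com/cart/",
])
for url, allowed in results.items():
    print("allowed" if allowed else "BLOCKED", url)
```

Note that `/cart/` comes back as allowed for Googlebot in this sample: a bot with its own named group follows that group rather than the `*` rules, which is an easy thing to miss when reading the file by eye.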

Robots.txt Recommendations

- A robots.txt file must be placed in a website’s top-level directory. Note: the file is case sensitive and must be named “robots.txt” (not Robots.txt, robots.TXT, or otherwise).

- Some user agents (robots) may choose to ignore your robots.txt file. This is especially common with more nefarious crawlers like malware robots or email address scrapers.

- Don’t use robots.txt files to hide private information. The /robots.txt file is publicly available: just add /robots.txt to the end of any root domain to see that website’s directives (if that site has a robots.txt file!). This means that anyone can see what pages you do or don’t want to be crawled.

- Each subdomain on a root domain uses separate robots.txt files. This means that both blog.example.com and example.com should have their own robots.txt files (at blog.example.com/robots.txt and example.com/robots.txt).

- It’s generally best practice to indicate the location of any sitemaps associated with this domain at the bottom of the robots.txt file. Here’s an example: https://www.seoclarity.net/robots.txt.

Common Mistakes with Robots.txt

Mistakes with Robots.txt files can result in significant technical SEO challenges. As such, it's important to be aware of the most common ones so that you can avoid them.

Some of the most prevalent Robots.txt issues include:

- Using default CMS robots.txt Files

- Using Robots.txt to Noindex pages

- Using the wrong case

- Blocking essential files

- Using absolute URLs

- Blocking removed pages

- Moving staging or development site’s Robots.txt to the live site

The list goes on.

For a more comprehensive and in-depth list, check out our blog on common Robots.txt mistakes and how to avoid them.

#2. XML Sitemap

A sitemap contains the list of all pages on the site. Search engines use it to bypass the site’s structure and find the URLs directly.

AI Search Note: A clean sitemap helps AI crawlers find your most authoritative pages faster, improving visibility in AI-generated summaries. Crawlers also use the sitemap for data on page updates or revisions they might need.

Recommended Reading: How to Create an XML Sitemap

How to Audit an XML Sitemap

Your sitemap should reside in the root folder on the server. The most common place to find it directly is at mydomain.com/sitemap.xml or linked to/from the robots.txt file. Otherwise, the content management system (CMS) may show the URL if there is one.

Crawl the sitemap URLs to make sure they are free of errors, redirects, and non-canonical URLs (e.g. URLs that have canonical tags pointing to another URL). Submit your XML sitemaps in Google Search Console and investigate any URLs that are not indexed. They’ll likely have an error, redirect, or non-canonical URL!
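As a rough sketch of that check, the snippet below reads the `<loc>` entries from a sitemap and compares them with crawl results. The `crawl_data` mapping (URL to status code and canonical URL) is an assumption standing in for whatever your crawler exports, and the sample URLs are invented.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content for illustration.
SAMPLE_SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/old</loc></url>
  <url><loc>https://www.example.com/gone</loc></url>
  <url><loc>https://www.example.com/hat?ref=nav</loc></url>
</urlset>
"""


def sitemap_urls(xml_text):
    """Return every <loc> value listed in a sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def audit_sitemap(xml_text, crawl_data):
    """crawl_data maps url -> (status_code, canonical_url or None)."""
    issues = {}
    for url in sitemap_urls(xml_text):
        if url not in crawl_data:
            issues[url] = "not crawled"
            continue
        status, canonical = crawl_data[url]
        if 300 <= status < 400:
            issues[url] = f"redirect ({status})"
        elif status != 200:
            issues[url] = f"error ({status})"
        elif canonical and canonical != url:
            issues[url] = "non-canonical"
    return issues


# Hypothetical crawl export.
crawl_data = {
    "https://www.example.com/": (200, "https://www.example.com/"),
    "https://www.example.com/old": (301, None),
    "https://www.example.com/gone": (404, None),
    "https://www.example.com/hat?ref=nav": (200, "https://www.example.com/hat"),
}
issues = audit_sitemap(SAMPLE_SITEMAP, crawl_data)
```

Anything that ends up in `issues` is a candidate for removal from the sitemap or a fix on the page itself.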

XML Sitemap Recommendations

- Automate your CMS to update XML sitemaps with each new content piece, or use tools like seoClarity to generate sitemaps by crawling your site.

- Ensure your XML sitemap adheres to the sitemap protocol required by search engines for correct processing.

- Submit your sitemap to Google Search Console to validate the code and rectify any errors. Exclude redirect URLs, blocked URLs, and URLs with canonical tags pointing elsewhere.

- Enhance your sitemap with additional details such as images, hreflang tags, and videos.

- Limit sitemaps to 50,000 URLs each. If you exceed this, create a sitemap index linking to multiple sitemaps.

- Organize sitemaps by page type (e.g., product pages, blog pages, category pages) and submit each to Google Search Console for monitoring and error checking through regular crawls.
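To illustrate the 50,000-URL limit and the sitemap index, here is a small sketch that splits a URL list into several sitemap files and builds an index pointing to them. The file naming scheme (`sitemap-1.xml`, and so on) and the base URL are assumptions; your CMS or platform may use a different layout.

```python
import xml.etree.ElementTree as ET

NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit mentioned above


def build_sitemaps(urls, base_url, max_urls=MAX_URLS):
    """Return (index_xml, {filename: sitemap_xml}) for a list of URLs."""
    files = {}
    index = ET.Element("sitemapindex", xmlns=NS)
    for start in range(0, len(urls), max_urls):
        name = f"sitemap-{start // max_urls + 1}.xml"  # hypothetical naming
        urlset = ET.Element("urlset", xmlns=NS)
        for url in urls[start:start + max_urls]:
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
        files[name] = ET.tostring(urlset, encoding="unicode")
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = f"{base_url}/{name}"
    return ET.tostring(index, encoding="unicode"), files


# Small demonstration: 5 URLs with a limit of 2 per file gives 3 files.
demo_urls = [f"https://www.example.com/p/{n}" for n in range(5)]
index_xml, files = build_sitemaps(demo_urls, "https://www.example.com", max_urls=2)
```

The same function could be called once per page type (products, blog, categories) to produce the separate sitemaps recommended above.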

Common Mistakes with XML Sitemaps

- Containing URLs that redirect, return an error (404), canonicalize to a different URL, or are blocked. Make sure all sitemap URLs return a 200 OK status.

- Letting the XML sitemap become detached from the CMS during site updates, so new pages stop being included and old pages are not removed.

#3. HTTPS/SSL Encryption

SSL encryption establishes a secure connection between the browser and the server. Google Chrome marks secure sites (those having an active SSL certificate) with a padlock image in the address bar.

Recommended Reading: HTTP vs HTTPS: What’s The Difference and Why Should You Care?

It also warns users when they try to access an insecure site.

Most importantly, though, Google also uses the HTTPS encryption as a ranking signal.

AI Search Note: Security signals still matter. AI-generated results often prioritize sources with clear trust indicators.

How to Audit HTTPS

Visit the site in Chrome and look at the address bar. Look for the padlock icon to determine whether or not your site uses an SSL connection. You can also test your SSL encryption at ssllabs.com/ssltest/ to ensure it is valid.

HTTPS Recommendations

- Transitioning to HTTPS, once considered optional, is now essential for SEO as Google has made it a ranking signal. Keeping your website on a non-secure server puts you at a competitive disadvantage.

- Automatically convert any non-HTTPS visits to HTTPS through 301 redirects (e.g., redirecting http://www.yoursite.com to https://www.yoursite.com). Use crawl tools like webconfs to verify the status code of your pages.
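Here is one way the redirect check could be scripted, as a sketch only. `grade_https_redirect` grades a single response using the pass / fail / needs improvement labels from this checklist, and `fetch_first_hop` is a helper (not called here) that reads the first response without following redirects. It assumes the server sends an absolute URL in the `Location` header.

```python
import http.client
from urllib.parse import urlsplit


def grade_https_redirect(http_url, status, location):
    """Grade the first response returned for a plain-HTTP URL."""
    source = urlsplit(http_url)
    target = urlsplit(location or "")
    if status != 301:
        return f"fail: expected a 301 redirect, got {status}"
    if target.scheme != "https":
        return "fail: redirect target is not HTTPS"
    if (target.netloc, target.path or "/") != (source.netloc, source.path or "/"):
        return "needs improvement: redirects to a different URL than the one requested"
    return "pass"


def fetch_first_hop(http_url, timeout=10):
    """Return (status, Location header) for http_url without following redirects."""
    parts = urlsplit(http_url)
    conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        conn.request("HEAD", parts.path or "/")
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


# Example with a response you have already captured:
grade = grade_https_redirect(
    "http://www.yoursite.com", 301, "https://www.yoursite.com/"
)
```

In practice you would feed `grade_https_redirect` the output of `fetch_first_hop` for a sample of key templates.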

Common Mistakes with HTTPS

- Failing to move all assets (images, CSS, JavaScript) to HTTPS, resulting in mixed content issues.

- Neglecting to update HTTPS URLs in canonical tags. Ensure both alternate and canonical tags on separate mobile websites use HTTPS URLs.

- Not including HTTPS URLs in your sitemap.xml, and not correcting internal links on HTTPS sites that lead to HTTP pages.

seoClarity users can use our built-in crawler to run a crawl and leverage the Parent Page report to find all instances of old internally linked HTTP URLs at scale so they can be updated to the new HTTPS version.
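For a single page, a quick scan for leftover `http://` references might look like the sketch below. It uses Python's built-in `html.parser`; the list of asset tags is a simplification and the sample markup is invented.

```python
from html.parser import HTMLParser


class InsecureReferenceFinder(HTMLParser):
    """Collect http:// assets (mixed content) and http:// links or canonicals."""

    # Simplified list of tags whose src is loaded as part of the page.
    ASSET_ATTRS = {"img": "src", "script": "src", "iframe": "src", "source": "src"}

    def __init__(self):
        super().__init__()
        self.mixed_content = []    # assets loaded over HTTP
        self.http_references = []  # links, canonicals, alternates over HTTP

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in self.ASSET_ATTRS:
            url, bucket = attrs.get(self.ASSET_ATTRS[tag]), self.mixed_content
        elif tag == "link" and "stylesheet" in (attrs.get("rel") or "").lower():
            url, bucket = attrs.get("href"), self.mixed_content
        elif tag in ("a", "link"):
            url, bucket = attrs.get("href"), self.http_references
        else:
            return
        if url and url.lower().startswith("http://"):
            bucket.append((tag, url))


# Hypothetical page source.
PAGE = """
<html><head>
<link rel="canonical" href="http://www.example.com/hats">
<link rel="stylesheet" href="https://www.example.com/site.css">
<script src="http://cdn.example.com/app.js"></script>
</head><body>
<img src="http://www.example.com/hat.png" alt="Hat">
<a href="http://www.example.com/sale">Sale</a>
<a href="https://www.example.com/new">New</a>
</body></html>
"""

finder = InsecureReferenceFinder()
finder.feed(PAGE)
```

`finder.mixed_content` covers the first mistake in the list above, while `finder.http_references` covers HTTP canonical tags and internal links.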

#4. Mobile Friendliness

More than half of web searches come from mobile devices which is why it's crucial to ensure that all basic mobile-friendly aspects are in place at this stage of the audit.

AI Search Note: AI Search mirrors user experience patterns. If mobile UX is poor, your pages are less likely to be favored in AI rankings.

How to Audit Mobile Friendliness

Select your most important templates such as a category page, product page, and blog post. Test them with the Google Mobile-Friendly Test. Prioritize issues reported for the development team to fix.

Mobile Friendly Recommendations

To create a strong mobile SEO strategy, start with these steps:

- Adopt a responsive web design on a single URL (although Google will still serve separate mobile sites to searchers).

- Ensure your pages load in under two seconds, have readable text, and use quick-loading images.

Google also offers further resources on how to optimize for mobile.

Note: Google announced mobile first indexing of the entire web on their Webmaster Blog in March 2020.

Common Mistakes with Mobile Friendliness

The most common mobile SEO issues typically come from limiting the mobile experience compared to desktop. Give mobile users a full experience, not just the parts of the desktop site that work OK on mobile.

Other mobile design aspects that come up are:

- Tap targets being too close together

- Content presented wider than the viewable screen (viewport not configured).

- For a separate mobile site:

  - Missing annotations (aka “switchboard tags”: rel="alternate" and rel="canonical" to connect the URLs as equivalents) between the two sites

  - A non 1-to-1 ratio between the mobile page and the corresponding desktop page

  - Faulty redirects: make sure that desktop pages don’t inadvertently redirect to a single, unrelated mobile page.
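If you run a separate mobile site, the switchboard tags can be spot-checked with a few lines of code. This is a sketch under the assumption that you already have the HTML of a desktop page and its mobile equivalent; the URLs and markup are examples only.

```python
from html.parser import HTMLParser


class LinkTagCollector(HTMLParser):
    """Collect the attributes of every <link> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "link":
            self.links.append(dict(attrs))


def link_targets(html, rel):
    """Return the href of each <link> whose rel matches."""
    collector = LinkTagCollector()
    collector.feed(html)
    return [
        link.get("href")
        for link in collector.links
        if (link.get("rel") or "").lower() == rel
    ]


def check_switchboard(desktop_url, desktop_html, mobile_url, mobile_html):
    """Return a list of missing annotations between a desktop/mobile pair."""
    problems = []
    if mobile_url not in link_targets(desktop_html, "alternate"):
        problems.append("desktop page is missing rel=alternate to the mobile URL")
    if desktop_url not in link_targets(mobile_html, "canonical"):
        problems.append("mobile page is missing rel=canonical to the desktop URL")
    return problems


# Hypothetical page pair.
desktop_html = (
    '<head><link rel="alternate" media="only screen and (max-width: 640px)" '
    'href="https://m.example.com/hats"></head>'
)
mobile_html = '<head><link rel="canonical" href="https://www.example.com/hats"></head>'
problems = check_switchboard(
    "https://www.example.com/hats", desktop_html,
    "https://m.example.com/hats", mobile_html,
)
```

An empty `problems` list means the pair is connected in both directions.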

#5. Page Speed

Page speed is one of the most critical factors that affect a site’s visibility in Google — and it's only grown more important with Google’s announcement of Core Web Vitals update and page experience.

In the update, the Core Web Vital metrics (which all relate directly to page speed) combine with other experience metrics to create the page experience signal.

Page speed and SEO are directly linked because page speed affects bounce rate and conversions. As a result, optimizing page speed and decreasing load time often delivers instant results for a company’s organic presence and improves the search experience.

AI Search Note: Fast-loading sites provide better user signals and engagement metrics, which help AI search engines rank your pages as more reliable.

How to Audit Page Speed

Use Google’s PageSpeed Insights tool to evaluate key templates on the site. The same data is also available in the Google Lighthouse reports found at web.dev.

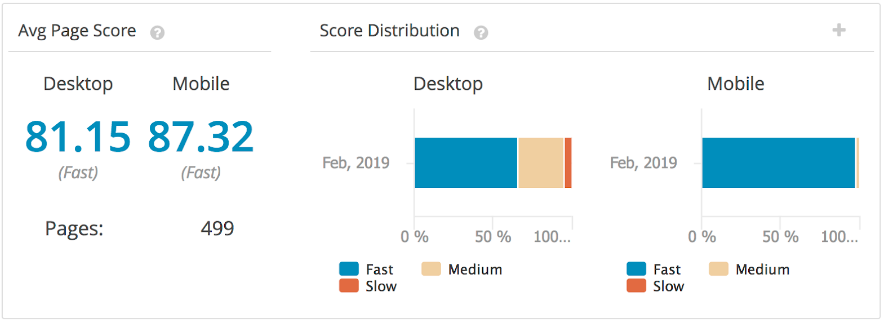

seoClarity’s Page Speed Analysis gives a handy point of view by combining all these issues across the site to prioritize the impact. It also allows you to keep track of page speed on a weekly or monthly basis, making it easier to monitor and evaluate your progress.

Page Speed Recommendations

- Try to have each web page load in less than two seconds (the typical threshold of how long the average web surfer will wait).

- Resolve the common page speed mistakes listed below.

Common Page Speed Mistakes

- Bloated images and code

- Too many render-blocking resources

- Missing cache policies, which force the browser to re-download an image on every visit instead of caching it

- Images and CSS that can be delayed until needed but instead load as a render-blocking asset. For example, you don’t need to load that huge image in a menu flyout that only appears when the user hovers over it.
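A quick way to surface candidates for the last two mistakes is to scan a template's HTML for scripts in the `<head>` that load without `async` or `defer`, and for images that lack `loading="lazy"`. The sketch below is a simplification (it ignores stylesheets and inline scripts), and the sample markup is invented.

```python
from html.parser import HTMLParser


class RenderBlockingFinder(HTMLParser):
    """Flag blocking <head> scripts and images that are not lazy-loaded."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking_scripts = []
        self.eager_images = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head and attrs.get("src"):
            # Valueless attributes such as `defer` appear as keys with value None.
            deferred = "async" in attrs or "defer" in attrs or attrs.get("type") == "module"
            if not deferred:
                self.blocking_scripts.append(attrs["src"])
        elif tag == "img" and attrs.get("loading") != "lazy":
            self.eager_images.append(attrs.get("src"))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


# Hypothetical template source.
TEMPLATE = """
<html><head>
<script src="/js/analytics.js"></script>
<script src="/js/app.js" defer></script>
</head><body>
<img src="/img/hero.jpg" alt="Hero">
<img src="/img/menu-flyout.jpg" alt="Menu" loading="lazy">
<script src="/js/footer.js"></script>
</body></html>
"""

finder = RenderBlockingFinder()
finder.feed(TEMPLATE)
```

Treat `eager_images` as a list to review rather than a list of errors: images visible at the top of the page generally should load right away, while something like a menu flyout image is a good lazy-loading candidate.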

#6. SEO Tags in the Head Section

A few important <head> section tags help Google index the site properly. These exalted tags include:

- Meta title

- Meta description

- Canonical

- Hreflang for international sites.

Without these tags and clear SEO optimized meta descriptions, Google must assume where to pull content from (title and description) to create the listing, which content among duplicates should be shown to users (canonical tag), and who to show it to (hreflang).

AI Search Note: These are now “data cues” for AI engines. Well-structured metadata helps them understand your topic hierarchy.

How to Audit SEO Tags

Install the Chrome plugin Spark, or manually inspect the code on key landing pages via Inspect in Chrome to spot these tags. Assess whether key SEO tags are present in the <head> section. The Spark plugin will display the data if it’s properly coded.

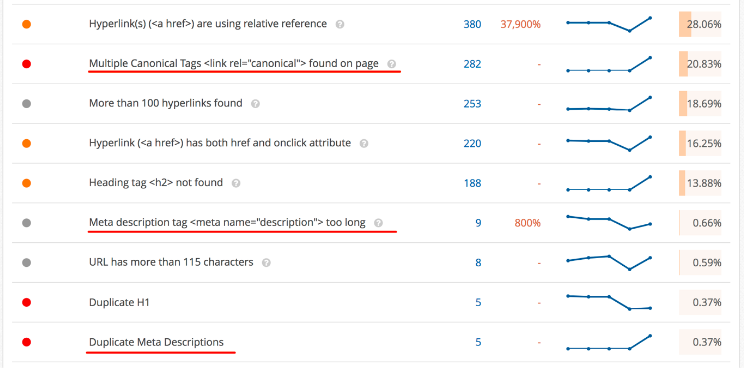

Also, a seoClarity crawl will collect these issues. The report shows any potential problems with these tags (e.g. duplicated, overly long, or missing values).

SEO Tags Recommendations

Utilize and configure each tag properly for every page on the site. At this stage, the tags must be present and valid on the site.
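As a sketch of what "present and valid" can mean in practice, the snippet below pulls the title, meta description, canonical, and hreflang tags out of a page and notes any that sit outside the `<head>`. It relies only on Python's built-in `html.parser`, and the sample page is made up.

```python
from html.parser import HTMLParser


class HeadTagAudit(HTMLParser):
    """Record key SEO tags and whether they appear inside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.in_title = False
        self.found = {"title": None, "description": None, "canonical": None, "hreflang": []}
        self.outside_head = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower()
        if tag == "head":
            self.in_head = True
        elif tag == "title" and self.in_head:
            self.in_title = True
            self.found["title"] = ""
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self._record("description", attrs.get("content"))
        elif tag == "link" and rel == "canonical":
            self._record("canonical", attrs.get("href"))
        elif tag == "link" and rel == "alternate" and attrs.get("hreflang"):
            self._record("hreflang", (attrs["hreflang"], attrs.get("href")))

    def _record(self, kind, value):
        if not self.in_head:
            self.outside_head.append(kind)
        elif kind == "hreflang":
            self.found["hreflang"].append(value)
        else:
            self.found[kind] = value

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False
        elif tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.found["title"] += data


def audit_head(html):
    """Return (found tags, missing tag names, tag names placed outside <head>)."""
    audit = HeadTagAudit()
    audit.feed(html)
    missing = [k for k in ("title", "description", "canonical") if not audit.found[k]]
    return audit.found, missing, audit.outside_head


# Hypothetical page: the canonical tag was placed in the body by mistake.
PAGE = """
<html><head>
<title>Chicago Bulls Hats | Example Store</title>
<meta name="description" content="Shop Chicago Bulls hats in every color.">
<link rel="alternate" hreflang="en-gb" href="https://www.example.com/uk/hats">
</head><body>
<link rel="canonical" href="https://www.example.com/hats">
<h1>Chicago Bulls Hats</h1>
</body></html>
"""
found, missing, outside_head = audit_head(PAGE)
```

Running the same function on the raw page source and on the rendered DOM is one way to compare the two versions of a page.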

Common Mistakes with SEO Tags

- Duplication Issues:

  - Failing to use the correct canonical or noindex tags to signal intentional duplication. For instance, multi-page articles may share the same title tag without appropriate indicators directing Google to the preferred page (like the "show all" page version).

  - Omitting canonical tags on each page can lead to duplication problems, especially when tracking parameters modify URLs.

- Incorrect Placement:

  - Placing SEO tags outside the <head> section effectively renders them unrecognized, as if they are absent.

- Inconsistencies in SEO Tags:

  - Differences between the SEO tag values in the page's HTML (visible via View Page Source) and the DOM (accessible through the Inspect Element in browsers, showing HTML post-JavaScript execution). Consistency is crucial; discrepancies, such as unique title tags only after JavaScript runs, can lead Google to index the unoptimized HTML version instead of the enhanced post-JS version.

#7. Crawling

For search engines to index and rank a site, they need to crawl its pages first. Google, for example, sends a bot to crawl a site by following internal links.

Errors, broken pages, overuse of JavaScript, or a complex site architecture might derail the bot from accessing critical content or use up the available crawl budget trying to figure out your site.

AI Search Note: If traditional crawlers can’t access it, AI bots can’t either. Ensure your site’s structure is transparent and link paths are consistent.

How to Audit Crawlability

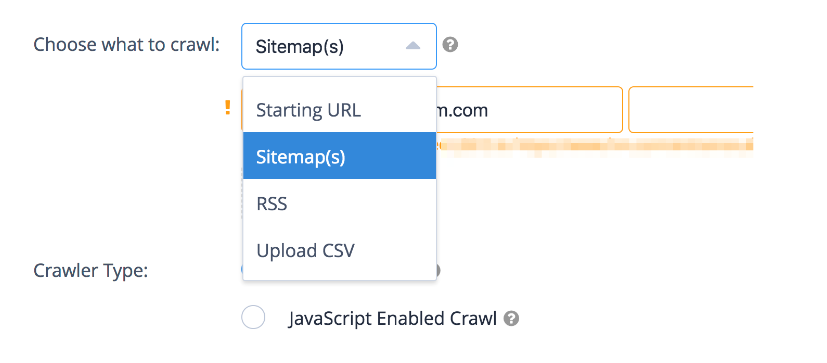

Use an SEO crawler to imitate the path taken by the search engine’s bot — an advanced crawler will replicate Googlebot and see your website as the search engine sees it.

Look for reports of crawl issues caused by unnecessary URLs, broken links, redirect chains, or incorrect canonical configurations. The right crawler will be fully customizable and allow you to set the crawl depth, speed, and frequency.

We’re proud to say that our built-in crawler allows for all of that, all with no limitations!

Google Search Console also surfaces crawling errors it has found.

Crawlability Recommendations

- Remove any hindrances in crawling the site.

- Limit the use of parameters so bots don’t have to work out whether or how they affect the content.

- Utilize links thoughtfully in the content that contextually ties the site together.

- Design the menus and hierarchy in a logical way so the relationships can be seen from this perspective.

Common Crawlability Mistakes

- Broken links, internal redirects, and "spider traps" (such as infinite calendar links) hinder effective crawling. Pages that are dead-ends to new content should carry a "nofollow" tag to guide Google towards more useful paths.

- Lack of links. Issues like mobile sites missing header menus result in orphan pages. Additionally, category pages with infinite scroll may fail to get crawled or indexed if links are not visible above the fold, since Google does not scroll. Implementing extra code or directly adding links into the HTML can address this.
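To make broken links and orphan pages concrete, here is a toy sketch that walks an internal link graph from the homepage the way a crawler would. The `links`, `status`, and `known_urls` inputs are assumptions: in practice they would come from a crawl export and from your sitemap or CMS.

```python
from collections import deque


def crawl_report(start, links, status, known_urls):
    """Breadth-first walk of internal links starting at `start`.

    links:      {page: [pages it links to]}
    status:     {page: HTTP status code}; unknown pages are treated as 404
    known_urls: pages that should exist (e.g. from the sitemap)
    Returns (click depth per reachable page, broken links, orphan pages).
    """
    depth = {start: 0}
    broken = []
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if status.get(target, 404) >= 400:
                broken.append((page, target))
            elif target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    orphans = sorted(set(known_urls) - set(depth))
    return depth, broken, orphans


# Hypothetical site.
links = {
    "/": ["/hats", "/sale"],
    "/hats": ["/hats/white", "/old-hat"],
    "/hats/white": ["/"],
}
status = {"/": 200, "/hats": 200, "/sale": 200, "/hats/white": 200,
          "/old-hat": 404, "/clearance": 200}
known_urls = ["/", "/hats", "/sale", "/hats/white", "/clearance"]

depth, broken, orphans = crawl_report("/", links, status, known_urls)
```

Here `/clearance` exists but nothing links to it, so it shows up as an orphan, and the link from `/hats` to `/old-hat` is reported as broken.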

Recommended Reading: SEO Crawlability Issues and How to Find Them

#8. Rendering

Rendering involves how Google views and displays your page's content and code. Adhere to progressive enhancement principles to ensure that the content and core functionality are accessible, even from a text-only browser.

As CSS and JS are executed, ensure all content remains available for Google's rendering process. Previously, Google did not process JavaScript, but now that it does, ensure Google can access all necessary files without restrictions to fully render the page like a regular user.

AI Search Note: AI needs fully rendered, readable content. Use server-side rendering or dynamic rendering for heavy JS pages.

Recommended Reading: AngularJS & SEO: How to Optimize AngularJS for Crawling and Indexing

How to Audit Rendering

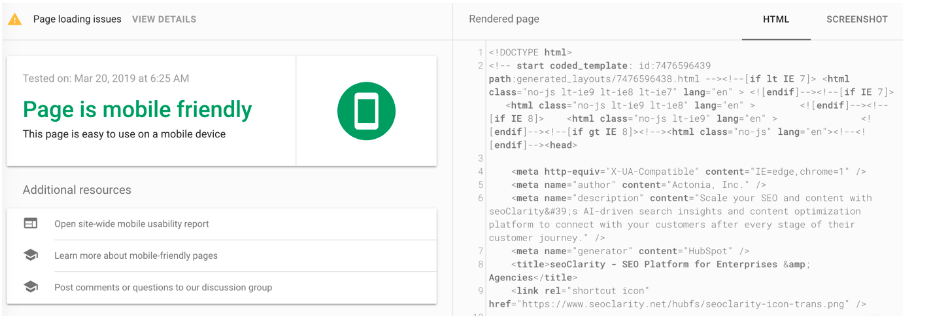

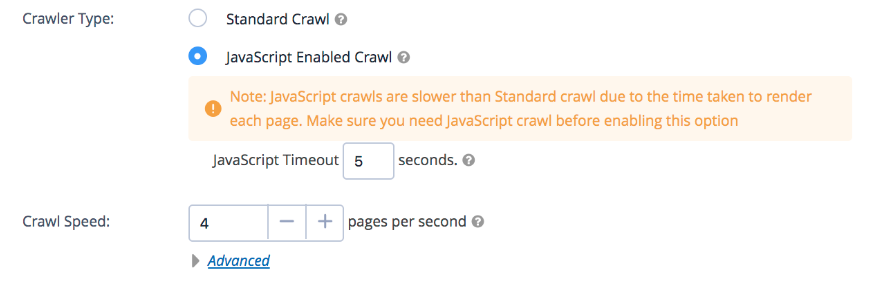

Run a site crawl with JavaScript enabled to render pages exactly as they would appear in the browser. In doing so, you’ll evaluate issues Google might encounter when rendering your pages with their JavaScript crawling capabilities.

Another way to check how well your page renders to Google is to view the cached version of a few important page templates. You can view this after searching for the page and clicking the option next to the URL to view the cached version.

Google tends to store only the HTML of websites in the cache, as opposed to the JavaScript-executed version. If properly executed, the page should still render all important content and SEO elements in the HTML state.

Additionally, the Mobile Friendly Testing tool is known to behave as Google’s headless browser, executing the page’s JavaScript and rendering the page. This is a great SEO tool to test if anything is stopping Google from accessing the content.
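A simple complementary check is to confirm that your key content is present in the raw HTML, before any JavaScript runs. The sketch below strips scripts and styles from the page source and reports which phrases are missing; the sample markup and phrases are invented for illustration.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, ignoring <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)


def missing_from_raw_html(raw_html, phrases):
    """Return the phrases that do not appear in the page's raw HTML text."""
    extractor = TextExtractor()
    extractor.feed(raw_html)
    text = " ".join(" ".join(extractor.chunks).split()).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]


# Hypothetical page source: the shipping message is only injected by JavaScript.
RAW_HTML = """
<html><body>
<h1>Chicago Bulls Hats</h1>
<div id="promo"></div>
<script>document.getElementById("promo").textContent = "Free shipping over $50";</script>
</body></html>
"""

missing = missing_from_raw_html(RAW_HTML, ["Chicago Bulls Hats", "Free shipping over $50"])
```

Anything returned in `missing` depends on JavaScript to appear, so it is worth verifying in a rendered crawl.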

Rendering Recommendations

- Utilize progressive enhancement so that SEO elements and content render in HTML. Google has a helpful resource on rendering on the web.

- Other methods like initial static rendering and pre-rendering can get the job done too. (Although these should be your second option after progressive enhancement.)

- Learning the core principles of JavaScript can shed more light on the topic. Also consider familiarizing yourself with the dev team's priorities so you can work more effectively with them.

Common Mistakes with Rendering

- Putting content behind a user interaction. Google will render the DOM with their headless browser but won't view a JavaScript click that reveals more content. A quick way to know if Google can find your content is to search it directly in Google and see if that exact text appears on the search results page, highlighted in bold to show it matches your query.

#9. Indexing

The index is where Google stores information about pages it has crawled and where it selects the content to rank for a particular search query. Google is rapidly expanding, culling, and updating its index.

AI Search Note: AI search engines increasingly use on-demand indexing, accessing pages as needed to deliver relevant results. Ensuring your content is easily accessible and well-structured helps both traditional and AI crawlers index and interpret it efficiently.

Recommended Reading: 3 Common Search Engine Indexing Problems

How to Audit Indexation

To audit indexation, start by searching "site:domain.com" on Google to review indexed pages, which helps identify any duplicates or over-indexed content.

By navigating to the last search results page, you can access a message indicating removed duplicates, revealing consolidated pages. This step confirms the absence of crawl issues, ensuring that Google has discovered and indexed your content.

For a comprehensive view, Google Search Console offers insights on indexation levels, including a section for Excluded assets, indicating pages deemed unworthy of indexing.

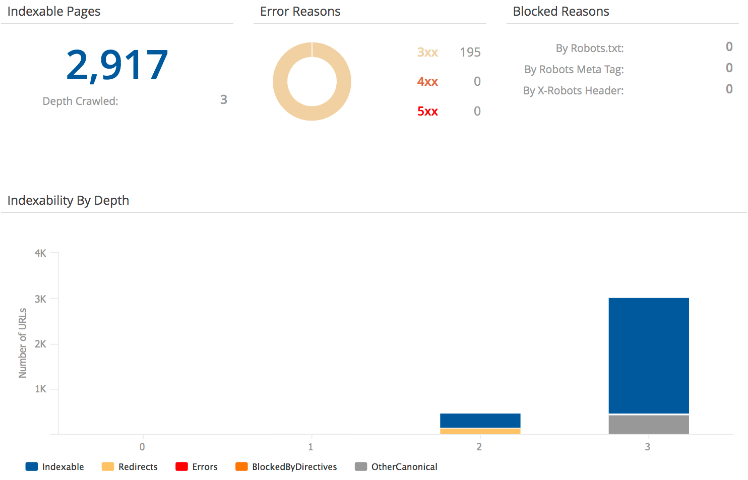

Additionally, seoClarity's Site Health report provides detailed indexability data such as:

- The total number of indexable pages on the domain,

- The number of errors as well as the reasons for them, and

- A complete list of indexable pages to help you find, evaluate, and correct potentially problematic assets.

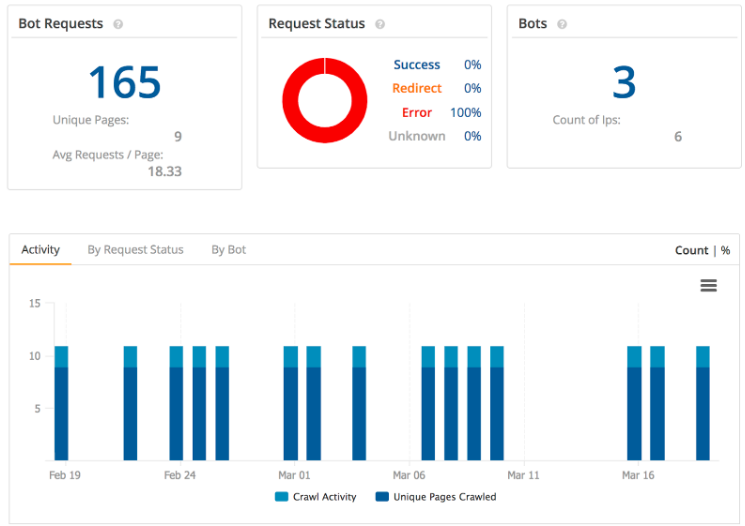

Finally, you can review bot activity on the server to see how Googlebot is crawling your site which may explain how your site is being indexed. In seoClarity, you can filter the results by response codes to identify page errors instantly.

You may find that some pages that are not getting crawled simply have no links to them. Improve internal links continuously to ensure the bot can reach pages deep in the site’s architecture as well. With non-indexed pages, check if the content is too thin to warrant indexation.

Indexation Recommendations

- The number of pages indexed after searching “site:domain.com” should be close to the number of pages on your site. If you’re a 100-page site and have a million pages indexed then there’s something off. The same is true if you're a million-page site with 100 pages indexed.

- Analytics is helpful here, too. Ideally, the number of pages indexed should closely align with the number of landing pages recorded in analytics over a year, reflecting a well-optimized website.

Common Mistakes with Indexation

Indexation issues often stem from problems with crawling and rendering. Under-indexed sites may require updates to meta tags or content to boost relevancy and convince Google of their value.

A frequent error is the accidental use of the robots=noindex tag, sometimes added to key landing pages during development updates, leading Google to de-index these pages. SEO teams must act swiftly to remove this tag and request re-indexing.

seoClarity users benefit from Page Clarity, which monitors URLs daily and alerts via email if the noindex tag appears, aiming to catch it before Googlebot does.
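If you want to spot-check a page yourself, a noindex directive can arrive either in a robots meta tag or in an `X-Robots-Tag` response header. The sketch below checks both; it is simplified and does not handle bot-specific header values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]


def is_noindexed(html, headers=None):
    """True if the page carries a noindex (or none) directive."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    # Header names are case-insensitive, so normalize before the lookup.
    normalized = {k.lower(): v for k, v in (headers or {}).items()}
    header_directives = [
        d.strip().lower() for d in normalized.get("x-robots-tag", "").split(",")
    ]
    blocking = {"noindex", "none"}
    return bool(blocking & set(finder.directives + header_directives))


flagged = is_noindexed('<head><meta name="robots" content="noindex, follow"></head>')
```

Running this against your key landing pages after each release is a lightweight way to catch the mistake early.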

Additionally, faceted navigation (discussed in step 13) can significantly increase the number of pages indexed if not managed properly, potentially leading to duplicate URLs and over-indexation that weakens the visibility of crucial pages.

Focus Area: Content

#10. On-Page Optimization

This is where the SEO analysis switches hats slightly from technical-minded to content-minded. Your site is in the game, now let’s think about how it’s being played.

Specifically, how well is it optimized for relevant keywords?

Key areas to a successful content audit are how the meta tags, header tags, and body copy are being used to create a great search experience for the target keyword topics.

Before evaluating on-page SEO, conduct thorough keyword research so that you know what phrases various content assets target.

AI Search Note: AI Search looks for contextual depth, not keyword stuffing. Use semantic clusters that reflect real user intent. AI search works more like a knowledge graph of related concepts and is less focused on individual pages.

How to Audit On-Page Elements

Auditing on-page elements can be done in a few ways:

- Evaluate the key landing pages for on-page issues manually. If you've built your site on WordPress, some plugins act as an on-page SEO checker, making it easy for newer SEOs to see what to target. However, checking whether a keyword is present in H1 or H2 headings or the meta description across hundreds or even thousands of pages soon proves too cumbersome. That’s why seoClarity offers a better option to do this at scale.

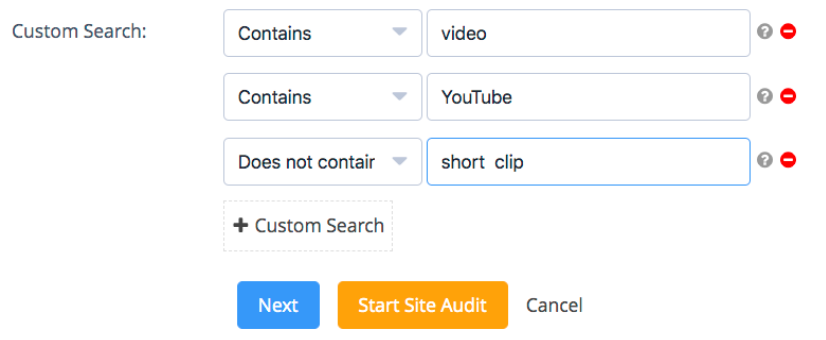

- Crawl your content to find pages on the site featuring specific words or phrases. By doing so, you'll identify common problems on pages (wrong keywords or phrases featured in headings, for example). Or audit your content at a granular level.

But you can use this capability to do so much more.

Let’s say you want to find all pages with video on them to audit their on-page optimization. Simply look for instances of words such as “video,” “YouTube,” or “short clip” to access every page featuring a video.

Recommended Reading: Finding Additional Content: Narrow in on Specific Site Features

On-Page Optimization Recommendations

- Title Tags - approximately 70 characters, use target keyword. Learn how to run a title tag test.

- Meta Descriptions - approximately 150 characters. A good meta description should convince the searcher to click through to the site and assure them you have their answer.

- Headers - The “H1” tag should be a shortened version of the Title Tag, typically 2-3 words. H2 tags should be used if they follow the format of the page. H3s and beyond are less important but should be relevant and used in order.

- Body Content - Created to improve the search experience. Write with authority and help solve the searcher's problems using the target keywords for the page. seoClarity users can leverage Research Grid to find other keywords that the ranking URLs for the target keyword also rank for.
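The length and keyword guidelines above are easy to turn into a rough automated check. The sketch below takes values you have already extracted from a page; the 70 and 150 character limits come from the recommendations above, and the sample inputs are made up.

```python
def audit_on_page(title, description, h1s, keyword,
                  title_max=70, description_max=150):
    """Return a list of on-page issues for one page and its target keyword."""
    issues = []
    kw = keyword.lower()

    if not title:
        issues.append("missing title tag")
    else:
        if len(title) > title_max:
            issues.append(f"title tag is {len(title)} characters (over {title_max})")
        if kw not in title.lower():
            issues.append("target keyword missing from title tag")

    if not description:
        issues.append("blank meta description")
    elif len(description) > description_max:
        issues.append(
            f"meta description is {len(description)} characters (over {description_max})"
        )

    if not h1s:
        issues.append("missing H1")
    elif not any(kw in h1.lower() for h1 in h1s):
        issues.append("target keyword missing from H1")

    return issues


# A generic page like the "Hotels" example in the common mistakes below.
issues = audit_on_page(title="Hotels", description="", h1s=[], keyword="chicago hotels")
```

An exact-match keyword test is crude, so treat the output as a list of pages to review by hand rather than a final verdict.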

At this stage of the audit, the goal is to spot a few quick SEO tweaks on pages where the keywords are ranking between positions 3 and 8. By doing this you can gain wins and reveal an ongoing workflow to improve these elements for the search experience.

Common Mistakes with On-Page Optimization

There are a few common issues with these elements.

- Blank meta description tags may be the most common if SEO is being overlooked.

- Generic title tags are a semi-normal occurrence, for example just the product name or category name (e.g. “Hotels” or “Solutions”).

- Keyword stuffing in the page title: including 2-3 target keywords strung together as opposed to a brief, clear title.

- Using marketing language instead of keywords in the headers. Searchers don’t care about your brand message, they want to know if your page has their answers.

- Not using active or unique language in meta titles and descriptions.

- Broken images and broken links in the body copy. A crawl of the site will reveal these elements.

#11. Relevant Content

Consider the information gaps of the searcher. Do you offer all the information and context needed to choose the best available product or service for their needs, beyond stating the keyword?

Do you help them learn about the product or service and make a well-informed buying decision? Can they see what is unique about your offering or information? This is relevant content that captivates your target audience.

AI Search Note: AI prefers content that answers “why” and “how,” not just “what.” Build depth and clarity into every page.

How to Audit Relevance

Start by analyzing the top-ranking sites for your target keyword on Google. Investigate why they are ranked highly, focusing on what sets them apart and how they provide value.

For example, they might provide supplementary content beyond the target keywords, or incorporate engaging elements like videos and images that enhance the user experience.

Providing relevance is all about considering the contextual aspects of the user experience.

Users of seoClarity have access to an extensive database of over 30 billion keywords, allowing them to align their content strategy with actual user intent. Tools like Topic Explorer help identify key topics and search trends, enabling the creation of highly relevant content that meets user needs and stays ahead of the competition.

Relevance Recommendations

Create a great search experience for your target audience. The best search experience is unique and true to your brand and includes well-written content and a sound site structure.

We’ve put together a complete framework for this that we like to call search experience optimization.

Common Mistakes with Relevance

- Keyword stuffing

- Not considering what the audience wants to learn

- Lack of semantically related keywords in body content.

#12. Structured Data

Structured data from schema.org allows webmasters and SEOs to add semantic context to website code, enhancing how Google displays search listings with details like telephone numbers, reviews, star ratings, and event information.

This enrichment helps attract user attention and can increase organic click-through rates. However, for structured data to be effective, it must be correctly implemented.

During an audit, it's crucial to review the markup for errors and strategically plan the optimal schema for key website templates.

AI Search Note: Because schema is standardized as a universal machine-readable format, it’s recommended to leverage schema to help AI engines identify and interpret the context of your pages and avoid misinterpretation of your content when synthesizing information.

How to Audit Structured Data

Use Google’s Rich Results Test to evaluate your schema markup and its eligibility to appear as a rich snippet on the SERP. Just grab a few important pages and enter them in.

Google Search Console also reports on potential issues with Schema under the Enhancements section (it shows if you have markup added to the site). It shows errors, warnings, and the number of valid URLs in total.

While Google’s tools can get the job done, you’d have to go page by page — which isn’t feasible for an enterprise site! Use an SEO platform to audit schema at scale.

More on that here: Auditing Schema Markup: Confirming Structured Data's Implementation.

Schema Recommendations

- Prioritize schema that will generate Rich Snippets such as breadcrumbs and videos.

- Check out the Rich Snippet Gallery from Google to see which Rich Snippets would make sense for your site.

- Product markup is great for e-commerce sites to achieve star ratings and reviews.

- Other examples include Event schema to achieve extra space on the search results so searchers can go directly to your event page.

- Review managed keywords for universal ranking types to note which competitors might be using Schema to enrich their listings.

- Install using the JSON-LD method. It's recommended by Google and much easier for developers than coding the schema.org code in line (i.e. the Microdata method).
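
To make the JSON-LD method concrete, here is a minimal Python sketch that builds Product markup with a star rating and wraps it in the script tag that goes in the page HTML. The function names and sample values are hypothetical, chosen only for illustration, and the property set is deliberately small. Check Google's documentation for the full list of properties your template needs.

```python
import json


def product_json_ld(name, rating_value, review_count):
    """Build a small schema.org Product object with an AggregateRating."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }


def to_script_tag(data):
    """Wrap the JSON-LD in the script tag that is placed in the HTML."""
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Sample values are made up for the example.
markup = product_json_ld("White Chicago Bulls Hat", 4.6, 128)
print(to_script_tag(markup))
```

Because the markup lives in one script block rather than being woven through the template, developers can generate it from the same data that renders the page.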

seoClarity’s Schema builder is a Chrome plugin that makes it super easy to apply structured data to your site. Try it now for free below!

Common Mistakes with Schema

Not including all of the essential data is a common mistake with schema. Audit tools will flag issues as “required” or “recommended” to help you prioritize the fixes.

Sometimes developers won't include all the information in the tag. After checking a URL with Google's Rich Results Test, take a moment to read through the values to make sure everything is complete.
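
If you want to spot-check completeness yourself, a rough Python sketch like the one below can list the properties that are missing or empty in a piece of parsed JSON-LD. The EXPECTED checklist is a hypothetical placeholder, not Google's official list, so fill it in from the documentation for each schema type you use.

```python
# Hypothetical checklist for illustration only. Confirm the real required
# and recommended properties for each type in Google's documentation.
EXPECTED = {
    "Product": {
        "required": ["name"],
        "recommended": ["image", "description", "aggregateRating"],
    },
}


def missing_properties(markup):
    """Return the missing or empty properties, grouped by priority."""
    rules = EXPECTED.get(markup.get("@type"), {})
    report = {}
    for level in ("required", "recommended"):
        report[level] = [
            prop
            for prop in rules.get(level, [])
            if markup.get(prop) in (None, "", [], {})
        ]
    return report


sample = {"@type": "Product", "name": "White Chicago Bulls Hat", "image": ""}
print(missing_properties(sample))
```

An empty "required" list with a long "recommended" list is a typical "Needs Improvement" result.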

Don’t abuse structured data markup. Google is much more aware of Structured Data manipulation these days and will happily apply a manual action if they feel you are spamming them.

The many ways you can trigger a manual action from Google include:

- Marking up content invisible to users

- Marking up irrelevant or misleading content

- Applying markup site-wide that should only be on key pages

Recommended Reading: 7 Common Issues with Implementing Structured Data

#13. Faceted Navigation

Faceted navigation enhances e-commerce sites by allowing the creation of specific sub-categories, such as different colored Chicago Bulls hats.

While a general category page covers searches for "Chicago Bulls Hats," faceted navigation enables the creation of tailored pages like "White Chicago Bulls Hats," helping users who know their preferred color to directly access what they need, streamlining their shopping experience.

However, this feature can also lead to SEO challenges. If each filter generates a new URL without substantial demand, it can result in Google crawling and indexing duplicate content or unnecessary URLs.

Therefore, while faceted navigation is user-friendly and beneficial for filtering specific products, it's crucial to manage these pages to prevent the indexing of redundant content by Google.

AI Search Note: Too many dynamic URLs confuse AI crawlers. Keep filters crawlable but controlled so AI can comprehend your content quickly. If AI crawlers have to interpret the connections between pages, they have to stitch the data together, which invites errors. For example, one long page of products is easier for AI bots to access and comprehend.

How to Audit Faceted Navigation

Search different filters and product categories in Google to see if your pages show up in the index. Check to see if you’re creating too many pages by finding the URL pattern your site uses to create them, e.g. searching “site:wayfair.com inurl:color=”.
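
If you have a crawl export or a list of indexed URLs, a short Python sketch like this one can surface the URL patterns for you by counting how many URLs carry each query parameter. The example.com URLs and parameter names are made up for illustration.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit


def facet_parameter_counts(urls):
    """Count how many URLs contain each query parameter."""
    counts = Counter()
    for url in urls:
        query = urlsplit(url).query
        # Use a set so a parameter repeated in one URL is counted once.
        params = {key for key, _ in parse_qsl(query, keep_blank_values=True)}
        counts.update(params)
    return counts


# Hypothetical URLs standing in for a crawl export.
urls = [
    "https://www.example.com/hats",
    "https://www.example.com/hats?color=white",
    "https://www.example.com/hats?color=red&brand=nike",
]
for param, count in facet_parameter_counts(urls).most_common():
    print(param, count)
```

Parameters with unexpectedly high counts are the ones to check with a site: search.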

Faceted Navigation Recommendations

Utilizing faceted navigation brilliantly may be the single thing separating the top sites from the pack. On those sites, only pages that align with search volume are offered for indexing, and all of the key on-page elements mentioned above update to match the long-tail target.

- Work with your e-commerce platform to find the right way to implement faceted navigation.

- Review third party tools such as Searchdex, YourAmigo, and Bloomreach to learn more about their offerings to help create this setup on your site and provide a great long-tail search experience for your searchers.

It's essential that each faceted navigation URL functions as a standalone entity, complete with a unique URL, title tag, description tag, and H1 tag, effectively treating each as if it were a top-level category page.

Common Mistakes with Faceted Navigation

Because every possible combination of facets is typically (at least one) unique URL, faceted navigation can create a few problems for SEO:

- It creates a lot of duplicate content, which is bad for various reasons.

- It eats up valuable crawl budget and can send Google incorrect signals.

- It dilutes link equity and passes equity to pages that we don’t even want indexed.

To mitigate these issues, consider the following strategies:

- Keep category, subcategory, and sub-subcategory pages discoverable and indexable.

- Allow indexing only for category pages with one facet selected; use "nofollow" links on multi-facet selections.

- Add a "noindex" tag to pages with two or more facets to prevent indexing even if these pages are crawled.

- Choose which facets like "color" and "brand" could benefit SEO and ensure they are accessible to Google.

- Set up canonical tags correctly: if you intend for faceted URLs to be indexed, don't point their canonical tags to the unfaceted primary category page.

- Avoid creating multiple versions of the same faceted URL; ensure canonical tags point to a single version for targeted SEO.

- Include faceted pages in your XML sitemap to explicitly indicate to Google that these URLs are intended for indexing.

A thorough site crawl can identify these issues, revealing whether the appropriate tags are applied to match search demand and ensure effective SEO for faceted navigation.
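
As a rough illustration of that check, the Python sketch below applies the "one facet is indexable, two or more get noindex" rule from the list above and compares it with the directive a crawl found on each page. The facet parameter names and the crawl row format are assumptions, so adapt them to your platform and crawler export.

```python
from urllib.parse import parse_qsl, urlsplit

# Hypothetical facet parameter names. Swap in the ones your platform uses.
FACET_PARAMS = {"color", "brand", "size"}


def expected_directive(url):
    """Return the robots directive the rule above calls for."""
    query = parse_qsl(urlsplit(url).query)
    selected = [pair for pair in query if pair[0] in FACET_PARAMS]
    return "noindex" if len(selected) >= 2 else "index"


def find_mismatches(crawl):
    """crawl: iterable of (url, directive_found_on_page) tuples."""
    return [
        (url, found, expected_directive(url))
        for url, found in crawl
        if found != expected_directive(url)
    ]


# Made-up crawl rows for the example.
crawl = [
    ("https://www.example.com/hats?color=white", "index"),
    ("https://www.example.com/hats?color=white&size=m", "index"),
]
for url, found, expected in find_mismatches(crawl):
    print(f"{url}: found {found}, expected {expected}")
```

This only covers the facet-count rule. Whether a single-facet page deserves indexing still depends on search demand.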

#14. Accessibility

A website should support people with physical, cognitive, or technological impairments. Google promotes these principles within its developer recommendations.

For SEO, accessibility issues are a combination of the rendering and relevance issues laid out above. Google describes accessibility as meaning “that the site's content is available, and its functionality can be operated, by literally anyone.”

AI Search Note: Accessible sites are cleaner semantically. That same structure helps AI interpret and prioritize content more accurately.

How to Audit Accessibility

The Google Lighthouse Plugin (web.dev) does a great job of outlining accessibility issues. It flags items in the code such as missing alt text on images or names on clickable buttons. It will also look for descriptive text on links (no “click here”).

These elements help bring the site to life for those using screen readers and help Google understand the web at scale.

Be on the lookout for generic or missing link names, missing alt tags, and headers skipping order (e.g. an H2, without an H1).
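
For a quick spot check outside of Lighthouse, here is a minimal Python sketch that scans a page's HTML for those three issues using only the standard library. The class name and the list of generic link phrases are my own assumptions. It also flags every empty alt attribute, even though an empty alt can be valid on decorative images, and it treats image-only links as missing text, so review the results by hand.

```python
from html.parser import HTMLParser

# Hypothetical list of link phrases to treat as generic.
GENERIC_LINK_TEXT = {"click here", "here", "read more", "learn more"}
HEADINGS = ("h1", "h2", "h3", "h4", "h5", "h6")


class AccessibilityAudit(HTMLParser):
    """Collect basic accessibility issues while parsing HTML."""

    def __init__(self):
        super().__init__()
        self.issues = []
        self.last_heading = 0   # 0 means no heading seen yet
        self._link_text = None  # list of text chunks while inside <a>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "img" and not (attrs.get("alt") or "").strip():
            self.issues.append(f"image without alt text: {attrs.get('src', '?')}")
        elif tag in HEADINGS:
            level = int(tag[1])
            if level > self.last_heading + 1:
                previous = f"h{self.last_heading}" if self.last_heading else "no heading"
                self.issues.append(f"heading order: h{level} follows {previous}")
            self.last_heading = level
        elif tag == "a":
            self._link_text = []

    def handle_data(self, data):
        if self._link_text is not None:
            self._link_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._link_text is not None:
            text = " ".join("".join(self._link_text).split()).lower()
            if not text or text in GENERIC_LINK_TEXT:
                self.issues.append(f"generic or missing link text: {text!r}")
            self._link_text = None


audit = AccessibilityAudit()
audit.feed('<h2>Hats</h2><img src="hat.png"><a href="/sale">Click here</a>')
for issue in audit.issues:
    print(issue)
```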

This is a great opportunity to teach the team about these issues and create an accessibility standard for the site.

If you use a crawler like seoClarity’s Clarity Audit, you’ll get notifications on those issues in the site crawl report as well.

Accessibility Recommendations

- Strive for the basics such as alt tags, relevant names on links and buttons, and headers in logical order. These are likely also used by Google to consume the website.

- Some portion of Google's intake on websites comes from Quality Raters instructed to imagine searching for a term with a specific intent, land on a given website, and evaluate its quality. SEOs typically have this eye as well, and if something jumps out that gives a critically poor search experience then it can be added here within an SEO audit.

- For example, content that is hidden behind multiple tabs or is poorly written, translated, or outdated counts. Poor site structure, confusing redirects, and locking visitors into a geo-experience are accessibility issues as well.

#15. Authoritative Content

Authoritative content is unique to a brand and showcases their expertise on a topic. This works to build trust with the target audience.

Give your content a hard look. It should include original facts and research, answer the reader's search query, provide value, and be well-written.

This is also the step where you evaluate the topic clusters for SEO, interlinking your content with blog posts and resources that cover sub-topics.

AI Search Note: AI search weighs E-E-A-T (experience, expertise, authority, trust). Consistent, cited, and original content wins.

How to Audit Your Content for Authoritativeness

Evaluate how the site is targeting “awareness” keywords by filtering Google Search Console queries containing words like “how” (seoClarity users can do it using Search Analytics). Evaluate rankings and performance and look for low-hanging fruit (pages ranking between positions 11 and 40 that need only a little push to appear on page one).
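
If you export the query data, a small Python sketch like the following can apply that filter for you. The row fields (query, position, impressions) are assumptions about the export format, so rename them to match your file.

```python
def low_hanging_fruit(rows, word="how", low=11, high=40):
    """Return queries containing `word` that rank between `low` and `high`,
    sorted by impressions so the biggest opportunities come first."""
    matches = [
        row
        for row in rows
        if word in row["query"].lower().split()
        and low <= row["position"] <= high
    ]
    return sorted(matches, key=lambda row: row["impressions"], reverse=True)


# Made-up rows standing in for a Search Console export.
rows = [
    {"query": "how to choose running shoes", "position": 14.2, "impressions": 900},
    {"query": "running shoes", "position": 12.0, "impressions": 5000},
    {"query": "how to clean running shoes", "position": 27.5, "impressions": 1500},
    {"query": "how to run a marathon", "position": 4.1, "impressions": 3000},
]
for row in low_hanging_fruit(rows):
    print(row["query"], row["position"])
```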

This is also a good step to find content gaps — areas where your competition ranks but you don’t — and create more authoritative content to fill them!

To find them, look for keywords where three competitors are in a prominent position but you aren't.
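
Here is one way that rule could be sketched in Python, assuming you have ranking data shaped as keyword, then domain, then position. I've treated "prominent" as the top 10, which is my assumption, and the domains are placeholders.

```python
def content_gaps(rankings, own_domain, top_n=10, min_competitors=3):
    """Return keywords where at least `min_competitors` competitors rank in
    the top `top_n` positions and `own_domain` does not.

    rankings: {keyword: {domain: position}}
    """
    gaps = []
    for keyword, positions in rankings.items():
        own_position = positions.get(own_domain)
        if own_position is not None and own_position <= top_n:
            continue  # we already rank prominently for this keyword
        competitors = [
            domain
            for domain, position in positions.items()
            if domain != own_domain and position <= top_n
        ]
        if len(competitors) >= min_competitors:
            gaps.append(keyword)
    return gaps


# Placeholder data for illustration.
rankings = {
    "best running shoes": {"a.com": 2, "b.com": 5, "c.com": 8, "mysite.com": 34},
    "trail running shoes": {"a.com": 1, "b.com": 3, "mysite.com": 6},
    "marathon training plan": {"a.com": 4, "b.com": 6, "c.com": 9},
}
print(content_gaps(rankings, "mysite.com"))
```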

You should also conduct research to determine keyword opportunities at different intent stages related to the target keyword. For example, if you’re selling running shoes, an article on “how to choose the best running shoes” and “tips for running a marathon” could expand your authority for the primary terms (running shoes) and move searchers down the funnel without leaving your site.

Authority Content Recommendations

- Produce content about your target keyword as if you were a media outlet assigned to covering it. Cover your “beat” with content like new innovations, best practices, and biggest mistakes. Interact with your community to gain feedback on your content. Share it on social media, and update it with new information.

- Deliver upon the correct searcher intent, analyze the SERP to understand applicable search features, review top-ranking content, and set specific goals.

- seoClarity users can leverage tools such as Content Ideas that will pull in the top People Also Ask and other sources to generate content ideas. Find more on creating content for SEO.

#16. Off-Page SEO

Off-page analysis is a look at everything happening off the website that impacts SEO (i.e. external links).

The quality and quantity of relevant websites sharing and linking to your content is a good sign that your content is worthwhile.

AI Search Note: Mentions and backlinks act as validation signals in AI models. High-quality brand mentions and content serve as external validation for AI search engines.

How to Audit Off-Page SEO

- Identify your top two competitors.

- Run them and your site through a backlink network such as Majestic to see the top linking sites. seoClarity users have access to the Majestic link network through their subscription.

- Review the top links pointing to your competitors.

- Break down where they came from, e.g. sponsorship, resource creation, media mentions, viral content.

- Be on the lookout for links related to your most important keywords.

- Do the same for your site to understand where your links are coming from.

- Look at your analytics to see your referring sites. The best links are the ones that drive traffic, because each one is a path on the Internet that real people found useful. Google’s innovations around off-page analysis will correspond with links that get traffic because they indicate quality.

After this review of backlinks, you’ll have some specific targets and a good understanding of what drives linking in your industry.

Off-Page Analysis Recommendations

- For your link profile, look for at least 75% of your links pointing to a page other than your home page.

- Look for diverse anchor text with natural links to many sources. If all your links point to your home page with your brand as the anchor text, you’re probably only getting low-quality directory listing links.

- Develop a plan to grow your referral traffic through strategic linking. Participate in industry forums and websites.

- Fix broken links and redirect content to maintain the link path for users.
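
To check the 75% guideline from the list above, a short Python sketch can compute the share of backlinks that point to a page other than the home page. It assumes you can export the target URL of each backlink from your link tool, and the example.com URLs are placeholders.

```python
from urllib.parse import urlsplit


def deep_link_share(target_urls):
    """Return the fraction of backlink targets that are not the home page."""
    if not target_urls:
        return 0.0
    deep = sum(1 for url in target_urls if urlsplit(url).path not in ("", "/"))
    return deep / len(target_urls)


# Placeholder backlink targets for illustration.
targets = [
    "https://www.example.com/",
    "https://www.example.com/blog/seo-audit",
    "https://www.example.com/hats/white",
    "https://www.example.com/resources/checklist",
]
share = deep_link_share(targets)
print(f"{share:.0%} of links point beyond the home page")
```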

My favorite link-building tactics are the skyscraper technique and content outreach methods.

After auditing your backlinks, you can develop a plan on how to incorporate these tactics to best your competitors.

Common Mistakes with Off-Page SEO

The biggest mistake SEOs make with off-page tactics is doing outreach without researching the specific value that may be brought to the person they're contacting.

Skipping the competitive research, which can reveal invaluable nuggets on what is driving your competitors' off-page success, is another misfire.

Conclusion

Congratulations! You made it through a site audit. Doesn’t that feel great?

You are now an expert on how to tactically improve your website and perform an SEO audit. When performing this site audit in the future or across other sites, remember:

- Prioritize your site audit tasks in order of impact and level of effort in a grid.

- Address the high-impact, low-effort items first and work from there.

- Show progress on where it is paying off. For the areas you scored “Needs Improvement,” determine the appropriate Standard Operating Procedures and Workflows to train your digital marketing team to set a new standard (Pro-tip: we have a great library of workflows and SEO resources over on our blog!).

Over time, as you continue to address these areas on your site, you’ll see the “Needs Improvement” notes turn into your strengths as you see the SEO performance improve.

Not sure if you’ve remembered it all? Bookmark our convenient, free site audit checklist to guide you through each step of the process.
