A website built with AngularJS (or another single-page application framework, such as React or Vue) lets developers easily set up API calls that render content into templates.

Developers no longer need to hand-code a separate HTML page for every URL. They write templates once, and Angular renders the content into them.

A single-page application can make moving between pages feel almost instantaneous, not to mention make development easier and leave fewer code errors to fix.

While this may seem like a developer's dream, it can actually be an SEO nightmare.

By default, an Angular SPA renders content on the client side. As a result, the HTML the server first sends lacks the elements Google needs to crawl, index, and rank a page in the search results.

Should AngularJS pages be left on the site unoptimized?

IMPORTANT: Long-term support for AngularJS ended on December 31, 2021. Sites still running AngularJS are strongly encouraged to upgrade to Angular.

With that said, in this article, we'll cover the following:

- What is AngularJS?

- Why Use Angular?

- Is Angular Search Engine-Friendly?

- Optimizing Angular Applications for Google Crawling

- How to Prepare for Server-Side Rendering with the Angular Universal Extension

What is AngularJS?

AngularJS is a JavaScript-based platform, or framework, from Google for building web applications that load content within a single page, similar to AJAX.

The definition can sound a bit cryptic to anyone unaccustomed to coding and web technologies, so let's break it down to understand it better.

JavaScript-based platform: Websites are built with code. Commonly, the three key languages used in the process are:

- HTML defining the content on the page,

- CSS used to style and position the content and other page elements, and

- JavaScript (commonly abbreviated as JS), a programming language used to add interactivity and personalize the experience on the site.

One major reason websites use JS is that its code is easier to package and reuse than standard HTML/CSS pages.

With the growing focus on user experience, JavaScript functionality can take your site from quite boring to exciting, with features such as image carousels, interactive banners, zoom options for product images, and other elements users have grown accustomed to on modern websites.

JavaScript is also easier to scale and allows webmasters to add or update improved experiences at a more rapid pace.

Enter Angular, a Google-powered JavaScript framework that adds interactivity to web pages with a twist.

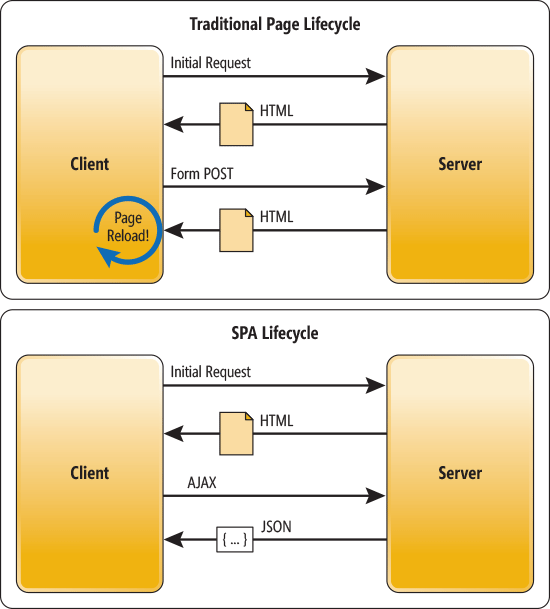

An Angular-based website doesn’t store individual URLs as separate files. Instead, a single application loads the content users request on the client side, much as asynchronously loaded (AJAX) content does.

This means that instead of loading a new page to display different content, the application replaces the content on the same page.

That's why these web properties are often called Single Page Applications (SPAs).

In practical terms, instead of rendering a new page every time a user requests information, all interaction with the content happens on the same page through AJAX calls.

Here’s a visual from Microsoft that illustrates the difference. Note how, in a traditional page lifecycle, the server loads a new HTML page with every request.

In the SPA lifecycle, however, the page loads only once. JavaScript then loads the relevant content, using that page as a shell.
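To make the SPA lifecycle concrete, here is a minimal TypeScript sketch. It is not Angular's actual router; it assumes hypothetical `HistoryLike`, `ContainerLike`, and `ContentLoader` types standing in for the browser's History API, a DOM element, and an API call, so it can run outside a browser. The shell is loaded once, and each navigation only fetches content and swaps it into the existing page.

```typescript
// Hypothetical stand-ins for the browser's History API and a DOM element,
// so the sketch runs outside a browser.
interface HistoryLike {
  pushState(state: unknown, unused: string, url: string): void;
}

interface ContainerLike {
  innerHTML: string;
}

// Hypothetical content source standing in for an API call.
type ContentLoader = (path: string) => Promise<string>;

class MiniSpa {
  // The HTML shell was requested from the server once, at startup;
  // every later navigation only costs one content request.
  apiCalls = 0;

  private readonly history: HistoryLike;
  private readonly container: ContainerLike;
  private readonly load: ContentLoader;

  constructor(history: HistoryLike, container: ContainerLike, load: ContentLoader) {
    this.history = history;
    this.container = container;
    this.load = load;
  }

  async navigate(path: string): Promise<void> {
    this.apiCalls += 1;
    const html = await this.load(path);         // fetch content for this view only
    this.container.innerHTML = html;            // swap it into the existing page
    this.history.pushState({ path }, "", path); // update the URL without a reload
  }
}

// Usage with in-memory fakes: two navigations, no new document loads.
const visitedUrls: string[] = [];
const fakeHistory: HistoryLike = {
  pushState: (_state, _unused, url) => { visitedUrls.push(url); },
};
const appRoot: ContainerLike = { innerHTML: "" };
const spa = new MiniSpa(fakeHistory, appRoot, async (path) => `<h1>Content for ${path}</h1>`);

async function demo(): Promise<void> {
  await spa.navigate("/products");
  await spa.navigate("/about");
  console.log(appRoot.innerHTML);      // <h1>Content for /about</h1>
  console.log(visitedUrls.join(", ")); // /products, /about
  console.log(spa.apiCalls);           // 2
}
demo();
```

The same property that makes this fast for users is what causes the SEO problem: the server only ever sends the empty shell, and the content arrives later through JavaScript.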

Why Use Angular?

Building websites with the Angular framework has three main benefits:

- Content loads much faster on an SPA, since there is no need to load new HTML every time. This results in a far better user experience.

- It also speeds up development. Developers build only one page and control everything else with JavaScript.

- As a result, developers make fewer mistakes, so fewer users encounter problems when browsing the site, and technical teams spend less time revisiting their code to fix bugs. A win-win for everyone.

Unfortunately, however, Angular presents some major challenges for SEO.

Is Angular Search Engine-Friendly?

To answer this question, we must discuss how Google renders JS in the first place. The process essentially takes two steps:

- First, the search engine crawls and indexes the HTML content on the page as is, without executing JavaScript.

- Then, it renders whatever JS content is on the page and indexes it accordingly.

For this to happen, Googlebot first crawls the URL and then adds it to a rendering queue. It may take 2-4 weeks for Google to return to that URL, load it with its Chrome-based renderer, and render the page's JavaScript.

While this method lets Google crawl your site's content, the process is drawn out, and changes may go undetected for a few weeks to a month.

This, combined with the nature of AngularJS, creates some challenges.

For one, by loading page content through API calls, single-page technology removes all crawlable content from the page’s initial HTML.

The time it takes for those API calls to populate content in the DOM also matters: you want the content to appear within the window Google is willing to wait while rendering the page.

Unlike a traditional HTML page that contains all of its content, an SPA's initial HTML includes only the basic page structure. The actual wording appears later, through a dynamic API call.

For SEO, this means the page's source code contains almost no content. As a result, the elements Google would crawl are not there. If you were to view the source code or review Google's cached copy during this window, you would see a blank page.
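A rough way to see the difference is to strip everything a non-rendering crawler would ignore and look at what text remains. The TypeScript sketch below is a regex-based approximation for illustration, not a real HTML parser, and both sample pages are hypothetical: a client-rendered shell with an empty `<app-root>` and the same page after rendering.

```typescript
// Rough approximation of what a crawler that does not execute JavaScript can
// read: drop <script>/<style> blocks and tags, keep the remaining text.
// Regex-based, for illustration only; not a real HTML parser.
function visibleText(html: string): string {
  return html
    .replace(/<script\b[\s\S]*?<\/script>/gi, " ")
    .replace(/<style\b[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Hypothetical initial HTML of a client-rendered Angular page: an empty shell.
const spaShell = `<!doctype html>
<html>
  <head><title></title></head>
  <body>
    <app-root></app-root>
    <script src="main.js"></script>
  </body>
</html>`;

// The same hypothetical page once its content has been rendered into <app-root>.
const renderedPage = `<!doctype html>
<html>
  <head><title>Blue Widgets</title></head>
  <body>
    <app-root><h1>Blue Widgets</h1><p>Durable widgets in three sizes.</p></app-root>
  </body>
</html>`;

console.log(JSON.stringify(visibleText(spaShell))); // ""
console.log(visibleText(renderedPage)); // Blue Widgets Blue Widgets Durable widgets in three sizes.
```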

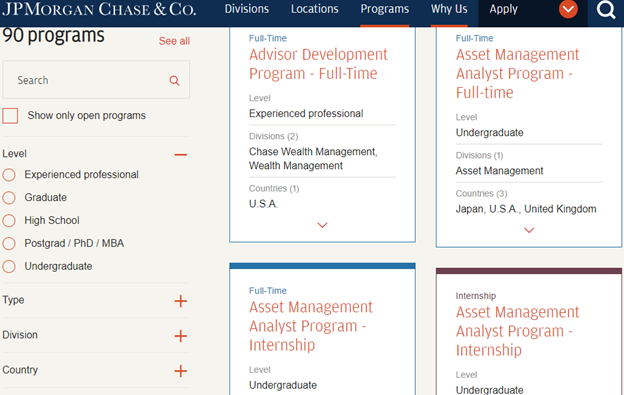

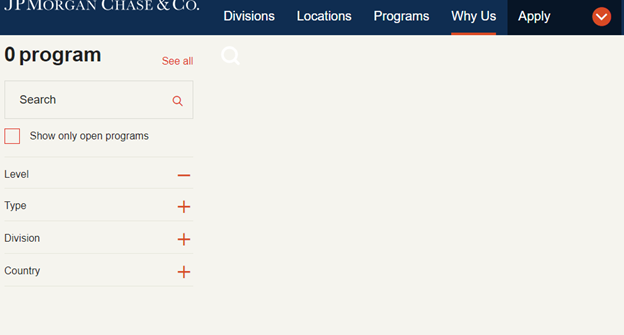

Moreover, search engines cannot cache SPAs either. The two visuals below illustrate this problem well. The top one shows what a user sees when visiting an Angular page.

The other presents the actual content Google can access and crawl.

What the user sees:

What Google caches:

A stark difference, isn’t it?

For users, the page works like any other. They can navigate the site easily. They can access, read, and interact with the information.

Google, on the other hand, sees hardly anything on the page, and certainly not enough to index it properly.

Tip: Use the URL Inspection tool in Google Search Console to see your pages the way Googlebot sees them.

That’s the challenge SEOs face with Angular. Such applications lack everything that’s needed to rank them well.

Luckily, there’s good news, too.

Optimizing Angular Applications for Google Crawling

There are a couple of ways to ensure that your Angular site can be crawled cleanly.

Use Google CodeLab and pushState to Change the URL

Google's CodeLab walks developers through the most important SEO elements that need to render on a page.

This includes using pushState() and the History API to change the URL; adding important elements on-page or via the DOM (meta titles, descriptions, canonical tags, etc.); making sure all internal links appear as real links in the code (and not as onclick events); and loading content dynamically.
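As a rough illustration of the on-page elements listed above, the TypeScript sketch below builds the title, meta description, and canonical tags for a route, plus an internal link written as a real anchor. The route table, URLs, and helper names are hypothetical. In an Angular app, the built-in `Title` and `Meta` services from `@angular/platform-browser` are the usual way to set the title and meta tags at runtime; this framework-free version only shows what each route should end up exposing.

```typescript
// Per-route SEO metadata. The route table and field names are hypothetical.
interface RouteSeo {
  title: string;
  description: string;
  canonical: string; // absolute URL
}

const routeSeo: Record<string, RouteSeo> = {
  "/widgets/blue": {
    title: "Blue Widgets | Example Store",
    description: "Durable blue widgets in three sizes.",
    canonical: "https://www.example.com/widgets/blue",
  },
};

// Escape text so it is safe inside HTML text and double-quoted attributes.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the <head> elements a crawler should find for a given route.
function headTagsFor(path: string): string {
  const seo = routeSeo[path];
  if (!seo) throw new Error(`No SEO metadata defined for ${path}`);
  return [
    `<title>${escapeHtml(seo.title)}</title>`,
    `<meta name="description" content="${escapeHtml(seo.description)}">`,
    `<link rel="canonical" href="${escapeHtml(seo.canonical)}">`,
  ].join("\n");
}

// Internal links as real anchors with an href (not click handlers),
// so crawlers can discover the target URL.
function crawlableLink(path: string, label: string): string {
  return `<a href="${escapeHtml(path)}">${escapeHtml(label)}</a>`;
}

console.log(headTagsFor("/widgets/blue"));
console.log(crawlableLink("/widgets/blue", "Blue widgets")); // <a href="/widgets/blue">Blue widgets</a>
```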

The biggest issue with this method is that content may take longer to render. And while it surfaces some of the necessary elements, it's not always easy to ensure that all of the content is fully rendered and visible on the page.

Also, if you use this method, you need to customize your analytics setup so that each view of a new route is recorded as its own visit and page view.
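Because an SPA loads the document only once, a default analytics snippet may record a single page view per session. The TypeScript sketch below shows one way to report a page view per client-side route change. `PageViewSender` is a hypothetical interface, not a real vendor API; you would wire it to your analytics library's own page-view call. In Angular, `onRouteChange` would typically be called from a subscription to the router's `events` stream, filtered to `NavigationEnd` events.

```typescript
// Hypothetical analytics interface; substitute the page-view call of the
// analytics library you actually use.
interface PageViewSender {
  sendPageView(path: string, title: string): void;
}

// Reports one page view per client-side route change and ignores repeated
// notifications for the path that was just reported.
class RouteChangeTracker {
  private lastPath: string | null = null;
  private readonly sender: PageViewSender;

  constructor(sender: PageViewSender) {
    this.sender = sender;
  }

  onRouteChange(path: string, title: string): void {
    if (path === this.lastPath) return; // same view re-notified: not a new page view
    this.lastPath = path;
    this.sender.sendPageView(path, title);
  }
}

// Usage with a fake sender that records what would be sent.
const sentViews: string[] = [];
const tracker = new RouteChangeTracker({
  sendPageView: (path, title) => { sentViews.push(`${path} (${title})`); },
});
tracker.onRouteChange("/", "Home");
tracker.onRouteChange("/pricing", "Pricing");
tracker.onRouteChange("/pricing", "Pricing"); // duplicate notification, skipped
console.log(sentViews.length); // 2
```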

Server-Side Rendering with Angular Universal

A viable option, however, is to render content server-side with the Angular Universal extension. When a page is requested, the server renders its HTML, creating a version that Google and other search engines can access.
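The core idea of server-side rendering can be sketched without Angular at all: on each request, the server waits for the page's data and returns a complete HTML document, so the content is already in the initial response. Angular Universal does this by running your real Angular app on the server; the TypeScript below is a framework-free sketch, and the `PageData` shape, data loader, template, and `main.js` bundle name are hypothetical.

```typescript
// Hypothetical shape of the data a page needs.
interface PageData {
  title: string;
  heading: string;
  body: string;
}

// Hypothetical data source (for example, the same API the client app calls).
type DataLoader = (path: string) => Promise<PageData>;

// Escape text before inserting it into HTML.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Runs on the server for each request: wait for the data, then return a
// complete HTML document so crawlers receive the content immediately.
async function renderOnServer(path: string, load: DataLoader): Promise<string> {
  const data = await load(path);
  return [
    "<!doctype html>",
    "<html>",
    `<head><title>${escapeHtml(data.title)}</title></head>`,
    "<body>",
    `<app-root><h1>${escapeHtml(data.heading)}</h1><p>${escapeHtml(data.body)}</p></app-root>`,
    // The client-side bundle still loads and takes over the page for users.
    '<script src="main.js"></script>',
    "</body>",
    "</html>",
  ].join("\n");
}

// Usage with a fake data loader.
renderOnServer("/widgets/blue", async () => ({
  title: "Blue Widgets",
  heading: "Blue Widgets",
  body: "Durable widgets in three sizes.",
})).then((html) => console.log(html));
```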

Services such as Prerender.io create cached versions of the content and serve them in a form that Googlebot can crawl and index.

The downside of Prerender.io is cost: you pay to create the renders, and because pricing also depends on the number of pages on your site, the cost can escalate quickly.

Newer services such as huckabuy.com create a static pre-render of your site that is already enhanced with schema markup for SEO. Google also offers Rendertron, an open-source rendering solution based on headless Chromium.

Pre-rendering gives search engines an HTML snapshot so they can understand your page. The one risk with pre-rendering, however, is that Google could stop supporting it at any time, leaving the site without an indexable solution again.

That said, most experts in the industry believe that Google will continue to support this option and may even make it a preferred method, since it would let Google speed up crawling and rendering site content.

Google's documentation has been updated to include dynamic rendering as a solution, though it describes dynamic rendering as a workaround and recommends server-side rendering, static rendering, or hydration for the long term.
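Dynamic rendering usually comes down to one routing decision: requests from known crawlers get a pre-rendered HTML snapshot, while everyone else gets the normal client-side app. The TypeScript sketch below shows that decision only. The bot patterns are an assumption you would adapt to your needs, and user-agent matching is a heuristic, not verification that a request really comes from a search engine.

```typescript
// Hypothetical list of crawler user-agent patterns; adjust to the bots you
// need to serve. User-agent matching is a heuristic, not verification.
const BOT_PATTERNS: RegExp[] = [/googlebot/i, /bingbot/i, /duckduckbot/i, /yandexbot/i, /baiduspider/i];

type Variant = "prerendered-snapshot" | "client-side-app";

function isSearchBot(userAgent: string): boolean {
  return BOT_PATTERNS.some((pattern) => pattern.test(userAgent));
}

// Dynamic rendering: crawlers get a pre-rendered HTML snapshot (for example,
// from a prerendering service or a headless-browser renderer), while regular
// visitors get the normal client-side Angular app.
function chooseVariant(userAgent: string): Variant {
  return isSearchBot(userAgent) ? "prerendered-snapshot" : "client-side-app";
}

console.log(chooseVariant("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)")); // prerendered-snapshot
console.log(chooseVariant("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36")); // client-side-app
```

Both variants should contain the same content; the snapshot only spares the crawler from executing the JavaScript itself.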

How to Prepare for Server-Side Rendering with the Angular Universal Extension

Below is a brief overview of the process from Angular’s official site. Before going through it, though, I recommend moving to a controlled testing environment.

The Process:

- Install dependencies.

- Prepare your app by modifying both the app code and its configuration.

- Add a build target, and build a Universal bundle using the CLI (command-line interface) with the @nguniversal/express-engine schematic. In Angular 17 and later, this functionality ships with the Angular CLI as the @angular/ssr package, added with ng add @angular/ssr.

- Set up a server to run Universal bundles.

- Pack and run the app on the server.

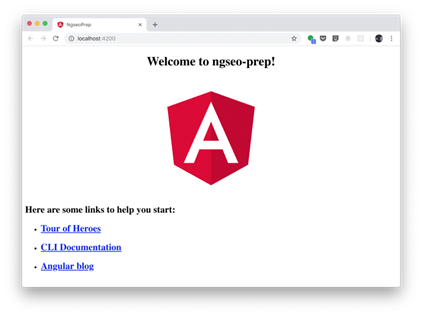

The result? A cached page that looks like this:

Granted, it’s not visually striking, but a user wouldn’t see it anyway. The search engine, on the other hand, would find all the information it needs to crawl and index the page properly, which is exactly your objective.

Client Results

When one client moved to AngularJS, the switch hurt the site's overall search visibility. Our team provided a remediation strategy to ensure the site was visible to search engines.

The client's team continued to have issues and needed concrete execution steps to boost competitive visibility, traffic, and revenue from organic search.

In addition to the remediation strategy, our team QA'd their internal teams' implementation of static site rendering, and the client saw a 54% increase in #1 rankings and a 43% increase in top 3 rankings within the first month post-launch.

Another client had just implemented AngularJS and saw site traffic rapidly decline 25% YoY as a result. The client implemented dynamic rendering of page titles, meta descriptions, and H1 tags with Prerender.io caching.

Our team provided a remediation plan and QA'd the execution. Top 3 rankings grew 55% and top 10 rankings grew 29% 90 days post-launch. Overall traffic grew 34% YoY 90 days post-launch.

Key Takeaways

Angular offers incredible opportunities to improve user experience and cut development time. Unfortunately, it also causes serious challenges for SEO.

For one, out of the box, SPAs don't serve the HTML elements required for content to be crawled and indexed for rankings.

Luckily, SEOs can overcome these challenges with the following options:

- Use dynamic rendering with a platform such as Prerender.io or Huckabuy.

- Create an Angular and HTML hybrid called Initial Static Rendering.

- Use Google CodeLab to add the essential items for indexing.

- Use the Angular Universal extension to create static versions of the site for crawling and indexing.

For Angular specifically, Angular Universal may offer the optimal solution. For teams that want to keep enhancing their site without being restricted to Angular alone, dynamic rendering offers the best solution.

Whichever method you choose, you will want to work through the process hand in hand with a development team with experience in working with clients and their websites.

Our team at seoClarity is well-versed in making recommendations and providing QA testing for teams regardless of the solution.

Editor's Note: This post was originally published in March 2019 and has been updated to reflect industry trends.
