SEO Crawl Budgets:

Monitoring, Optimizing, and Staying Ahead

The term crawl budget refers to the number of pages a search engine bot will crawl and index on a website within a specific time frame.

A site's crawl budget plays a critical role in its overall SEO success.

Various crawl-related problems can hinder the search engine’s ability to reach a website’s most critical content before the allocated crawl budget is up.

The result? Google never discovers those content assets or realizes they’ve been updated recently.

In this guide, you’ll learn everything there is to know to prevent this from happening on your site.

We’ll discuss, specifically:

Finally, at the end of this guide, we also answer some of the most frequently asked questions about crawl budget.

But let’s start with some background information.

The internet is a vast universe. It’s practically impossible to comprehend the breadth of websites, pages, and other online assets.

This vastness creates a challenge for search engines as the web is far too big for them to crawl and index everything.

At the same time, search engines like Google must keep their index fresh and ensure that it includes all of the important pages and content.

As a result, search engines face certain tough decisions when indexing content. They have to:

All of those decisions apply limitations to how search engines crawl individual websites.

Now, we admit that this is not an issue for a small site. It's doubtful that Google would omit any pages on a typical small business website with tens of pages only.

The situation is different for an enterprise web entity with millions of pages (if not more). Such a website will, most likely, face issues with getting all that content indexed.

That’s where optimizing for crawl budget comes in handy.

With consistent monitoring and optimization, an enterprise website can ensure its crawl budget is maximized so that its most critical content gets crawled and indexed.

Let’s see how this is done, typically, starting from the beginning.

A crawl budget is a predetermined number of requests for assets that a crawler will make on a website. A site's crawl budget is determined by the search engine, and once it is used up, the crawler will stop accessing content on the site.

Crawl budget isn’t the same for every website as search engines use a wide range of criteria to determine how long a crawler should spend on a given web entity.

As with many things related to Google’s algorithm, we don’t know all of those factors. However, we know of a few:

As a best practice, the number of requests a crawler needs to make to access all of the site’s content should be lower than the crawl budget.

Unfortunately, this isn’t always the case, which leads to serious indexation problems.

Recommended Reading: How to Increase Crawl Efficiency

So far, we’ve considered the crawl budget from your point of view - a website owner or marketer, tasked with increasing search visibility. But the budget affects the search engine in many regards too.

According to Google’s own Gary Illyes, for Googlebot, the crawl budget is comprised of two elements:

Leaving its technical aspects aside, crawling a site works quite similarly to having it visited by a human user.

Googlebot requests access to various assets - pages, images or other files on the server - similar to how a web browser does when operated by a user.

As a result, crawling uses up server resources and bandwidth limits allocated to the website by its host.

Too much crawling, therefore, can have a similar effect to a sudden surge of visitors landing on your site at once. Simply put: it can break the site, slowing down its performance or overloading it completely.

Crawl rate prevents the bot from making too many requests too often and disrupting your site’s performance.

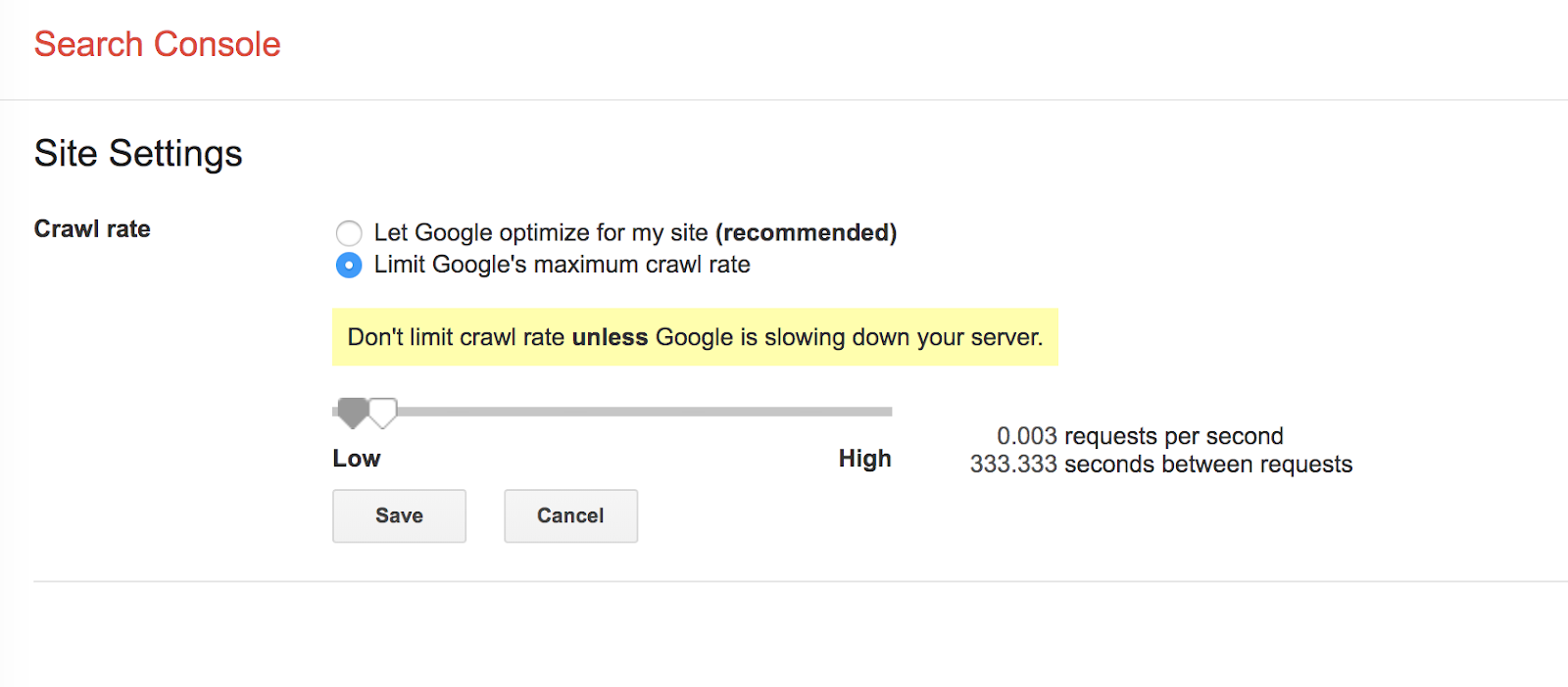

Now, Google lets webmasters influence their site's crawl rate through Google Search Console.

With this functionality, site owners can suggest to the crawler the rate at which it should access the site.

Unfortunately, there are drawbacks to doing so manually. Setting the rate too low will affect the frequency at which Google discovers your new content, and setting it too high can overload the server.

In fact, the site might suffer from two issues:

Unless you’re absolutely sure, we recommend leaving the process of optimizing the crawl rate to Google. Instead, focus on ensuring that the crawler can access all critical content within the available crawl budget.

If there’s no demand from indexing, there will be low activity from Googlebot, even if the crawl rate limit hasn’t been reached. Crawl demand helps crawlers determine whether it's worth accessing the website again.

There are two factors that affect the crawl demand:

The goal here is to have your site crawled properly without creating potential issues that would negatively impact user experience.

According to Google, the biggest issue that affects crawl budget is low-value URLs.

Having too many URLs that present little or no value, yet are still on the crawler’s path, will use up the available budget and prevent Googlebot from accessing more important assets.

The problem is that you might not even realize that you have many low-value URLs, as many are created without your direct involvement. Let’s see how that typically happens.

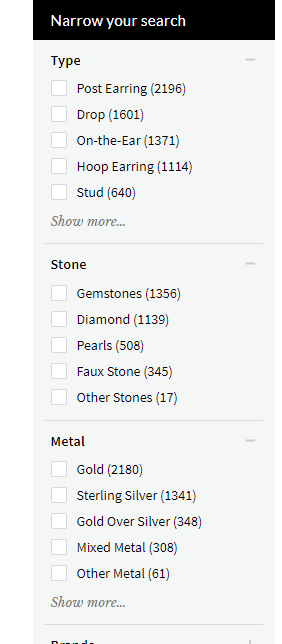

Faceted navigation refers to different ways users can filter or sort results on a web page based on different criteria. For example, any time you use Ross-Simons’ filters to fine-tune the product listing, you use faceted navigation.

Although helpful for users, faceted navigation can create issues for search engines.

Filters often create dynamic URLs which, to Googlebot, might look like individual URLs to crawl and index. This can use up your crawl budget and create a lot of duplicate content issues on the site.

Faceted navigation can also dilute the link equity on the page, passing it to dynamic URLs that you don’t want indexed.

There are ways to overcome this:

Add a “nofollow” tag to any faceted navigation link.

This will minimize the crawler’s discovery of unnecessary URLs and therefore reduce the potentially explosive crawl space that can occur with faceted navigation.

Add a “noindex” tag to inform bots of which pages are not to be included in the index.

This will remove pages from the index, but there will still be crawl budget wasted and link equity that is diluted.

Use a robots.txt disallow. For URLs with unnecessary parameters, add a disallow rule in robots.txt that covers the directory or parameter pattern. Search engines remain free to crawl the URLs that you do want a bot to crawl.

For example, we could disallow the price filter for products under $100 in the robots.txt file:

Disallow: *?prefn1=priceRank&prefv1=%240%20-%20%24100
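Before deploying a wildcard rule like this one, it can help to check which URLs it would actually catch. The snippet below is a minimal sketch, assuming the wildcard semantics Google documents for robots.txt paths (`*` matches any run of characters, a trailing `$` anchors the end of the URL). The function name `rule_matches` and the sample paths are hypothetical and only serve as an illustration.

```python
import re

def rule_matches(pattern: str, path: str) -> bool:
    """Return True if a robots.txt path pattern matches a URL path plus query."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every literal chunk, then join the chunks with ".*" for each "*".
    regex = ".*".join(re.escape(chunk) for chunk in pattern.split("*"))
    if anchored:
        regex += r"\Z"
    # Rules are matched from the start of the path, hence re.match.
    return re.match(regex, path) is not None

RULE = "*?prefn1=priceRank&prefv1=%240%20-%20%24100"

# Hypothetical category URLs: one filtered by price, one unfiltered.
filtered = "/rings?prefn1=priceRank&prefv1=%240%20-%20%24100"
unfiltered = "/rings"

print(rule_matches(RULE, filtered))
print(rule_matches(RULE, unfiltered))
```

Running a list of real URLs from your own crawl through a check like this shows whether a rule is too broad or too narrow before it goes live.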

Canonical tags allow you to instruct Google that a group of pages have a preferred version. Link equity can be consolidated into the chosen preferred page utilizing this method. However, the crawl budget will still be wasted.

Similarly, URL parameters - like session IDs or tracking IDs - or forms sending information with the GET method will create many unique instances of the same URL.

Those dynamic URLs, in turn, can cause duplicate content issues on the site and use up much of the crawl budget, even though none of those assets are, in fact, unique.
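To see how many of your crawled URLs are really the same page, you can strip the parameters that do not change the content and compare what is left. This is a minimal sketch using Python's standard `urllib.parse` module; the `IGNORED_PARAMS` list and the example URLs are assumptions, so replace them with the session and tracking parameters your own site uses.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical parameters that do not change what the page shows.
IGNORED_PARAMS = {"sessionid", "sid", "utm_source", "utm_medium", "utm_campaign"}

def normalize_url(url: str) -> str:
    """Drop ignored parameters and sort the rest so duplicates compare equal."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()
    # Rebuild the URL without the fragment and without the ignored parameters.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

urls = [
    "https://example.com/shoes?utm_source=news&color=red&sessionid=abc123",
    "https://example.com/shoes?color=red",
    "https://example.com/shoes?sid=42&color=red",
]

# All three variants collapse to a single normalized URL.
unique_pages = {normalize_url(u) for u in urls}
print(unique_pages)
```

A large gap between the number of crawled URLs and the number of normalized URLs is a sign that parameters are eating into the crawl budget.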

A "soft 404" occurs when a web server responds with a 200 OK HTTP status code, rather than the 404 Not Found, even though the page doesn’t exist.

In this case, the Googlebot will attempt to crawl the page, using up the allocated budget, instead of moving on to actual, existing URLs.
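Soft 404s can be flagged during a site audit with a simple heuristic: a page answers with status 200, but its body reads like an error page. The sketch below assumes you already have the status code and HTML body for each URL (for example, from a crawler export). The phrase list and the function name `looks_like_soft_404` are hypothetical, and a check like this will produce false positives, so treat the result as a list of candidates to review.

```python
# Hypothetical phrases that often appear on "not found" templates.
NOT_FOUND_PHRASES = (
    "page not found",
    "page doesn't exist",
    "no longer available",
)

def looks_like_soft_404(status_code: int, body: str) -> bool:
    """Flag responses that return 200 OK but read like an error page."""
    if status_code != 200:
        # A real 404 or 410 is not a *soft* 404.
        return False
    text = body.lower()
    return any(phrase in text for phrase in NOT_FOUND_PHRASES)

print(looks_like_soft_404(200, "<h1>Page not found</h1>"))
print(looks_like_soft_404(404, "<h1>Page not found</h1>"))
print(looks_like_soft_404(200, "<h1>Gold rings</h1>"))
```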

Unfortunately, pages that have been hacked can increase the list of URLs a crawler might attempt to access. If your site got hacked, remove those pages from the site and serve Googlebot with a 404 Not Found response code.

Seeing hacked pages isn’t anything new to Google and the search engine will drop them from the index promptly, but only if you serve it a 404.

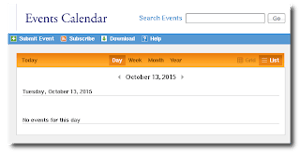

Infinite spaces are near-endless lists of URLs that Googlebot will attempt to crawl.

Infinite spaces can occur in many ways, but the most common cause is URLs auto-generated by the site search.

Some sites list on-site searches on pages, which leads to the creation of an almost infinite number of low-value URLs that Google will consider crawling.

Another common scenario is displaying a calendar on a page with a “next month” link. Each URL will have this link, meaning that the calendar can generate thousands of unnecessary URLs.

Google has suggested methods to deal with infinite spaces, such as eliminating the entire categories of those links in the robots.txt file. Doing so will restrict Googlebot from accessing those URLs from the start and save your crawl budget for other pages.

A broken link is a link that points to a page that does not exist. This can happen because the URL in the link is wrong, or because the page has been removed while the internal link pointing to it has remained.

A redirected link points to a page that no longer exists at that URL and now redirects elsewhere, often through a series of redirect jumps.

Both issues can affect crawl budget, particularly the redirected link. It can send the crawler through a redirect chain, using up the available budget on unnecessary redirect jumps.

To learn more about URL redirects, visit our guide: A Technical SEO Guide to URL Redirects.

Site speed matters for the crawl budget too. If the load time is too long when Googlebot tries to access a page, it might give up and move to another website altogether.

A response time of more than two seconds can result in a greatly reduced crawl budget for the site, and you may see the following message:

"We’re seeing an extremely high response-time for requests made to your site (at times, over 2 seconds to fetch a single URL). This has resulted in us severely limiting the number of URLs we’ll crawl from your site, and you’re seeing that in Fetch as Google as well."

Alternate URLs defined with the Hreflang tag can also use up the crawl budget.

Google will crawl them for a simple reason: the search engine needs to ensure that those assets are identical or similar, and are not redirecting to spam or other content.

In addition to HTML content, CSS or JavaScript files also consume crawl budget. Years ago, Google wasn’t crawling these files, so it wasn’t that big of an issue.

Now that Google crawls these files (especially to render pages and work out things like where the ads appear, what is above the fold, and what might be hidden), they count against the crawl budget, yet many people still haven’t put time into optimizing them.

The XML sitemap plays a critical role in optimizing the crawl budget. For one, Google will prioritize crawling URLs that are included in the sitemap over the ones it discovers when crawling the site.

But that doesn’t mean that you should add all pages to the sitemap. Doing so will result in Google prioritizing all content, and wasting your crawl budget on accessing unnecessary assets.

More and more websites launch AMP versions of their content. In May 2018, there were over 6 billion AMP pages on the web and the number has certainly grown significantly since then.

Google has confirmed already that AMP pages consume crawl budgets too since Googlebot has to crawl those assets as well.

It does so to validate the page for errors and to ensure that the content is the same between the regular page and its AMP counterpart.

Based on the information above, you can see how severe issues with your site's crawl budget can be. The good news is that you can also maximize the time crawlers allocate for your website.

There are some generic things that help such as improving overall site speed, avoiding duplicate content, eliminating broken pages, or simplifying the site’s architecture.

However, below are other factors you should optimize to save the crawl budget from going to waste.

As previously stated, the key to optimizing for the crawl budget is ensuring that the number of crawlable URLs doesn't exceed the budget. Otherwise, Google will cut off crawling the site once the budget is up.

But with fewer URLs to crawl than the allocated number of requests, you stand a much greater chance for crawlers to access all your content.

You can achieve this in many different ways, but here are some of the most common approaches:

Any broken link or redirect is an obstacle for Googlebot. When it hits a broken link, the crawler might consider that there is nowhere else to go and move on to another website. With redirects, it can travel through some hops. However, even Google recommends not exceeding five hops; otherwise, the crawler will move on.

To avoid those issues, ensure that all redirected URLs point to the final destination directly, and fix any broken links.
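Pointing every redirect at its final destination can be automated once you have a map of your redirects. The function below is a minimal sketch, assuming the redirects are available as a simple source-to-target dictionary (for example, exported from your server configuration). The name `flatten_redirects` and the sample paths are hypothetical.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every redirected URL straight to the last URL in its chain."""
    flat = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        # Follow the chain until we reach a URL that no longer redirects.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat

# A three-hop chain: /old -> /newer -> /newest -> /final
chain = {"/old": "/newer", "/newer": "/newest", "/newest": "/final"}
print(flatten_redirects(chain))
```

With the flattened map in place, each old URL needs a single hop to reach its destination, and internal links can be updated to point at the final URL directly.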

Removing any links to 404 pages also optimizes the crawl budget usage. As the website gets older, the risk grows that internal links on your site point to URLs that are no longer active.

We’ve talked about the issue of faceted navigation already. You know that filters on a page can create multiple low-value URLs that use up the crawl budget.

But that doesn’t mean that you can’t use the faceted navigation. Quite the contrary. However, you must take measures to ensure that crawlers don't try to access dynamic URLs that the navigation will create.

To solve this faceted navigation conundrum, there are a few solutions that you can implement depending on what parts of your site should be indexed.

"Noindex" tags can be implemented to inform bots of which pages not to include in the index. This method will remove pages from the index, but there will still be a crawl budget spent on them and link equity that is diluted.

Canonical tags allow you to instruct Google that a group of similar pages has a preferred version of the page.

The simplest solution is adding the “nofollow” tag to those internal links. It will prevent crawlers from following those links and attempting to crawl the content.

You do not have to physically delete those pages. However, blocking crawlers from accessing them will immediately reduce the number of crawlable URLs to free up crawl budget.

You can save much of the crawl budget from going to waste by simply blocking crawlers from accessing URLs that you don’t need to be indexed.

These could be pages with legal information, tags, content categories, or other assets that do not provide much value for searchers.

The simplest way to do so is by either adding the “noindex” tag to those assets or a canonical tag pointing to a page you want to index instead.

As we’ve discussed already, Google will prioritize URLs in the sitemap over those it discovers while crawling the site.

Unfortunately, though, without regular updates, the sitemap might end up clogged with inactive URLs or pages you don’t necessarily need to have indexed. Regular updates to the sitemap and cleaning up those unwanted URLs will free up crawl budget as well.
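Sitemap cleanup is easy to script. The following is a minimal sketch using Python's standard `xml.etree.ElementTree` module and the standard sitemap namespace. It assumes you already have a set of URLs you no longer want listed (inactive or not worth indexing); the function name `prune_sitemap` and the sample sitemap are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def prune_sitemap(xml_text: str, unwanted_urls: set[str]) -> str:
    """Return the sitemap XML without the <url> entries listed in unwanted_urls."""
    ET.register_namespace("", SITEMAP_NS)  # keep the default namespace on output
    root = ET.fromstring(xml_text)
    for url_el in list(root.findall(f"{{{SITEMAP_NS}}}url")):
        loc = (url_el.findtext(f"{{{SITEMAP_NS}}}loc") or "").strip()
        if loc in unwanted_urls:
            root.remove(url_el)
    return ET.tostring(root, encoding="unicode")

SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-page</loc></url>
</urlset>"""

cleaned = prune_sitemap(SAMPLE_SITEMAP, {"https://example.com/old-page"})
print(cleaned)
```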

A robots.txt file tells search engine crawlers which pages or files the crawler can or can't request from your site. Typically, the file is used to prevent crawlers from overloading sites with requests. However, it can also help steer Googlebot away from certain sections of the site and free up the crawl budget.

One thing to remember, though, is that robots.txt controls crawling, not indexing. Googlebot respects disallow rules, but a disallowed URL can still end up in the index if other pages link to it.

Google has stated openly that improving site speed doesn't just provide a much better user experience, but also increases the crawl rate. Making pages load faster, therefore, is bound to improve the crawl budget usage.

Optimizing page speed is a vast topic, of course, and involves working on many technical SEO factors.

At seoClarity, we recommend that you at minimum enable compression, remove render-blocking JavaScript, leverage browser caching, and optimize images to ensure that Googlebot has enough time to visit and index all of your pages.

Search engine bots find content on a site in two ways. First, they consult the sitemap. But they also navigate the site by following internal links.

This means that if a certain page is well interlinked with other content, the chances of the bot discovering it are significantly higher. An asset with few or no internal links will most likely remain unnoticed by the bot.

But this also means that you can use internal links to direct crawlers to pages or content clusters that you absolutely need to have indexed.

For example, you can link those pages from content with many backlinks and high crawl frequency. By doing so, you’ll increase the chances that Googlebot will reach and index those pages quickly.

Optimizing the entire site’s architecture can also help with freeing up the crawl budget. Having a flat but wide architecture, meaning that the most critical pages are not too far from the homepage, will make it easy for Googlebot to reach those assets within the available crawl budget.
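How far a page sits from the homepage can be measured as click depth: the smallest number of internal links a crawler has to follow to reach it. The sketch below runs a breadth-first search over an internal link graph. It assumes you have that graph as a dictionary of page to linked pages (for example, from a site crawl); the name `click_depths` and the sample pages are hypothetical.

```python
from collections import deque

def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage; returns the depth of each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

site = {
    "/": ["/category", "/blog"],
    "/category": ["/product"],
    "/product": ["/product/reviews"],
    "/orphan": [],  # no page links here
}

depths = click_depths(site, "/")
print(depths)

# Pages that exist but cannot be reached by following internal links.
orphans = set(site) - set(depths)
print(orphans)
```

Pages with a high depth, and pages that never show up in the result at all, are the first candidates for extra internal links.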

An important aspect of optimizing your crawl budget is monitoring how Googlebot visits your site and how it accesses the content.

There are three ways to do it, two of which lie within GSC:

Google Search Console includes a breadth of information about your site’s standing in the index and its search performance. It also provides certain insight into your crawl budget.

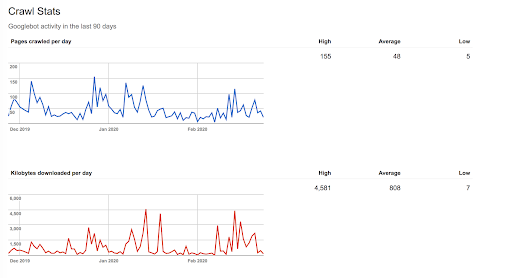

First of all, in the Legacy tools section, you’ll find the Crawl Stats report, showing Googlebot activity on your site within the last 90 days.

From the report you can see that, on average, Google crawls 48 pages a day on this site. Assuming that this rate remains consistent, you can calculate the average crawl budget for the site with this formula:

Average pages per day * 30 days = Crawl Budget

In this case, it looks like this:

48 pages per day * 30 days = 1440 pages per month.

Naturally, this is a crude estimate but it can give some insight into your available crawl budget.
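The same estimate can be computed from a series of daily figures instead of a single average. This is a minimal sketch of the formula above; the function name `estimate_monthly_crawl_budget` and the sample daily counts are hypothetical.

```python
def estimate_monthly_crawl_budget(daily_pages: list[int], days: int = 30) -> float:
    """Average pages crawled per day multiplied by the number of days."""
    if not daily_pages:
        raise ValueError("need at least one daily figure")
    average_per_day = sum(daily_pages) / len(daily_pages)
    return average_per_day * days

# The example from the report: 48 pages per day on average.
print(estimate_monthly_crawl_budget([48]))

# Hypothetical daily counts that also average 48.
print(estimate_monthly_crawl_budget([40, 56, 48]))
```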

Note: Optimizing the crawl budget using the tips above should increase the number.

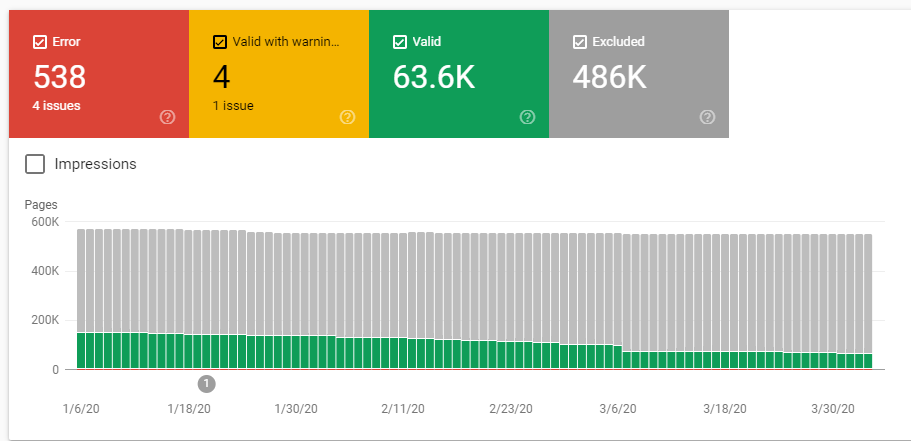

The Coverage Report in GSC will also show how many pages Google has indexed on the site and excluded from indexation. You can compare that number with the actual volume of content assets to identify if there are any pages the Googlebot has missed.
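If you can export both lists, the comparison comes down to a set difference. The sketch below assumes two plain lists of URLs: one with every page you expect to be indexed (for example, from your sitemap) and one with the pages reported as indexed. The function name `find_unindexed` and the sample URLs are hypothetical.

```python
def find_unindexed(expected_urls: list[str], indexed_urls: list[str]) -> list[str]:
    """Return the expected URLs that are missing from the indexed list."""
    return sorted(set(expected_urls) - set(indexed_urls))

expected = [
    "https://example.com/",
    "https://example.com/pricing",
    "https://example.com/blog/crawl-budget",
]
indexed = [
    "https://example.com/",
    "https://example.com/pricing",
]

missing = find_unindexed(expected, indexed)
print(missing)
```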

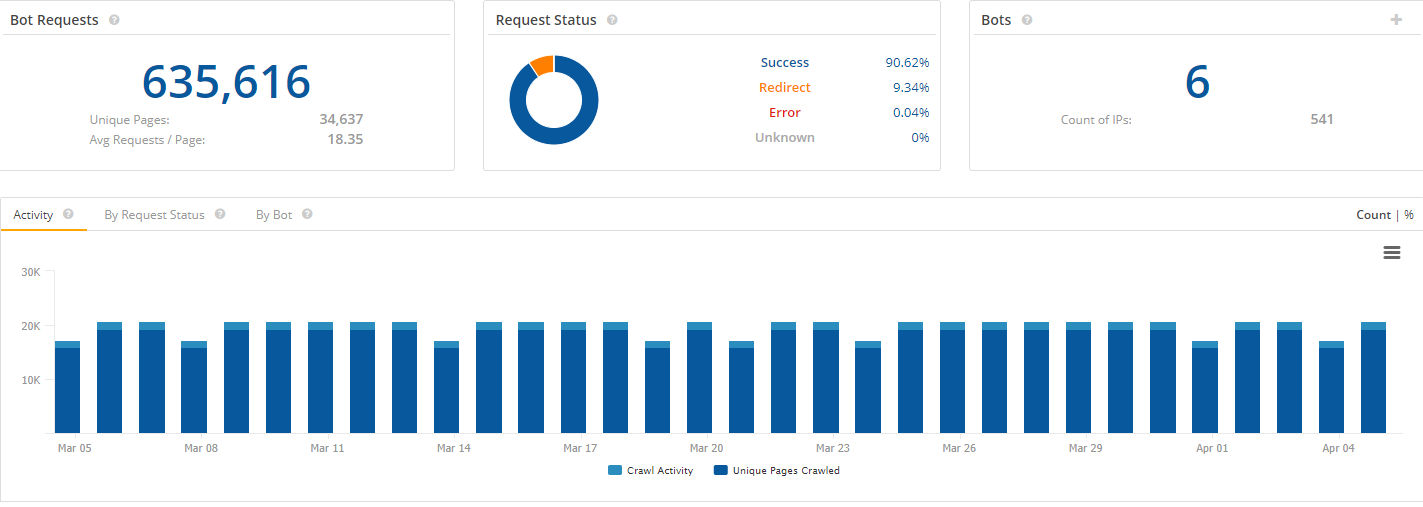

Without a doubt, the server log file is one of the biggest sources of truth about a site’s crawl budget.

This is because the server log file will tell you exactly when search engine bots are visiting your site. The file will also reveal what pages they visit the most often, and the size of those crawled files.

11.222.333.44 - - [11/Mar/2020:11:01:28 -0600] "GET https://www.seoclarity.net/blog/keyword-research HTTP/1.1" 200 182 "-" "Mozilla/5.0 Chrome/60.0.3112.113"

On Mar 11, 2020 someone using Google Chrome tried to load https://www.seoclarity.net/blog/keyword-research. The “200” means the server found the file, weighing in at 182 bytes.

Now, it's true that server log-file analysis is not a simple task. You have to go through thousands of rows of server requests to identify the right bot and analyze its activity.
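To give a sense of what that analysis involves, here is a minimal sketch that parses log lines in the format shown above and counts how often a bot requested each URL. It assumes the common "combined" log layout; the sample lines, IP addresses, and the function name `count_bot_hits` are hypothetical. Note that it only looks at the user-agent string, which can be spoofed, so a production check would also verify the requesting IP (Google documents a reverse DNS lookup for this).

```python
import re
from collections import Counter

# One regex for the combined log format: IP, timestamp, request, status, size, referrer, agent.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

def count_bot_hits(lines, bot_token="Googlebot"):
    """Count requests per URL for log lines whose user agent contains bot_token."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match and bot_token.lower() in match["agent"].lower():
            hits[match["url"]] += 1
    return hits

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LINES = [
    f'192.0.2.10 - - [11/Mar/2020:11:01:28 -0600] "GET /blog/keyword-research HTTP/1.1" 200 182 "-" "{GOOGLEBOT_UA}"',
    '192.0.2.11 - - [11/Mar/2020:11:02:03 -0600] "GET /blog/keyword-research HTTP/1.1" 200 182 "-" "Mozilla/5.0 Chrome/60.0.3112.113"',
    f'192.0.2.10 - - [11/Mar/2020:11:05:40 -0600] "GET /pricing HTTP/1.1" 200 950 "-" "{GOOGLEBOT_UA}"',
]

bot_hits = count_bot_hits(SAMPLE_LINES)
print(bot_hits.most_common())
```

Sorting the result by count shows which URLs receive most of the bot's attention, and comparing it against your list of priority pages shows which ones are being missed.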

At seoClarity, you have access to Bot Clarity, our site audit tool that, among other functionalities, provides a detailed log-file analysis.

With Bot Clarity, you can:

No, disallowed URLs do not affect crawl budget.

Unfortunately, no. But it can reduce the number of crawlable URLs and affect crawl budget positively.

It actually depends. Any URL the Googlebot crawls will affect crawl budget.

The nofollow directive on a link does not stop the crawler from accessing the page if another page on your site, or any page on the web, links to it without labeling the link as nofollow.

No, the "crawl-delay" directive in the robots.txt is not processed by Googlebot.

Yes, they do. Any URL that the crawler accesses counts against the crawl budget. As a result, any alternate versions of the content, AMP, hreflang tag defined content, or content triggered by JS or CSS will affect the crawl budget.

Google's ability to access and crawl the site’s content impacts its ability to rank it for relevant phrases.

So, although crawling may not be a ranking factor per se, it is the first step to getting the search engine to discover the content, understand it, index, and rank.

Yes, and this has been confirmed by Google.

Probably not (or not much). You have to pick a canonical and have to crawl the dups to see that they're dups anyway.

You can adjust your crawl rate in the Google Search Console by using the Crawl Rate Settings option.