A few years ago, I visited a client to help them solve a nagging technical SEO issue.

But as we began working through the problem, I realized they had an even bigger challenge.

During the session, I noticed everyone going back to their Excel data for answers. By my estimate at the time, we wasted at least a third of the session just trying to find information.

That was a while ago.

But to my horror, I recently experienced an identical situation. This time, with another company.

What’s so shocking about it? Those SEOs still manage the list of URLs and corresponding issues manually. Today. In the days when software and artificial intelligence can take the burden off their backs.

But I think I understand why. It’s the inability to choose the right website crawler for their needs. With so many solutions on the market, how do you know which one can deliver the insights you need?

So, in this post, I decided to outline the most critical features of an enterprise-level web crawler.

Let’s start at the top.

#1. Crawl Trend Data

A single website crawl can reveal incredible insights to help improve your search visibility.

Of course, you get even better results if you run site audits regularly.

But you notice the full benefit of having a crawler tool when you begin comparing crawls. Only by doing so can you track how your site is performing over time.

And so, a web crawler should offer the ability to do three things:

- Schedule regular reports.

- Compare crawl data.

- Create a crawl schedule (e.g., weekly or monthly).

(Crawl error trend report in seoClarity)

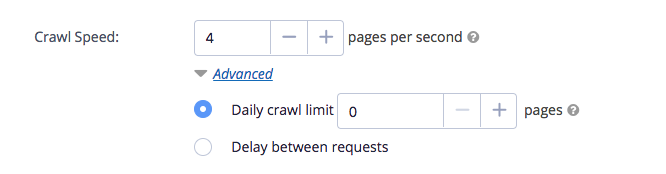

#2. Ability to Optimize Crawl Speed and Limit

The time it takes for a crawler to audit your site will depend on the crawl speed. But at first sight, this might seem like something you have no control over.

Not true.

Advanced web crawlers allow you to set up the crawl speed and influence the number of pages they’ll retrieve per second.

The option becomes helpful when a crawl runs so fast that it might overload the server.

Similarly, to extract more precise insights, you should be able to specify the crawl limit too.

This feature is particularly useful for enterprise sites. It allows you to restrict a crawl to, say, a thousand pages per day. And in doing so, prevent it from using up available server resources.

Another reason to restrict the crawl is to prevent a crawler from accessing pages it doesn’t actually have to crawl. Restricting its access ensures you are not wasting time crawling URLs that are not important to you.

Finally, our crawler allows you to pause the crawl if you notice it is affecting your site negatively.

Here’s what these controls look like in seoClarity:

Note that the crawler lets me specify the speed and define the crawl limit or a delay between audits.

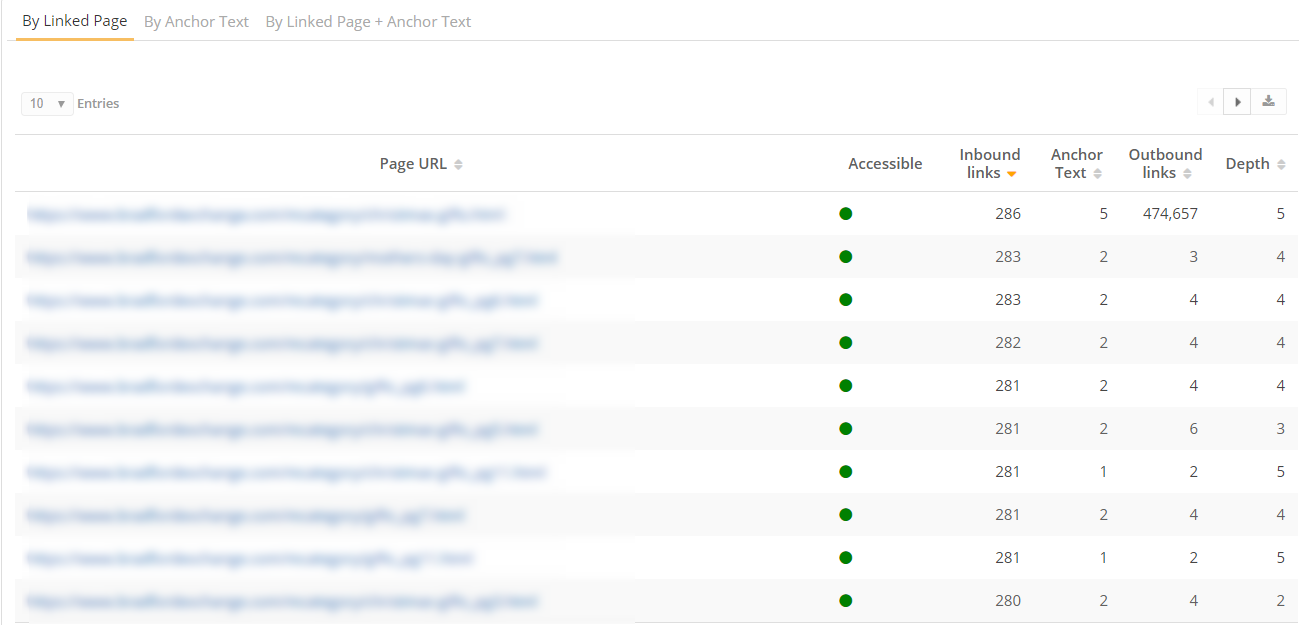
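To make the idea of crawl speed and crawl limit more concrete, here is a minimal Python sketch. It is not how seoClarity implements these controls. The `CrawlThrottle` class, its `pages_per_second`, `max_pages`, and `paused` settings, and the `crawl` helper are all hypothetical names chosen for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Iterable, List


@dataclass
class CrawlThrottle:
    """Hypothetical helper: caps the request rate and the total pages per crawl."""
    pages_per_second: float = 2.0          # crawl speed
    max_pages: int = 1000                  # crawl limit
    paused: bool = False                   # set to True to pause the crawl
    clock: Callable[[], float] = time.monotonic
    sleep: Callable[[float], None] = time.sleep
    _last_request: float = field(default=float("-inf"), init=False)
    _fetched: int = field(default=0, init=False)

    def wait_for_slot(self) -> bool:
        """Wait until the next request is allowed. Return False if the crawl must stop."""
        if self.paused or self._fetched >= self.max_pages:
            return False
        min_gap = 1.0 / self.pages_per_second
        elapsed = self.clock() - self._last_request
        if elapsed < min_gap:
            self.sleep(min_gap - elapsed)
        self._last_request = self.clock()
        self._fetched += 1
        return True


def crawl(urls: Iterable[str], fetch: Callable[[str], None],
          throttle: CrawlThrottle) -> List[str]:
    """Fetch URLs in order until the throttle says stop. Return the URLs fetched."""
    done = []
    for url in urls:
        if not throttle.wait_for_slot():
            break
        fetch(url)
        done.append(url)
    return done
```

In this sketch, lowering `pages_per_second` spaces requests further apart, while `max_pages` stops the crawl once the budget is spent, no matter how many URLs remain in the queue.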

#3. Assessing Interlinking Issues

The benefits of having a strong internal link structure are irrefutable.

Search engine bots use internal links to discover all your URLs. What’s more, a clear site architecture helps users find the information they need faster too.

But internal linking can easily go wrong. As the site matures and grows, many of the internal links break. For one, you may have changed some URLs. Or even removed those pages entirely. The result is a confusing architecture with many dead ends.

A solid crawler will allow you to monitor and audit internal links too. It’ll report on broken links or unnecessary redirects. It’ll help identify pages with too many links. And suggest the ones to interlink more as well.


(Interlinking reports in seoClarity)

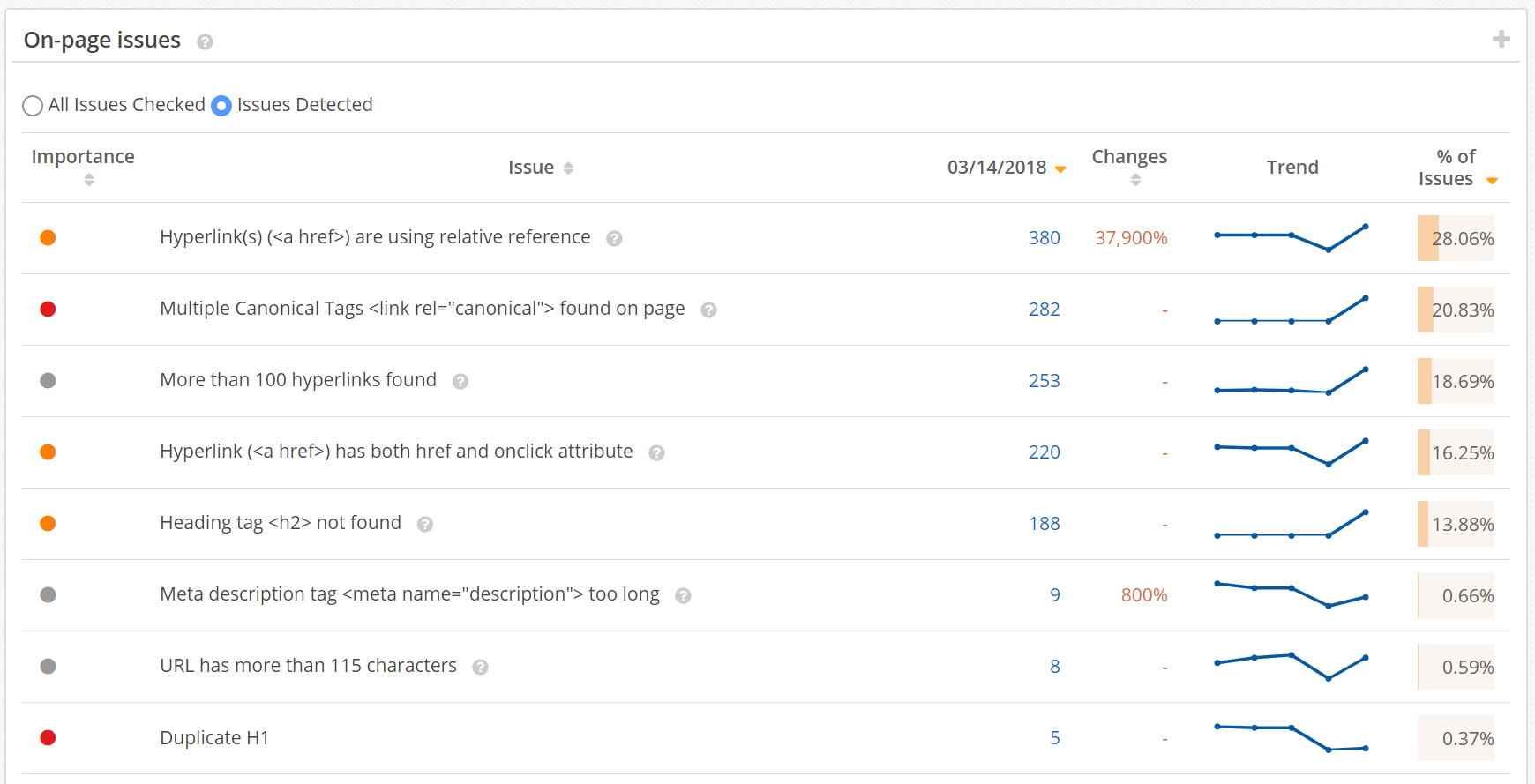
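If you want a feel for what such an interlinking audit does under the hood, the sketch below shows one possible approach in Python. It assumes you already have two pieces of crawl output: a map of each page to the internal links found on it, and a map of each URL to its HTTP status code. The function name `audit_internal_links` and the threshold of 100 links per page are illustrative assumptions, not seoClarity settings.

```python
from collections import Counter
from typing import Dict, List


def audit_internal_links(
    links: Dict[str, List[str]],
    status: Dict[str, int],
    max_links_per_page: int = 100,  # assumed threshold, tune for your site
) -> Dict[str, list]:
    """Flag broken links, redirected links, link-heavy pages, and pages nobody links to."""
    broken, redirected = [], []
    inlinks = Counter()
    for source, targets in links.items():
        for target in targets:
            inlinks[target] += 1
            code = status.get(target, 0)  # 0 means the target was never crawled
            if code >= 400:
                broken.append((source, target, code))
            elif 300 <= code < 400:
                redirected.append((source, target, code))
    too_many = sorted(p for p, t in links.items() if len(t) > max_links_per_page)
    # Healthy pages (status 200) with no internal links pointing at them.
    needs_links = sorted(p for p, c in status.items() if c == 200 and inlinks[p] == 0)
    return {
        "broken": broken,
        "redirected": redirected,
        "too_many_links": too_many,
        "needs_links": needs_links,
    }
```

The `needs_links` list is the rough equivalent of a "pages to interlink more" suggestion: URLs that respond fine but that no other crawled page points to.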

#4. Reporting on SEO Issues that Affect Search Performance

To achieve its full potential in search, each page you want to rank must meet certain criteria.

It needs to have an original meta-title tag, including the target keywords. You must write an engaging meta-description too and add the keyword to the H1 heading. Similarly, you must optimize the page’s URL, add relevant content, and so on.

But let’s face it, with thousands of pages to manage, it’s easy to overlook some of those issues.

And so, you need a crawler that can identify them for you.

seoClarity Site Audit technology, for example, runs 40+ technical health checks on your pages. And then lets you know exactly what you should fix on each of them.

(Some of the issues Clarity Audit reports on.)

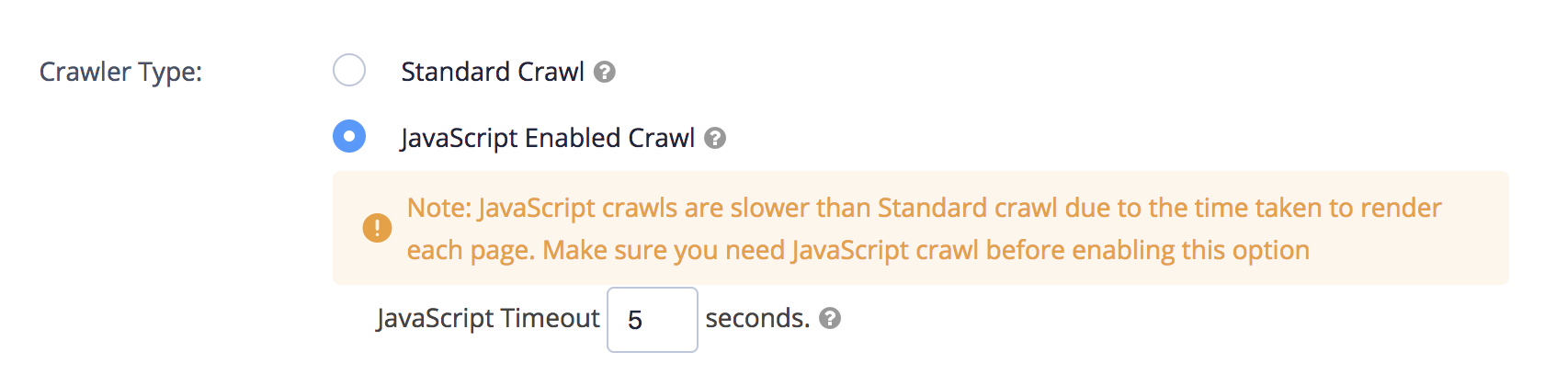
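To illustrate the kind of checks involved, here is a small Python sketch that covers only a handful of the on-page criteria mentioned above: missing or duplicate titles, missing meta descriptions, and H1 problems. It uses the standard library `html.parser` module. The `find_issues` function and the issue labels are hypothetical, and a real audit covers far more than this.

```python
from collections import defaultdict
from html.parser import HTMLParser
from typing import Dict, List


class _PageFacts(HTMLParser):
    """Collects the title text, the meta description, and the number of H1 tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.h1_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_issues(pages: Dict[str, str]) -> Dict[str, List[str]]:
    """Map each URL to the list of on-page issues found in its HTML."""
    issues = defaultdict(list)
    urls_by_title = defaultdict(list)
    for url, html in pages.items():
        facts = _PageFacts()
        facts.feed(html)
        facts.close()
        title = facts.title.strip()
        if not title:
            issues[url].append("missing title")
        else:
            urls_by_title[title.lower()].append(url)
        if not facts.description:
            issues[url].append("missing meta description")
        if facts.h1_count == 0:
            issues[url].append("missing h1")
        elif facts.h1_count > 1:
            issues[url].append("multiple h1")
    # A title shared by two or more URLs is not original.
    for urls in urls_by_title.values():
        if len(urls) > 1:
            for url in urls:
                issues[url].append("duplicate title")
    return dict(issues)
```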

#5. JavaScript Crawling

With so many scripts powering the web today, it’s also crucial that you analyze pages exactly as a search engine would.

For example, more and more websites rely on JavaScript to display content. As a result, search engines have improved their page rendering.

For you, this means that the crawler you use will have to crawl JS files too. After all, you must be able to tell whether Google can crawl and index pages rendered with JS correctly.

With our crawler, you can assess what JS-related issues Google may encounter with its own JS crawler.

(JavaScript crawl setup in seoClarity)

In fact, in this feature, you can:

- Find out if content and links modified by JavaScript are rendered correctly for search engines to index.

- Find out how crawlable your pages are with and without JavaScript rendering enabled.

- Detect JavaScript URL changes including redirects, meta redirects, JS location.

- Cache resources found in a crawl so that your server is not overloaded.
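One simple way to picture the first two checks is to compare the links in the raw HTML with the links in the rendered HTML. The Python sketch below assumes you already have both versions of a page as strings (for example, the server response and the output of a headless browser, which is outside the scope of this example). The helper names `extract_links` and `js_only_links` are made up for illustration.

```python
from html.parser import HTMLParser
from typing import Set


class _LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)


def extract_links(html: str) -> Set[str]:
    collector = _LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


def js_only_links(raw_html: str, rendered_html: str) -> Set[str]:
    """Links that exist only after JavaScript rendering."""
    return extract_links(rendered_html) - extract_links(raw_html)
```

Any URL returned by `js_only_links` is one a crawler can discover only if it renders JavaScript, which makes it worth a closer look.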

#6. Hreflang Audit

The hreflang tag lets you tell a search engine which version of your site is most relevant to a user based on their language and location. With this information, the search engine can serve that version to users searching in that language.

Your crawler must be able to capture hreflang annotations on each page and then verify that they’re correct and contain no errors.

In one of our tests, we discovered how sites without the hreflang tag implemented had lower rankings and poorer search visibility.

We also noticed Google listing product pages in the wrong currency for US customers. This, most likely, led to a poor user experience and a higher-than-usual bounce rate on those pages.

Some of the hreflang-associated issues include:

- Incorrect ISO code in the hreflang annotation

- Absence of a return tag or self-reference

- Hreflang points to relative URLs

- Combining hreflang sitemaps and page tagging methods

- Missing or incorrect x-default

- Addition of hreflang tags to no-index pages

- Using the country code on its own without the required language code
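Several of the issues in this list can be checked mechanically once the annotations are collected. The Python sketch below is one possible take, assuming the crawl produced a map of each page URL to its hreflang annotations (value to target URL). The `audit_hreflang` function is hypothetical, and the regular expression only checks the shape of a value (language, optional script, optional region). It does not look codes up in the ISO tables, so it cannot catch a country code used in place of a language code.

```python
import re
from typing import Dict, List
from urllib.parse import urlparse

# Shape check only: "en", "en-US", "zh-Hans-CN", or "x-default".
HREFLANG_SHAPE = re.compile(
    r"^(x-default|[a-z]{2}(-[a-z]{4})?(-[a-z]{2})?)$", re.IGNORECASE
)


def audit_hreflang(annotations: Dict[str, Dict[str, str]]) -> List[str]:
    """Return human-readable problems found in the hreflang annotations."""
    problems = []
    for page, alternates in annotations.items():
        for code, target in alternates.items():
            if not HREFLANG_SHAPE.match(code):
                problems.append(f"{page}: malformed hreflang value '{code}'")
            if not urlparse(target).scheme:
                problems.append(f"{page}: relative URL '{target}' for '{code}'")
            elif target != page and page not in annotations.get(target, {}).values():
                # The alternate page does not link back to this page.
                problems.append(f"{page}: no return tag from {target}")
        if page not in alternates.values():
            problems.append(f"{page}: missing self-reference")
        if not any(code.lower() == "x-default" for code in alternates):
            problems.append(f"{page}: missing x-default")
    return problems
```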

#7. Pagination Audit

SEO pagination helps improve the user experience. It makes browsing long product categories easier. It also simplifies reading long-form articles. And these are just some of its benefits.

But paginating pages can sometimes go wrong. For example, you could have a rel=canonical tag pointing to the first page in the series from any subsequent pages.

Using rel=canonical in this instance would result in the content on pages 2 and beyond not being indexed at all.
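Here is a rough Python sketch of how a crawler might flag this particular mistake. It assumes pagination is expressed with a `?page=N` query parameter, which will not match every site, and that the crawl produced a map of each URL to its rel=canonical target. The names `page_number` and `canonical_conflicts` are hypothetical.

```python
import re
from typing import Dict, List, Optional

# Assumed pagination pattern: ?page=N or &page=N in the URL.
PAGE_PARAM = re.compile(r"[?&]page=(\d+)")


def page_number(url: str) -> Optional[int]:
    """Return the page number in the URL, or None if there is none."""
    match = PAGE_PARAM.search(url)
    return int(match.group(1)) if match else None


def canonical_conflicts(canonicals: Dict[str, str]) -> List[str]:
    """Return paginated URLs (page 2 and beyond) whose canonical points to page 1."""
    flagged = []
    for url, canonical in canonicals.items():
        number = page_number(url)
        if number is not None and number > 1 and page_number(canonical) in (None, 1):
            flagged.append(url)
    return flagged
```

A canonical without any page parameter is treated as the first page of the series here, which is a simplification.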

seoClarity lets you conduct a dedicated pagination crawl and validate the URLs discovered through the rel=prev/next directives.

(Pagination crawl setup in seoClarity)

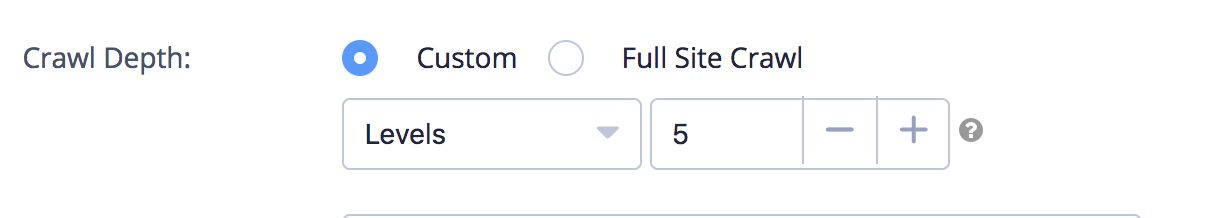

#8. Crawl Depth

You don’t always need to crawl the entire site.

You may want to analyze only the pages on a specific level. Or assess only pages associated with a specific category.

But for that, you need to be able to control the crawl depth.

With this functionality, you specify how deep into the site a crawler should go.

For example, selecting 1 will analyze only the links found on the starting URL. Setting the depth one level higher tells the crawler to also audit the links found on those Level 1 pages, and so on.

Similarly, you could also set the crawler to access only the URLs in the sitemap or even specify what to crawl manually.

(Crawl depth setup in seoClarity)

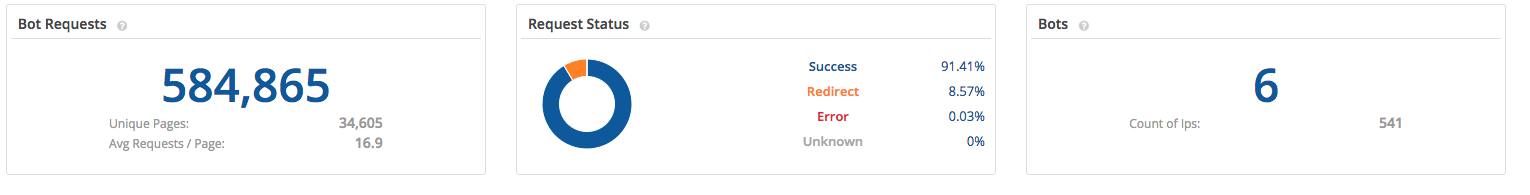
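Crawl depth is easiest to understand as a breadth-first walk that stops after a set number of hops. The Python sketch below shows that idea. The `crawl_to_depth` function and its `get_links` callback (anything that returns the links found on a URL) are illustrative assumptions, not a description of how seoClarity works internally.

```python
from collections import deque
from typing import Callable, Dict, Iterable


def crawl_to_depth(
    start: str,
    get_links: Callable[[str], Iterable[str]],
    max_depth: int,
) -> Dict[str, int]:
    """Breadth-first crawl. Returns each discovered URL with its depth from the start URL."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        if depths[url] >= max_depth:
            continue  # do not follow links found on pages at the depth limit
        for link in get_links(url):
            if link not in depths:
                depths[link] = depths[url] + 1
                queue.append(link)
    return depths
```

With `max_depth=1`, only the starting URL and the links found on it are visited. With `max_depth=2`, the links found on those Level 1 pages are included as well.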

#9. Bot Activity

You can base so many decisions on the bot activity alone, and rightly so. Server log analysis can reveal many potential issues with the site, and discover the correlation between bots, rankings, and the data.

But your actions can have a detrimental effect if you base them on spoofed bot activity.

That’s why, as part of its site audits, your crawler should also analyze server logs, if only to weed out spoof bots from the report.

Bot Clarity, for example, lets you identify errors and issues with search engine crawls. Among other things, the Bot Clarity reports let you identify the most in-demand pages, couple that with the crawl rate, and as a result, optimize your crawl equity.
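A common way to weed out spoofed Googlebot traffic is a reverse DNS lookup on the requesting IP, followed by a forward lookup on the returned hostname to confirm it maps back to the same IP. The Python sketch below follows that idea using the standard `socket` module. It is not how Bot Clarity works. The `is_verified_googlebot` function is a hypothetical name, the suffix list is an assumption you should check against the search engine's current documentation, and the forward lookup used here handles IPv4 only.

```python
import socket
from typing import Callable, List

# Assumed hostname suffixes for genuine Googlebot hosts.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def _reverse(ip: str) -> str:
    """Reverse DNS: IP address to hostname."""
    return socket.gethostbyaddr(ip)[0]


def _forward(host: str) -> List[str]:
    """Forward DNS: hostname to a list of IPv4 addresses."""
    return socket.gethostbyname_ex(host)[2]


def is_verified_googlebot(
    ip: str,
    reverse: Callable[[str], str] = _reverse,
    forward: Callable[[str], List[str]] = _forward,
) -> bool:
    """True only if the IP resolves to a Google hostname that resolves back to the IP."""
    try:
        host = reverse(ip)
        if not host.endswith(GOOGLE_SUFFIXES):
            return False
        return ip in forward(host)
    except OSError:  # covers socket.herror and socket.gaierror
        return False
```

The resolver functions are passed in as parameters so that log lines can be checked against cached lookups instead of hitting DNS for every request.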

#10. Clear Dashboards to Let You Act on the Data Right Away

A solid web crawler is capable of delivering an incredible amount of information. But there’s a catch.

To act on it, you need to access that information fast. Otherwise, you might be back to square one, having to sift through mountains of data to extract the insights you seek.

That’s where clear, customizable dashboards come in handy.

Clarity Audit, for example, displays all issues in a single dashboard. And by doing so, allows you to start acting on the insights right away, instead of having to waste time accessing and analyzing the data.

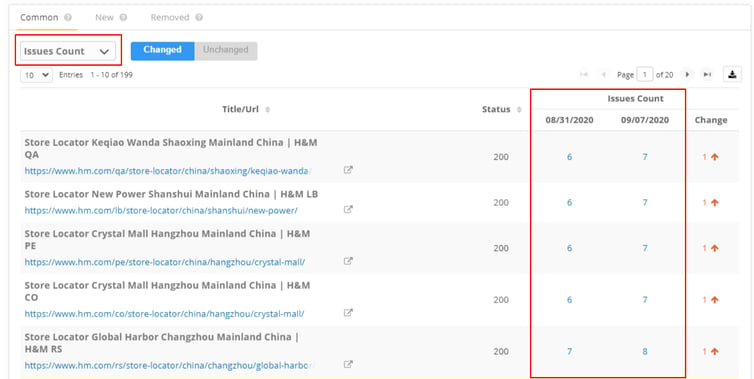

#11. Crawl Comparisons

If you manage an enterprise-level site, you most likely run a lot of crawls. To make it simple to monitor the crawl results, your tool should allow you to compare your crawl reports side-by-side.

Doing this allows you to confirm that your issue count is decreasing after you apply the correct optimizations. It can also confirm that editorial elements like your title tag and meta description went live with no errors.

Altogether, a crawl comparison report lets you confirm that the work you do is in fact making a difference and is landing on the website correctly.

(The count of issues detected for two crawls side-by-side.)
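At its core, a side-by-side comparison is a diff of issue counts between two crawls. The Python sketch below shows that idea, assuming each crawl is summarized as a map of issue name to count. The `compare_crawls` function and the issue names in the usage example are made up for illustration.

```python
from typing import Dict


def compare_crawls(
    previous: Dict[str, int], current: Dict[str, int]
) -> Dict[str, Dict[str, int]]:
    """Return before/after counts and the change for every issue seen in either crawl."""
    report = {}
    for issue in sorted(set(previous) | set(current)):
        before = previous.get(issue, 0)
        after = current.get(issue, 0)
        report[issue] = {"before": before, "after": after, "change": after - before}
    return report


# Illustrative numbers only.
last_month = {"broken links": 120, "missing titles": 45}
this_month = {"broken links": 80, "missing titles": 45, "redirect chains": 12}
for issue, row in compare_crawls(last_month, this_month).items():
    print(f"{issue}: {row['before']} -> {row['after']} ({row['change']:+d})")
```

A negative change means the fix is working. A brand-new issue, like the redirect chains in this made-up data, shows up with a "before" count of zero.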

Conclusion

For an enterprise site, being able to conduct advanced crawls is beyond a necessity. Only by having a complete view of the site from a technical standpoint can you identify issues potentially lost among the thousands of your pages.

And for that, you need an advanced web crawler, capable of analyzing issues on enterprise-level sites.

Editor's Note: This post was originally posted in October 2018 and has been updated for accuracy and comprehensiveness.
