No matter the size of your site, you’ll never stop making changes to it. It will never be “done.” You can (and should) monitor your site changes and overall site health by consistently crawling your site.

The challenge is that as your site grows, comparing these changes and tracking progress becomes more difficult.

For enterprise sites, comparing two crawls in Excel to see what has changed is simply too tedious.

Plus, with multiple teams making site changes, you need a centralized location so that everyone works from the same source of data.

If you’re part of a smaller team, you most likely don’t have the time or resources to do this process manually.

Comparing crawl data lets you analyze crawl trends over time in a side-by-side view, so you can scale your approach to technical SEO.


Surveying Site Health with Crawl Comparisons

For the following demonstrations, I’ll be using data collected with seoClarity’s built-in crawler.

#1. Monitor Page Depth

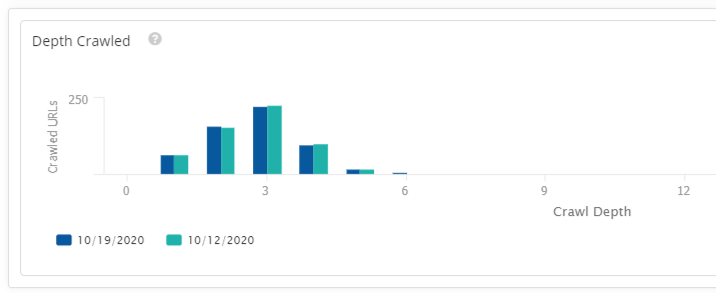

Use the crawl comparison feature to compare the crawl depth between two crawls.

This shows how many clicks from the starting page the crawler needed to reach each of your pages. Since our crawler discovers pages by following links, much like Google’s does, you want your most important pages to be easily accessible.

If you see a drastic change (e.g., pages being pushed deeper into the site), investigate what caused the shift in depth. Conversely, if you changed your site taxonomy to make certain pages more accessible, this view lets you confirm that the change worked.
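As a rough illustration of what "depth" means, here is a minimal sketch, assuming you have each crawl's internal links as a dict mapping a URL to the URLs it links to. Depth is computed as the breadth-first-search distance (minimum clicks) from the homepage, and the two crawls are then compared. The functions `crawl_depths` and `depth_changes` and the sample URLs are hypothetical; this is not seoClarity's implementation.

```python
from collections import deque

def crawl_depths(links, start):
    """Breadth-first search: depth = minimum number of clicks from `start`."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def depth_changes(before, after, min_shift=1):
    """Pages found in both crawls whose depth moved by at least `min_shift`."""
    changes = {}
    for url in sorted(before.keys() & after.keys()):
        if abs(after[url] - before[url]) >= min_shift:
            changes[url] = (before[url], after[url])
    return changes

# Hypothetical link graphs for two crawls of the same site.
old_links = {"/": ["/shoes", "/sale"], "/shoes": ["/shoes/red"], "/sale": []}
new_links = {"/": ["/sale"], "/sale": ["/shoes"], "/shoes": ["/shoes/red"]}

old_depths = crawl_depths(old_links, "/")
new_depths = crawl_depths(new_links, "/")
print(depth_changes(old_depths, new_depths))
# {'/shoes': (1, 2), '/shoes/red': (2, 3)}
```

Raising `min_shift` filters out one-click wobbles so that only drastic moves surface.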

#2. Check for New and Removed Pages Between Crawls

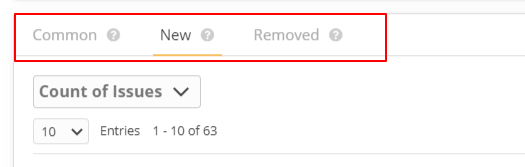

Keep track of new pages (i.e., pages found in the recent crawl but not in the previous one) and removed pages (i.e., pages found in the previous crawl but not in the recent one). You can also see all the SEO issues associated with these pages.

Knowing the count of new and removed pages (and their respective count of issues) shows you if there are any glaring issues with your site.
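Under the hood, new and removed pages are just set differences between the two crawls' URL lists. A minimal sketch, assuming each crawl is exported as a dict mapping URL to its issue count (the export format, `new_and_removed`, and the sample data are hypothetical):

```python
def new_and_removed(previous, recent):
    """Each argument maps URL -> number of SEO issues found in that crawl."""
    new_pages = {url: recent[url] for url in recent.keys() - previous.keys()}
    removed_pages = {url: previous[url] for url in previous.keys() - recent.keys()}
    return new_pages, removed_pages

previous_crawl = {"/": 0, "/about": 1, "/old-promo": 3}
recent_crawl = {"/": 0, "/about": 1, "/new-guide": 2}

new_pages, removed_pages = new_and_removed(previous_crawl, recent_crawl)
print("New:", new_pages, "issues:", sum(new_pages.values()))
print("Removed:", removed_pages, "issues:", sum(removed_pages.values()))
```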

New pages may not be indexed yet, but you still care about them. (There’s a reason you published them on your site!) You want to make sure no technical SEO issues hold them back from performing.

Removed pages may have been intentionally deprecated, or they may have become orphaned. An orphan page is a page on your site that no other page links to.

Orphan pages are effectively invisible to crawlers that discover pages by following links, because those crawlers rely on the internal linking structure of a website to navigate and gather information.
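Following the definition above, a page is orphaned when it has no inbound internal links. A minimal sketch, assuming you have the crawl's link graph plus a separate list of known URLs (for example from your XML sitemap or an earlier crawl); `find_orphans` and the sample data are hypothetical. A stricter check would test reachability from the homepage instead of inbound-link counts.

```python
def find_orphans(links, known_pages, homepage="/"):
    """Pages in `known_pages` that no other page links to (homepage excluded)."""
    linked_to = set()
    for source, targets in links.items():
        for target in targets:
            if target != source:  # a page linking to itself doesn't count
                linked_to.add(target)
    return sorted(p for p in known_pages if p not in linked_to and p != homepage)

links = {"/": ["/blog", "/contact"], "/blog": ["/blog/post-1"], "/old-landing": ["/"]}
known_pages = ["/", "/blog", "/blog/post-1", "/contact", "/old-landing"]
print(find_orphans(links, known_pages))  # ['/old-landing']
```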

#3. View Editorial Content Changes

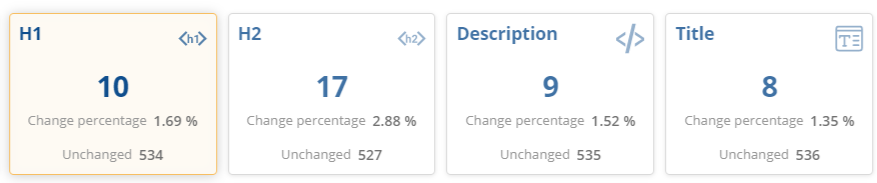

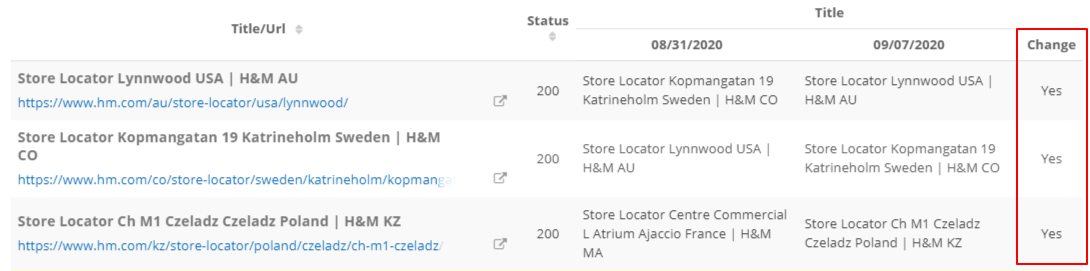

See changes in on-page content factors like H1, H2, meta description, title, and word count.

Confirm that your editorial changes have taken effect and display correctly on your site. You can also catch editorial mistakes at scale and assign fixes to designated team members. On enterprise sites, many people may have access to the CMS, so mistakes are common.
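A field-by-field diff is all this comparison needs. Here is a minimal sketch, assuming each crawl is a dict mapping URL to a record of on-page fields. The field names (`title`, `h1`, `h2`, `meta_description`, `word_count`), `content_changes`, and the sample page are hypothetical.

```python
FIELDS = ("title", "h1", "h2", "meta_description", "word_count")

def content_changes(before, after, fields=FIELDS):
    """Return {url: {field: (old, new)}} for URLs present in both crawls."""
    report = {}
    for url in sorted(before.keys() & after.keys()):
        diffs = {
            field: (before[url].get(field), after[url].get(field))
            for field in fields
            if before[url].get(field) != after[url].get(field)
        }
        if diffs:  # skip pages whose tracked fields are identical
            report[url] = diffs
    return report

before = {"/red-shoes": {"title": "Red Shoes", "h1": "Red Shoes", "h2": "Sizes",
                         "meta_description": "Shop red shoes.", "word_count": 420}}
after = {"/red-shoes": {"title": "Red Shoes | Sale", "h1": "Red Shoes", "h2": "Sizes",
                        "meta_description": "", "word_count": 415}}
print(content_changes(before, after))
```

An emptied meta description, as in the sample, is exactly the kind of CMS slip this surfaces.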

#4. Track Changes in Number of Redirects

Use the crawl comparison feature to track changes in the number of redirects.

An increase in the number of redirects for a site may be a cause for concern if it is unexpected.

There are situations where redirects are necessary, such as handling URLs with and without a trailing slash, or a site migration where you want to make sure the old pages redirect to the new ones.

However, tracking unexpected increases or decreases in the number of redirects helps you catch and audit mistakes in your redirect setup.

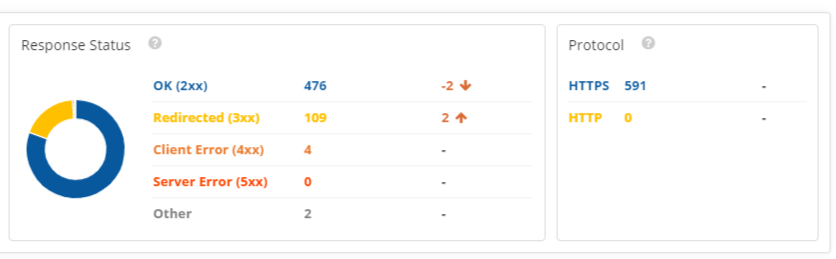
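To make the comparison concrete, here is a minimal sketch, assuming each crawl is exported as a dict mapping URL to HTTP status code. It counts 3xx responses and lists which URLs started or stopped redirecting; `redirecting`, `redirect_report`, and the sample data are hypothetical.

```python
def redirecting(crawl):
    """URLs whose status code is a 3xx redirect."""
    return {url for url, status in crawl.items() if 300 <= status < 400}

def redirect_report(previous, recent):
    before, after = redirecting(previous), redirecting(recent)
    return {
        "count_before": len(before),
        "count_after": len(after),
        "new_redirects": sorted(after - before),
        "no_longer_redirecting": sorted(before - after),
    }

previous = {"/a": 200, "/b": 301, "/c/": 200}
recent = {"/a": 200, "/b": 301, "/c/": 301, "/c": 200}  # trailing-slash redirect added
print(redirect_report(previous, recent))
```

Listing the individual URLs, not just the counts, is what lets you audit whether each new redirect was intended.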

#5. Review Changes in Protocol or Response Status

Monitor accessibility changes to your site.

It’s important to track changes in 2xx pages and increases in error pages (4xx or 5xx) so that both search engines and users can keep accessing your site. Protocol changes (for example, pages switching from HTTPS to HTTP) should also be monitored, since an unexpected switch affects security and usability.

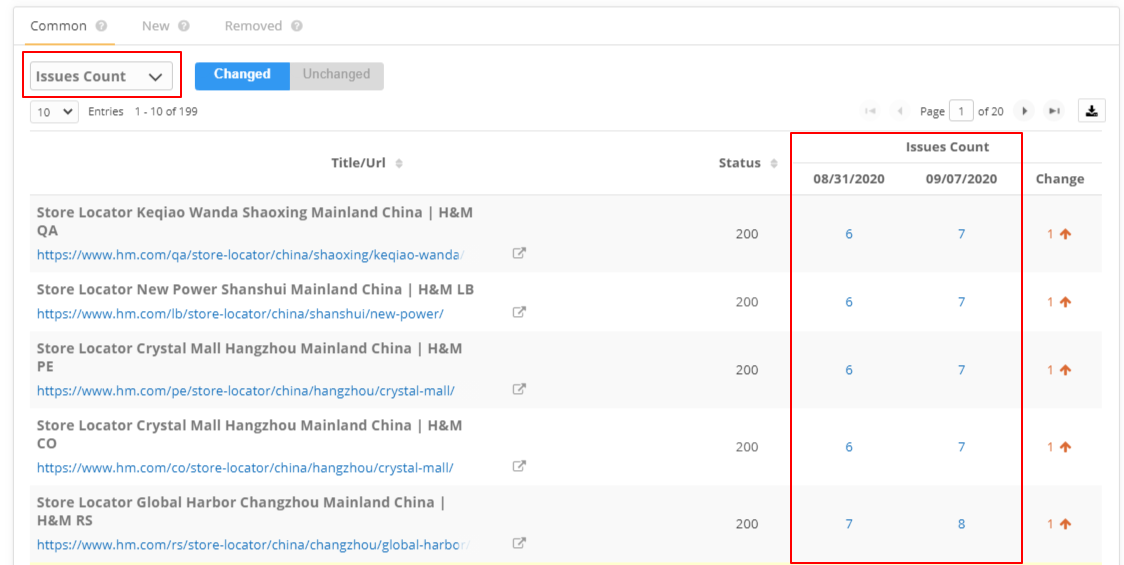

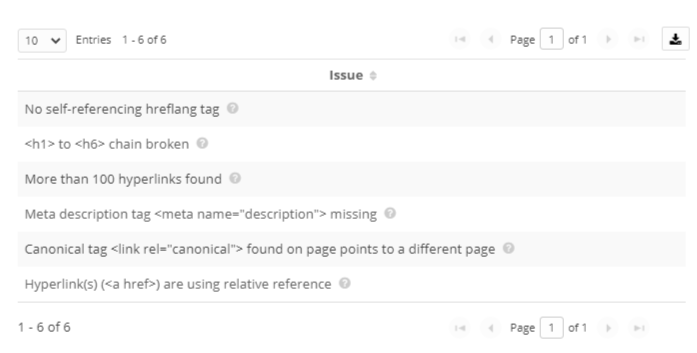
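Both checks can be sketched in a few lines, assuming each crawl is a dict mapping absolute URL to status code. Status codes are grouped by class, and protocol changes are found by matching pages on host and path and comparing their schemes. `status_classes`, `protocol_changes`, and the `example.com` data are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit

def status_classes(crawl):
    """Count pages by status class, e.g. {'2xx': 40, '4xx': 3}."""
    return Counter(f"{status // 100}xx" for status in crawl.values())

def protocol_changes(previous_urls, recent_urls):
    """(url, old_scheme, new_scheme) for pages whose protocol changed.

    Pages are matched on host + path; if a crawl contains both the http and
    https version of the same page, the last one seen wins.
    """
    def page_key(url):
        parts = urlsplit(url)
        return (parts.netloc, parts.path)

    previous_schemes = {page_key(url): urlsplit(url).scheme for url in previous_urls}
    changes = []
    for url in recent_urls:
        old_scheme = previous_schemes.get(page_key(url))
        new_scheme = urlsplit(url).scheme
        if old_scheme is not None and old_scheme != new_scheme:
            changes.append((url, old_scheme, new_scheme))
    return changes

previous = {"https://example.com/": 200, "https://example.com/shop": 200,
            "https://example.com/old": 404}
recent = {"https://example.com/": 200, "http://example.com/shop": 200,
          "https://example.com/old": 404, "https://example.com/cart": 500}

before, after = status_classes(previous), status_classes(recent)
for cls in sorted(set(before) | set(after)):
    print(cls, before[cls], "->", after[cls])  # Counter returns 0 for missing keys
print(protocol_changes(previous, recent))
```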

#6. See a Summary of the Number of Issues Detected

Locate pages that have an increase or decrease in technical issues, or see pages with no change to the count of issues between two crawl dates.

When the number of issues per URL goes down, you know your fixes are working. Conversely, you can see when a page has developed new issues that need attention. Pages whose issue count is unchanged have likely received no fixes between the two crawls, which can help you prioritize where to optimize next.

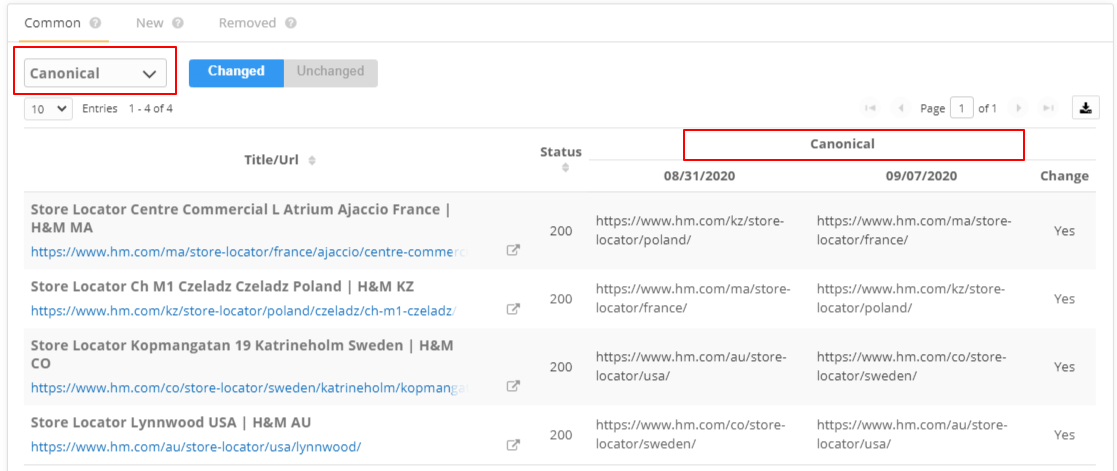
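The increased/decreased/unchanged split can be sketched as follows, assuming each crawl maps URL to its issue count and only pages present in both crawls are compared. `classify_issue_changes` and the sample data are hypothetical.

```python
def classify_issue_changes(previous, recent):
    """Bucket pages found in both crawls by how their issue count moved."""
    buckets = {"increased": [], "decreased": [], "unchanged": []}
    for url in sorted(previous.keys() & recent.keys()):
        delta = recent[url] - previous[url]
        if delta > 0:
            buckets["increased"].append(url)
        elif delta < 0:
            buckets["decreased"].append(url)
        else:
            buckets["unchanged"].append(url)
    return buckets

previous = {"/": 2, "/blog": 5, "/pricing": 1}
recent = {"/": 2, "/blog": 3, "/pricing": 4}
print(classify_issue_changes(previous, recent))
# {'increased': ['/pricing'], 'decreased': ['/blog'], 'unchanged': ['/']}
```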

#7. Confirm Canonical Tags

See the changes in your implementation of the canonical tag.

Canonicalization is important to ensure that search engines index the correct version of a page. If the wrong page is canonicalized or your canonicals are not set up correctly, the wrong URL may be indexed and your preferred page can lose visibility. For example, after a site migration, comparing crawls from before and after the migration helps you confirm that the canonicals have been set up correctly.
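For the migration scenario, here is a minimal sketch, assuming each crawl maps a site-relative path to the canonical URL found on that page, so that pages can be matched across the old and new hosts. It lists changed canonicals and flags any that still point at the pre-migration host. `canonical_changes`, `stale_canonicals`, and the `example.com` hosts are hypothetical.

```python
from urllib.parse import urlsplit

def canonical_changes(previous, recent):
    """(page, old_canonical, new_canonical) for pages whose canonical changed."""
    return [
        (page, previous[page], recent[page])
        for page in sorted(previous.keys() & recent.keys())
        if previous[page] != recent[page]
    ]

def stale_canonicals(crawl, old_host):
    """Pages whose canonical still points at the pre-migration host."""
    return sorted(
        page for page, canonical in crawl.items()
        if canonical and urlsplit(canonical).netloc == old_host
    )

pre_migration = {"/shoes": "https://old.example.com/shoes",
                 "/bags": "https://old.example.com/bags"}
post_migration = {"/shoes": "https://www.example.com/shoes",
                  "/bags": "https://old.example.com/bags"}

print(canonical_changes(pre_migration, post_migration))
print(stale_canonicals(post_migration, "old.example.com"))  # ['/bags']
```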

#8. Changes in Robots Header

The robots header gives Googlebot and other crawlers directives, such as noindex or nofollow, on whether to index a page and follow its links.

Note: The above screenshot reflects no changes to the robots header between the compared crawls.

Since the robots header controls whether a page is indexed, it is important to monitor changes to it. Comparing crawls and watching for changes in the robots header is a quick way to make sure no pages are unexpectedly deindexed from your site.
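One way to automate this check is sketched below, assuming each crawl maps URL to the raw robots header value (e.g., an `X-Robots-Tag` string, or `None` when absent). It flags pages that now block indexing but did not before, treating `none` as shorthand for `noindex, nofollow`. `newly_noindexed` and its helpers are hypothetical, and the parser deliberately ignores user-agent scoping.

```python
import re

def robots_directives(value):
    """Lower-cased directive tokens, e.g. 'noindex, nofollow' -> {'noindex', 'nofollow'}.

    Simplification: a user-agent prefix such as 'googlebot:' becomes a token
    but is not used to scope the directives that follow it.
    """
    if not value:
        return set()
    return set(re.findall(r"[a-z_-]+", value.lower()))

def blocks_indexing(value):
    # 'none' is equivalent to 'noindex, nofollow'.
    return bool({"noindex", "none"} & robots_directives(value))

def newly_noindexed(previous, recent):
    """Pages that were indexable in the previous crawl but block indexing now."""
    return sorted(
        page for page in previous.keys() & recent.keys()
        if blocks_indexing(recent[page]) and not blocks_indexing(previous[page])
    )

previous = {"/": None, "/blog": "noarchive", "/tmp": "noindex"}
recent = {"/": None, "/blog": "noindex, nofollow", "/tmp": "noindex"}
print(newly_noindexed(previous, recent))  # ['/blog']
```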

Next Steps

As you’ve seen, there are a number of scenarios where it benefits you to know what’s changed on your site over time.

We recommend checking these changes monthly or every two weeks. To automate the process, you can set up recurring crawls. This is just one example of how an SEO platform lets you automate your SEO.

Check these crawl comparisons to confirm that everything is in line with the work being done on the site.

Note: If you plan to migrate your site, a crawl comparison pre- and post-migration is a necessity!
