Googlebot is always on the move, constantly scanning the web to discover and update information. But just because it's automated doesn't mean it operates on your schedule.

If you've made critical updates—whether fixing outdated content, optimizing for SEO, or recovering from technical issues—you don’t want to wait around for Google to notice.

The good news? You don’t have to. Instead of hoping Googlebot stops by your site soon, you can take proactive steps to request a recrawl and speed up the indexing process.

The Importance of Having Google Recrawl Your Site for SEO

There are several reasons why you might want to request a Google recrawl.

Maybe you just created a new post that you want Google to pick up or made a few updates to strengthen the content and the overall topic cluster.

Or, maybe …

A colleague who doesn’t know SEO made a critical site change that could tank your rankings or traffic. Thanks to your page change monitoring system, you caught it early and reverted it.

Now you really need Googlebot to pick up your correction!

Phew. We hope you’re not in that situation, but if you are, don’t worry.

You’ve already caught the change with a solution like Content Guard. Now it’s time to bring Google back to your site.

Here’s how to do it.

Note: While you can request a recrawl, there is no guarantee that this will accelerate the process.

How to Request a Recrawl from Google

There are two ways to get Google to recrawl your site or URL.

One is Google Search Console’s URL Inspection tool, and the other is submitting a sitemap to Search Console. We'll walk you through both options below.

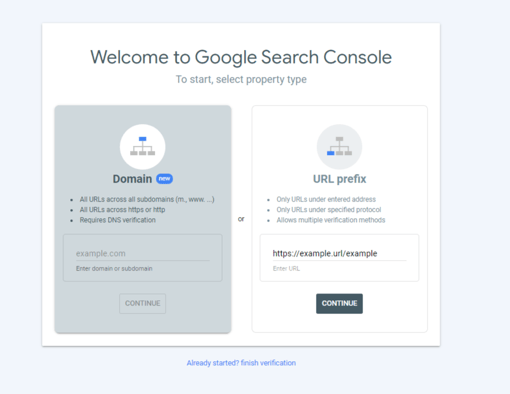

#1. URL Inspection Tool

Google Search Console’s URL Inspection tool lets you request reindexing, but there’s a catch—it only works for one page at a time.

For enterprise sites managing thousands (or millions) of pages, this method isn’t scalable. However, if you’ve identified a critical issue on a single URL—such as reverting unintended changes or updating key content—this tool can be an effective way to get Google to take notice.

Here’s Google’s quick two-step process:

1. Inspect the page URL

Enter the full URL in the inspection search bar at the top of Search Console. The URL must belong to the property (Domain or URL-prefix) you currently have selected.

2. Request reindexing

Click “Request indexing.” Search Console runs a quick check of the URL for obvious indexing issues; if none are found, the page is added to Google’s indexing queue.

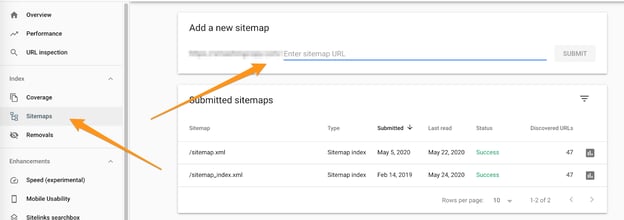

#2. Submit a Sitemap to Search Console

Approach number two lets you ask Google to recrawl pages in bulk. And we all know that scale is an enterprise SEO’s best friend!

Most content management systems, such as WordPress, can generate a sitemap for you.

If that’s not an option, you can use a site crawler to build the sitemap. The crawler collects information about each page and stores it in a database.

That data can then be exported in XML format as the sitemap.
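To illustrate that export step, here is a minimal Python sketch that turns crawled page data into a sitemap following the sitemaps.org protocol. It assumes your crawler can export each page as a URL plus a last-modified date; the `CrawledPage` record and `build_sitemap` function are hypothetical names for illustration, not part of seoClarity’s crawler or any other tool.

```python
from dataclasses import dataclass
from datetime import date
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class CrawledPage:
    """Hypothetical record for one page exported from the crawler's database."""
    url: str
    last_modified: date


def build_sitemap(pages: list[CrawledPage]) -> str:
    """Return a sitemap XML document (as a string) listing each crawled page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url_el = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & in the URL automatically
        ET.SubElement(url_el, "loc").text = page.url
        # <lastmod> uses W3C Datetime format; a plain YYYY-MM-DD date is allowed
        ET.SubElement(url_el, "lastmod").text = page.last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical crawl output
    pages = [
        CrawledPage("https://example.com/", date(2024, 5, 1)),
        CrawledPage("https://example.com/blog/recrawl-guide", date(2024, 5, 3)),
    ]
    print(build_sitemap(pages))
```

Escaping matters here because the protocol requires URLs in the file to be entity-escaped; building the XML with a library rather than string concatenation handles that for you.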

Recommended Reading: How to Create a Sitemap and Submit to Google

seoClarity clients can use our built-in SEO website crawler for this. It’s an enterprise crawler that mimics how Googlebot would crawl your site.

Use it to create a sitemap of the entire site or create individual sitemaps of specific sections.

Once you’ve created the sitemap, it’s time to submit it to Google.

How to Submit Your Sitemap to Google

Upload your sitemap’s XML file to your server’s root directory. This will result in a URL similar to https://example.com/sitemap-index.xml.
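The example URL above points to a sitemap index, a file that lists other sitemaps rather than individual pages. That matters at enterprise scale because the sitemaps.org protocol caps a single sitemap at 50,000 URLs (and 50 MB uncompressed). The Python sketch below splits a large URL list into sitemap-sized chunks and builds an index that points at one child sitemap per chunk. The child file names are hypothetical, and writing the child sitemaps themselves is not shown.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit set by the sitemaps.org protocol


def chunk_urls(urls: list[str], size: int = MAX_URLS_PER_SITEMAP) -> list[list[str]]:
    """Split a URL list into groups small enough for one sitemap file each."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls: list[str]) -> str:
    """Return a sitemap index XML document pointing at each child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for sitemap_url in sitemap_urls:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = sitemap_url
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical section with 120,000 product URLs -> 3 child sitemaps
    product_urls = [f"https://example.com/product/{n}" for n in range(120_000)]
    chunks = chunk_urls(product_urls)
    child_sitemaps = [
        f"https://example.com/sitemap-products-{i + 1}.xml" for i in range(len(chunks))
    ]
    print(build_sitemap_index(child_sitemaps))
```

With an index in place, you can submit just the index URL in Search Console, and Google discovers the child sitemaps from it.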

Then submit the sitemap’s URL in the Sitemaps report of Google Search Console.

How Long Does It Take for Google to Recrawl a Page?

Google crawls each site on its own timeline. How often a site gets recrawled usually depends on the content’s quality, the site’s authority, and how frequently new content is published.

It also depends on whether there are other underlying crawl issues like robots.txt blocks or broken internal links.

For example, a frequently updated site like CNN will be crawled much more often than a blog site that only creates new content every few weeks.

Here’s a helpful note from Google:

“Requesting a crawl does not guarantee that inclusion in search results will happen instantly or even at all. Our systems prioritize the fast inclusion of high quality, useful content.”

This is your friendly reminder to always create high-quality, authoritative content that has your target audience in mind.

Content Fusion, our AI-powered content optimizer, can help you with that. It ensures your content meets Google’s quality standards while aligning with audience expectations. By integrating business insights, competitive data, and AI-driven recommendations, it helps you:

✅ Gain Actionable Insights – Identify key audience demographics and content opportunities.

✅ Tailor Your Content – Understand exactly what your audience is searching for.

✅ Accelerate Content Creation – Generate outlines, briefs, blogs, FAQs, and more in seconds.

✅ Collaborate with AI – Chat with SIA, your AI assistant, to refine strategies, uncover content gaps, and optimize performance.

The stronger and more relevant your content, the more likely Google is to prioritize recrawling and indexing it. Take control of your content strategy today with Content Fusion. Sign up for a demo and get a two-week free trial!

How to Know When Googlebot Hits Your Site

Once you’ve used the URL inspection tool or submitted an updated sitemap, you can monitor when Googlebot returns to your site.

It’s not a required step, but you may breathe easier knowing that Google has picked up your fix.

There are a few ways to do this:

- URL Inspection tool

- Index status report

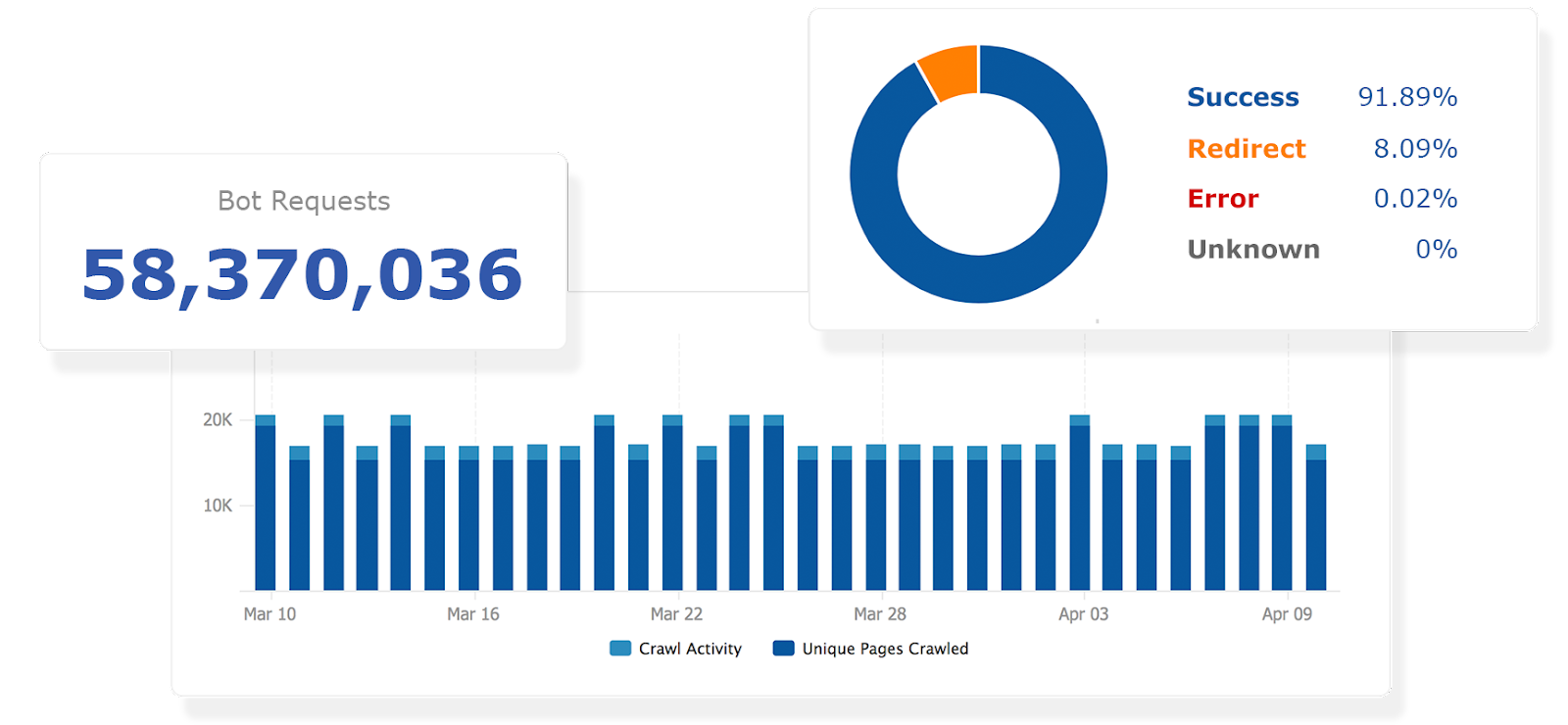

- seoClarity’s Bot Clarity

URL Inspection Tool

We’re back to the Inspection tool for this route. You can request specific information about your URLs, including details about the currently indexed version of the page, such as when Google last crawled it.

This will show you the rendered view of the page, or how Google sees the page.

Run a live URL test to see how Googlebot renders the current version of the page. Once the test is complete, click “View tested page” to see the rendered HTML and a screenshot.

Index Status Report

This report won’t show the current version of your page, but it will tell you which of your pages Google has indexed. In the current version of Search Console, this data appears in the Page indexing report.

This is crucial to know, especially if someone on your team made a technical change that caused the page to become deindexed.

Google has put together an in-depth guide to the Index Status report.

Bot Clarity

seoClarity clients have access to advanced server log-file analysis.

Bot Clarity lets you not only review bot crawl frequency (so you’ll know when Googlebot pays you a visit) but also view the most and least crawled URLs and site sections.

This is a great way to understand and optimize your crawl budget.

Plus, you can use the log files to find search engine crawl issues before your rankings are affected.
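For a rough do-it-yourself look at the same signal, the Python sketch below scans server access logs for requests whose user agent claims to be Googlebot, then reports the most recent hit and the hit count per URL. It assumes the Combined Log Format used by many Apache and Nginx setups; adjust `LOG_PATTERN` for your server. The function names are hypothetical. User-agent strings can be spoofed, so Google recommends confirming genuine Googlebot traffic with a reverse DNS lookup, which this sketch does not do.

```python
import re
from collections import Counter
from datetime import datetime

# Assumed log layout: Combined Log Format, e.g.
# 66.249.66.1 - - [10/May/2024:13:55:36 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "<user agent>"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Yield (timestamp, path, status) for each request whose user agent mentions Googlebot.

    The user agent is taken at face value; it is NOT verified via reverse DNS.
    """
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None or "Googlebot" not in match["agent"]:
            continue  # skip malformed lines and non-Googlebot traffic
        when = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        yield when, match["path"], int(match["status"])


def last_crawl_by_path(lines):
    """Return {path: most recent Googlebot request time}."""
    latest = {}
    for when, path, _status in googlebot_hits(lines):
        if path not in latest or when > latest[path]:
            latest[path] = when
    return latest


def crawl_counts(lines):
    """Return a Counter of Googlebot requests per path (most and least crawled URLs)."""
    return Counter(path for _when, path, _status in googlebot_hits(lines))


if __name__ == "__main__":
    sample_log = [
        '66.249.66.1 - - [10/May/2024:13:55:36 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" '
        '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
        '203.0.113.7 - - [10/May/2024:14:02:11 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" '
        '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    ]
    for path, when in last_crawl_by_path(sample_log).items():
        print(f"{path}: last Googlebot hit {when.isoformat()}")
```

In practice you would pass an open log file (any iterable of lines) instead of the sample list, then check whether the hit time for a URL you fixed falls after your recrawl request.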

Recommended Reading: How to Find SEO Insights From Log File Analysis

Conclusion

Unnoticed site changes can damage any SEO program, but luckily you caught them with an SEO page monitoring tool.

Now, all that’s left to do is revert the change and submit the URL to Google for a recrawl.

If you still don’t have a page change monitoring system, we’ve put together a list of some of the best: 8 Best SEO Tools to Monitor Page Changes.

<<Editor's Note: This post was originally published in September 2022 and has since been updated.>>
