seoClarity has been executing site audits for many years, and sometimes our clients tell us how frustrating it can be to come across the same common technical SEO issues over and over again …

This post outlines the most common technical SEO problems that seoClarity has encountered while doing hundreds of site audits over the years. Hopefully, our solutions help you when you come across these SEO problems on your site.

Here are the top SEO issues that we'll cover in this post:

- No HTTPS Security

- Site Isn’t Indexed Correctly

- No XML Sitemaps

- Missing or Incorrect Robots.txt

- Meta Robots NOINDEX Set

- Slow Page Speed

- Multiple Versions of the Homepage

- Incorrect Rel=Canonical

- Duplicate Content

- Missing Alt Tags

- Broken Links

- Not Enough Use of Structured Data

- Mobile Device Optimization

- Missing or Non-Optimized Meta Descriptions

- Users Sent to Pages with Wrong Language

What Is Technical SEO?

When we talk about technical SEO, we’re referring to updates to a website and/or server that you have immediate control over and which have a direct (or sometimes indirect) impact on your web pages' crawlability, indexation, and ultimately, search rankings.

This includes components like page titles, title tags, HTTP header responses, XML sitemaps, 301 redirects, and metadata.

Technical SEO does not include analytics, keyword research, backlink profile development, or social media strategies.

In our Search Experience Optimization framework, technical SEO is the first step in creating a better search experience.

Other SEO projects should come after you've guaranteed your site has proper usability. But for an enterprise site, it can be hard to stay on top of potential SEO problems.

These common technical SEO issues are often overlooked, yet are straightforward to fix and crucial to boost your search visibility and SEO success.

1. No HTTPS Security

Site security with HTTPS is more important than ever.

If your site is not secure, when you type your domain name into Google Chrome, it will display a gray background — or even worse, a red background with a “not secure” warning.

This could cause users to immediately navigate away from your site back to the SERP.

The first step for this quick fix is to check if your site is HTTPS. To do this, simply type your domain name into Google Chrome. If you see the “secure” message (pictured below), your site is secure.

How to Fix It:

- To convert your site to HTTPS, you need an SSL certificate from a Certificate Authority.

- Once you purchase and install your certificate, your site will be secure.

2. Site Isn’t Indexed Correctly

When you search for your brand name in Google, does your website show up in the search results? If the answer is no, there might be an issue with your indexation.

As far as Google is concerned, if your pages aren’t indexed, they don’t exist — and they certainly won’t be found on the search engines.

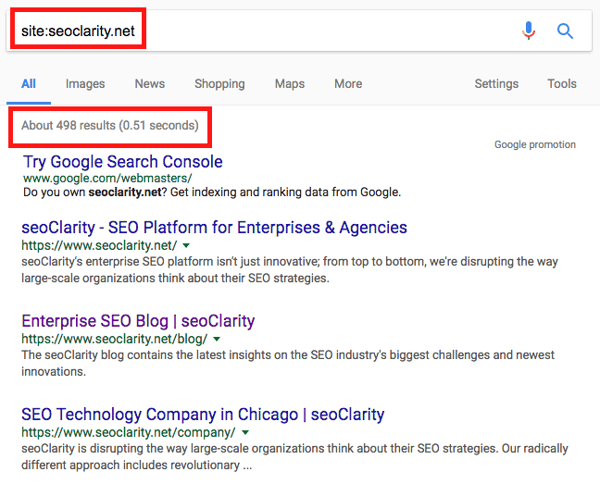

How to Check:

- Type the following into Google’s search bar: “site:yoursitename.com” and instantly view the count of indexed pages for your site.

How to Fix It:

- If your site isn’t indexed at all, you can begin by adding your URL to Google.

- If your site is indexed, but there are many MORE results than expected, look deeper for either site-hacking spam or old versions of the site that are still indexed because the appropriate redirects to your updated site are not in place.

- If your site is indexed, but you see far FEWER results than expected, perform an audit of the indexed content and compare it against which pages you want to rank. If you’re not sure why the content isn’t ranking, check Google’s Webmaster Guidelines to ensure your site content is compliant.

- If the results are different than you expected in any way, verify that your important website pages are not blocked by your robots.txt file (see #4 on this list). You should also verify you haven’t mistakenly implemented a NOINDEX meta tag (see #5 on this list).

3. No XML Sitemaps

XML sitemaps help Google search bots understand more about your site pages, so they can effectively and intelligently crawl your site.

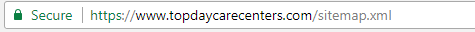

How to Check:

Type your domain name into your browser's address bar and add “/sitemap.xml” to the end, as pictured below.

This is usually where the sitemap lives. If your website has a sitemap, you will see something like this:

How to Fix It:

- If your website doesn’t have a sitemap (and you end up on a 404 page), you can create one yourself or hire a web developer to create one for you. The easiest option is to use an XML sitemap generating tool. If you have a WordPress site, the Yoast SEO plugin can automatically generate XML sitemaps for you.
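
If you decide to create the sitemap yourself, the format is simple enough to script. The following is a minimal sketch in Python, not a full generator: `build_sitemap` is a hypothetical helper name, the example.com URLs are placeholders, and it writes only the `<loc>` element for each page.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped for us
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs; replace them with the pages you want crawled.
sitemap_xml = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/about",
])
```

Saving the returned string as sitemap.xml at the root of your site should make it reachable at the “/sitemap.xml” address described above.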

4. Missing or Incorrect Robots.txt

A missing robots.txt file is a big red flag, but did you also know that an improperly configured robots.txt file can destroy your organic site traffic?

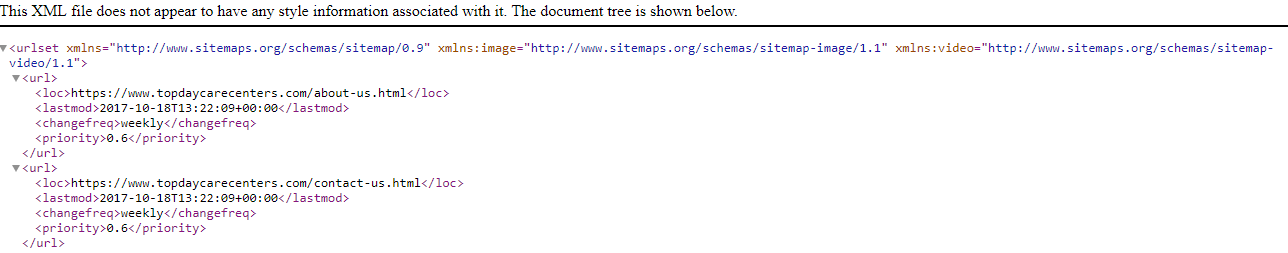

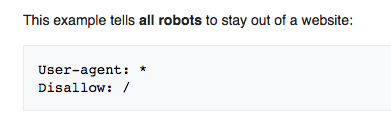

How to Check:

To determine if there are issues with the robots.txt file, type your website URL into your browser with a “/robots.txt” suffix. If you get a result that reads "User-agent: * Disallow: /" then you have an issue.

(Photo credit for robots.txt bad file.)

How to Fix It:

- If you see “Disallow: /”, immediately talk to your developer. There could be a good reason it’s set up that way, or it may be an oversight.

- If you have a complex robots.txt file, like many ecommerce sites do, you should review it line-by-line with your developer to make sure it’s correct.
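
For a line-by-line review, it can help to test a list of important paths against the file. Here is a rough sketch using Python's standard-library robots.txt parser. `blocked_paths` is a hypothetical helper name and the sample rules are made up. Note also that this parser does simple prefix matching, so it may not interpret wildcard rules the way Google does.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt: str, paths: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the paths that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(user_agent, path)]


# The problem case from the text above: everything is disallowed.
everything_blocked = blocked_paths("User-agent: *\nDisallow: /", ["/", "/products"])

# A narrower rule that only blocks the (hypothetical) cart pages.
cart_blocked = blocked_paths("User-agent: *\nDisallow: /cart/", ["/", "/cart/checkout"])
```

If a page you want to rank shows up in the returned list, that is the rule to raise with your developer.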

5. Meta Robots NOINDEX Set

When the NOINDEX tag is appropriately configured, it tells search bots to keep certain lower-priority pages out of the index. (For example, blog categories with multiple pages.)

However, when configured incorrectly, NOINDEX can immensely damage your search visibility by removing all pages with a specific configuration from Google’s index. This is a massive SEO issue.

It’s common to NOINDEX large numbers of pages while the website is in development, but once the website goes live, it’s imperative to remove the NOINDEX tag.

Do not blindly trust that it was removed, as a leftover NOINDEX tag will destroy your site's search visibility.

How to Check:

- Right click on your site’s main pages and select "View Page Source." Use the "Find" command (Ctrl + F) to search for lines in the source code that read “NOINDEX” or “NOFOLLOW” such as:

- <meta name="robots" content="NOINDEX, NOFOLLOW">

- If you don’t want to spot check, use Site Audits technology to scan your entire site.

How to Fix It:

- If you see any “NOINDEX” or “NOFOLLOW” in your source code, check with your web developer as they may have included it for specific reasons.

- If there’s no known reason, have your developer change it to read <meta name="robots" content="INDEX, FOLLOW"> or remove the tag altogether.
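
The manual source-code check above can also be scripted for a handful of pages. This is a small sketch that assumes you already have the page's HTML as a string. `RobotsMetaFinder` and `robots_directives` are hypothetical names, and it only looks at the meta robots tag, not at HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Directives are comma separated and case insensitive.
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html: str) -> set[str]:
    """Return the lowercase robots directives found in the HTML."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return finder.directives


page = '<html><head><meta name="robots" content="NOINDEX, NOFOLLOW"></head></html>'
found = robots_directives(page)
```

A result containing "noindex" on a page you want to rank is the signal to follow up with your developer.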

Recommended Reading: The Best SEO Checklist to Boost Search Visibility and Rankings

6. Slow Page Speed

If your site doesn’t load quickly (typically 3 seconds or less), your users will go elsewhere.

Page speed matters to the user experience and to Google's algorithm. In the summer of 2021, Google rolled out its page experience update (which includes Core Web Vitals metrics) and updated the Page Experience report in Search Console.

How to Check:

- Use Google PageSpeed Insights to detect specific speed problems with your site. (Be sure to check desktop as well as mobile performance.)

- If you don’t want to spot check, use seoClarity's Page Speed to pull in scores monthly or bi-weekly to monitor and identify page speed issues across your site.

How to Fix It:

- The solution to slow page load can vary from simple to complex. Common solutions to increase page speed include image optimization/compression, browser caching improvement, server response time improvement, and JavaScript minification.

- Speak with your web developer to ensure the best possible solution for your site's particular page speed issues.

7. Multiple Versions of the Homepage

Remember when you discovered “yourwebsite.com” and “www.yourwebsite.com” go to the same place? While this is convenient, it also means Google may be indexing multiple URL versions, which dilutes your site's visibility in search.

Even worse, multiple versions of a live page may confuse users and Google's indexing algorithm. As a result, your site might not get indexed properly.

How to Fix It:

- First, check if different versions of your URL successfully flow to one standard URL. This can include HTTPS and HTTP versions, as well as versions like “www.yourwebsite.com/home.html.” Check each possible combination. Another way is to run a “site:yoursitename.com” search to determine which pages are indexed and if they stem from multiple URL versions.

- If you discover multiple indexed versions, you’ll need to set up 301 redirects or have your developer set them up for you. You should also set your canonical domain in Google Search Console.
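
To make "check each possible combination" concrete, here is a sketch that lists the usual homepage variants and flags the ones that do not end up at your standard URL. The function names are hypothetical, "/index.html" is an assumed extra variant that is not in the text above, and the sketch expects you to supply the URL each variant finally lands on (for example, from your browser or a crawler).

```python
from itertools import product


def homepage_variants(domain: str) -> list[str]:
    """List common URL forms under which a homepage is often reachable."""
    bare = domain.removeprefix("www.")
    hosts = (bare, f"www.{bare}")
    paths = ("/", "/index.html", "/home.html")  # "/index.html" is an assumption
    return [
        f"{scheme}://{host}{path}"
        for scheme, host, path in product(("http", "https"), hosts, paths)
    ]


def unredirected(final_urls: dict[str, str], canonical: str) -> list[str]:
    """Given {requested URL: URL finally served}, return variants that miss canonical."""
    return [requested for requested, final in final_urls.items() if final != canonical]


variants = homepage_variants("www.yourwebsite.com")

# Pretend every variant redirects correctly except one.
observed = {variant: "https://www.yourwebsite.com/" for variant in variants}
observed["http://yourwebsite.com/home.html"] = "http://yourwebsite.com/home.html"
needs_redirect = unredirected(observed, "https://www.yourwebsite.com/")
```

Each URL in the returned list is a candidate for a 301 redirect to the standard version.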

8. Incorrect Rel=Canonical

Rel=canonical is particularly important for all sites with duplicate or very similar content (especially ecommerce sites). Dynamically rendered pages (like a category page of blog posts or products) can look duplicative to Google search bots.

The rel=canonical tag tells search engines which “original” page is of primary importance (hence: canonical) — similar to URL canonicalization.

How to Fix It:

- This one also requires you to spot check your source code. Fixes vary depending on your content structure and web platform. (Here’s Google’s Guide to Rel=Canonical.) If you need assistance, reach out to your web developer.
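
A spot check of the source code can be partly automated. The sketch below assumes you have a page's HTML as a string and know which URL the page should declare as canonical. `CanonicalFinder` and `canonical_issue` are hypothetical names, and the three checks are only the most basic ones.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and attributes.get("href"):
            self.hrefs.append(attributes["href"])


def canonical_issue(html: str, expected: str) -> Optional[str]:
    """Describe the canonical problem on a page, or return None if it looks right."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.hrefs:
        return "missing rel=canonical"
    if len(finder.hrefs) > 1:
        return "multiple rel=canonical tags"
    if finder.hrefs[0] != expected:
        return f"canonical points to {finder.hrefs[0]}"
    return None


# Hypothetical product page that is reachable with a tracking parameter.
page = '<head><link rel="canonical" href="https://www.example.com/shoes"></head>'
issue = canonical_issue(page, "https://www.example.com/shoes")
```

Here `issue` is None because the tag matches the expected URL. Any other return value names the problem to fix.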

9. Duplicate Content

With more and more brands using dynamically created websites, content management systems, and practicing global SEO, the problem of duplicate content plagues many websites.

It may “confuse” search engine crawlers and prevent the correct content from being served to your target audience.

Thin content is a matter of having too little content on a page (fewer than about 300 words). Duplicate content is a different problem, and it can occur for many reasons:

- Ecommerce site store items appear on multiple versions of the same URL.

- Printer-only web pages repeat content on the main page.

- The same content appears in multiple languages on an international site.

How to Fix It:

Each of these three issues can be resolved respectively with:

- Proper rel=canonical (as noted above).

- Proper configuration (instructions for setup also noted above).

- Correct implementation of hreflang tags.

Google’s support page offers other ideas to help limit duplicate content including using 301 redirects, top-level domains, and limiting boilerplate content.

10. Missing Alt Tags

Broken images and images missing alt tags are a missed SEO opportunity. The image alt attribute (commonly called the alt tag) helps search engines index a page by telling the bot what the image is about.

It’s a simple way to boost the SEO value of your page via the image content that enhances your site's experience.

How to Fix It:

Most SEO site audits will identify images that are broken and missing alt tags. Running regular site audits to monitor your image content as part of your SEO standard operating procedures makes it easier to manage and stay current with image alt tags across your website.
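
For a quick check of a single page between audits, something like the following sketch can list images without alt text. It assumes you have the page's HTML as a string, and `ImageAltChecker` and `images_missing_alt` are hypothetical names. It also treats an empty alt attribute as missing, which may be intentional for purely decorative images.

```python
from html.parser import HTMLParser


class ImageAltChecker(HTMLParser):
    """Record the src of every <img> whose alt text is absent or empty."""

    def __init__(self) -> None:
        super().__init__()
        self.missing_alt: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if not (attributes.get("alt") or "").strip():
            self.missing_alt.append(attributes.get("src") or "(no src)")


def images_missing_alt(html: str) -> list[str]:
    """Return the src values of images that lack alt text, in page order."""
    checker = ImageAltChecker()
    checker.feed(html)
    return checker.missing_alt


html = '<img src="/a.png" alt="Red running shoe"><img src="/b.png"><img src="/c.png" alt="">'
missing = images_missing_alt(html)
```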

11. Broken Links

Good internal and external links show both users and search crawlers that you have high quality content. Over time, content changes and once-great links break.

Broken links interrupt the searcher's journey and reflect lower quality content, a factor that can affect page ranking.

How to Fix It:

While internal links should be confirmed every time a page is removed, changed, or a redirect is implemented, the value of external links requires regular monitoring. The best and most scalable way to address broken links is to run regular site audits.

An internal link analysis will help digital marketers and SEOs find the pages where these links exist so they can fix them by replacing the broken link with the correct/new page.

Use our backlinks feature to find all external links that are broken. From there, you can reach out to the sites with broken links and provide them with the correct link or new page.
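
At a small scale, the core of a broken-link check is just asking each URL for its HTTP status. The sketch below illustrates the idea and is not how any particular audit tool works. `http_status` and `broken_links` are hypothetical names, network errors such as DNS failures are not handled, and a real audit would also need crawling, rate limiting, and retries.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for a HEAD request to url (needs network access)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx and 5xx responses arrive as exceptions


def broken_links(
    links: Iterable[str], fetch_status: Callable[[str], int] = http_status
) -> dict[str, int]:
    """Return {link: status} for every link whose status is 400 or higher."""
    broken = {}
    for link in links:
        status = fetch_status(link)
        if status >= 400:
            broken[link] = status
    return broken
```

Passing `fetch_status` as a parameter keeps the check easy to try out with canned status codes before pointing it at live pages.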

12. Not Enough Use of Structured Data

Google defines structured data as:

… a standardized format for providing information about a page and classifying the page content …

Structured data is a simple way to help Google search crawlers understand the content and data on a page. For example, if your page contains a recipe, an ingredient list would be an ideal type of content to feature in a structured data format.

Address information, like this example from Google, is another type of data perfect for a structured data format:

    <script type="application/ld+json">
    {
      "@context": "https://schema.org",
      "@type": "Organization",
      "url": "http://www.example.com",
      "name": "Unlimited Ball Bearings Corp.",
      "contactPoint": {
        "@type": "ContactPoint",
        "telephone": "+1-401-555-1212",
        "contactType": "Customer service"
      }
    }
    </script>

These structured data can then present themselves on the SERPs in the form of a rich snippet, which gives your SERP listing a visual appeal.

How to Fix It:

As you roll out new content, identify opportunities to include structured data in the page and coordinate the process between content creators and your SEO team. Better use of structured data may help improve CTR and possibly improve rank position in the SERP.

Once you implement structured data, review your GSC report regularly to make sure that Google is not reporting any issue with your structured data markup.
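
If your pages are generated from templates, the markup can be produced from data you already have instead of being typed by hand. The following sketch shows one possible approach in Python. `json_ld_script` is a hypothetical helper name, and the organization details are the sample values from the example above.

```python
import json


def json_ld_script(data: dict) -> str:
    """Serialize a schema.org dictionary into a JSON-LD <script> element."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so a stray "</script>" inside a value cannot end the element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "url": "http://www.example.com",
    "name": "Unlimited Ball Bearings Corp.",
    "contactPoint": {
        "@type": "ContactPoint",
        "telephone": "+1-401-555-1212",
        "contactType": "Customer service",
    },
}

markup = json_ld_script(organization)
```

Generating the block from one dictionary keeps the JSON syntactically valid, which removes one common source of markup errors. It does not check that the properties themselves are the right ones for the page.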

Hot Tip: Use Schema Builder to build, test, and deploy structured data with a simple point-and-click interface.

13. Mobile Device Optimization

In December 2018, Google announced that mobile-first indexing was in use for more than half of the pages appearing in search results. Google would have sent you an email when (or if) your site was transitioned.

If you’re not sure if your site has undergone the transition, you can also use Google's URL Inspection Tool.

Whether Google has transitioned you to mobile-first indexing yet or not, you need to guarantee your site is mobile friendly to ensure an exceptional mobile user experience. Anyone using responsive website design is probably in good shape.

If you run a “.m” mobile site, you need to make sure you have the right implementation on your m-dot site so you don’t lose your search visibility in a mobile-first world.

How to Fix It:

As your mobile site will be the one indexed, you’ll need to do the following for all “.m” web pages:

- Guarantee the appropriate and correct hreflang code and links.

- Update all meta data on your mobile site. Meta descriptions should be equivalent on both mobile and desktop sites.

- Add structured data to your mobile pages and make sure the URLs are updated to mobile URLs.

14. Missing or Non-Optimized Meta Descriptions

Meta descriptions are short content blurbs of up to 160 characters that describe what the web page is about. These little snippets help the search engines index your page, and a well-written meta description can stimulate audience interest in the page.

It’s a simple SEO feature, but a lot of pages miss this important content. You might not see this content on your page, but it's an important feature that helps the user know if they want to click on your result or not after they make their query.

Like your page content, meta descriptions should be optimized to match what the user will read on the page, so try to include relevant keywords in the copy.

How to Fix It:

There are a couple of ways to address this issue:

- For pages missing meta descriptions: run an SEO site audit to find all pages that are missing meta descriptions. Determine the value of the page and prioritize accordingly.

- For pages with meta descriptions: evaluate pages based on performance and value to the organization. An audit can identify any pages with meta description errors. High-value pages that are almost ranking where you want should be optimized first. Any page that undergoes an edit, update, or change should also have the meta description updated at the time of the change, too. It's important to make sure that meta descriptions are unique to a page.
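
The three checks mentioned in this section (missing, too long, not unique) are easy to express in code once you have each page's description. The sketch below assumes the descriptions have already been collected into a dictionary keyed by URL. `audit_meta_descriptions` is a hypothetical name, and the 160-character limit is the figure quoted above, not a hard rule.

```python
from collections import Counter

MAX_LENGTH = 160  # the upper bound quoted in the text above


def audit_meta_descriptions(descriptions: dict[str, str]) -> dict[str, list[str]]:
    """Map each problem URL to the issues found in its meta description."""
    counts = Counter(text.strip() for text in descriptions.values() if text.strip())
    report = {}
    for url, text in descriptions.items():
        text = text.strip()
        issues = []
        if not text:
            issues.append("missing")
        else:
            if len(text) > MAX_LENGTH:
                issues.append("too long")
            if counts[text] > 1:
                issues.append("duplicate")
        if issues:
            report[url] = issues
    return report


# Made-up pages for illustration.
pages = {
    "/": "Shop handmade leather boots with free returns.",
    "/boots": "Shop handmade leather boots with free returns.",
    "/about": "",
    "/blog": "x" * 161,
    "/contact": "Reach our support team by phone or email.",
}
report = audit_meta_descriptions(pages)
```

Pages that do not appear in the report passed all three checks. Whether the copy is compelling still needs a human read.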

15. Users Sent to Pages with Wrong Language

In 2011, Google introduced the hreflang tag for brands engaged in global SEO to improve user experience. Hreflang tags signal to Google the correct web page to serve to a user based on language or location of search. It’s also called rel="alternate" hreflang="x".

The code looks like this:

<link rel="alternate" href="http://example.com" hreflang="en-us" />

Hreflang is one of several international SEO best practices including hosting sites on local IPs and connecting with local search engines. The benefits of serving locally-customized content to users in their native language, however, really cannot be overstated.

Using hreflang tags requires a fair amount of detail work to ensure all pages have the appropriate code and links, and errors are not uncommon.

How to Fix It:

Google provides a free International Targeting Tool, and there are a variety of third-party tools that digital marketers can use as well. For instance, with our site audits, you can run an in-depth hreflang audit and verify your implementation with cross-checks of referenced URLs.

seoClarity's hreflang audit capability displays the count of hreflang tags found and lists the errors with specific tags.

Fixing hreflang errors effectively involves two steps:

- Guaranteeing the code is correct. A tool like Aleyda Solis’ hreflang Tags Generator Tool can simplify the effort.

- When updating a page or creating a redirect, update the code on all pages that refer/link to it.
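
One cross-check worth automating is the return link: if page A lists page B as an alternate, page B should list page A as well. The sketch below shows that single check only and is not a description of how any audit tool works. It assumes the hreflang annotations have already been extracted into a dictionary, and `missing_return_links` is a hypothetical name.

```python
def missing_return_links(hreflang_map: dict[str, dict[str, str]]) -> list[tuple[str, str]]:
    """Return (page, alternate) pairs where alternate does not link back to page.

    hreflang_map maps each page URL to its {hreflang value: alternate URL} annotations.
    """
    problems = []
    for page, alternates in hreflang_map.items():
        for target in alternates.values():
            if target == page:
                continue  # a self-reference needs no return link
            back_links = hreflang_map.get(target, {}).values()
            if page not in back_links:
                problems.append((page, target))
    return problems


# Made-up site where the German page forgets to point back to the English one.
site = {
    "https://example.com/": {
        "en-us": "https://example.com/",
        "de-de": "https://example.com/de/",
    },
    "https://example.com/de/": {
        "de-de": "https://example.com/de/",
    },
}
problems = missing_return_links(site)
```

This is also why a page update or redirect has to be reflected on every page that references it: changing one URL can break the return link from several alternates at once.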

Key Takeaway

Investigating the top technical issues – and their respective solutions – in this blog post is the best way to quickly improve your SERP visibility, and it can have an extremely positive impact on the searcher's overall experience of your site.

Editor's Note: This post was originally published in October 2017 and has been updated for accuracy and comprehensiveness.