You’ve optimized your content, built your topical authority, and polished your user experience. But does any of that matter if the AI search bots see a blank page?

Here is the harsh reality of modern search: AI is likely missing your content.

While Google’s bots have evolved to handle complex rendering, the new wave of AI crawlers (SearchGPT, Perplexity, and others) operates differently. These crawlers are hitting a technical wall that most marketing teams haven't accounted for: Client-Side Rendering (CSR).

If your site relies on JavaScript to load content, you are facing a massive visibility gap. In this webinar, we break down why this happens, why it’s straining your infrastructure, and how to fix it without rebuilding your tech stack.

Key Takeaways

- The Problem: Most AI bots do not execute JavaScript, so content that loads client-side is inaccessible to them. That translates directly to visibility gaps and a loss of share of voice.

- The Complication: Bot traffic is increasing fast, putting a real strain on infrastructure. This is no longer just an SEO problem—it is an engineering and performance problem.

- The Fix: Bot Optimizer delivers full content to crawlers with no front-end changes needed and no heavy engineering resources required.

If your site is rendered client-side with JavaScript, much of your content is currently invisible to AI search. Bot Optimizer is the fastest way to turn the lights back on.

The Technical Barrier: Why Client-Side JavaScript Blocks AI Crawlers

Let’s step back. In the early days, websites were generated server-side. Bots loved this: everything was visible in the source code immediately.

But the web evolved. To deliver slick, app-like experiences (filters, micro-interactions, dynamic loading), we shifted to sophisticated Client-Side Rendering using frameworks like React, Angular, and Vue.

Here is the problem: Google eventually learned to render JavaScript (mostly). AI bots have not.

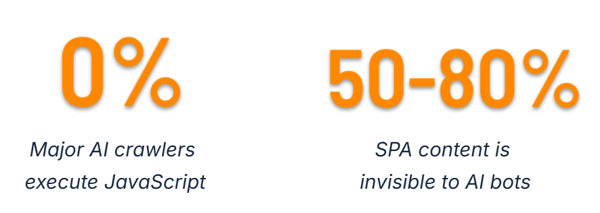

The Reality Check: No major AI crawler today is efficiently executing JavaScript at scale.

When an AI bot hits a Single Page Application (SPA), it doesn't run the scripts that assemble the page in a browser. It grabs the initial HTML response. For a CSR site, that response is often just a shell: a header, a footer, and empty <div> tags where your content should be.
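To make the "shell" concrete, here is a minimal sketch of what a crawler that does not execute JavaScript can read from an initial HTML response. It uses only Python's standard `html.parser`. The two sample pages, the `VisibleTextParser` class, and the `visible_text` helper are illustrative assumptions, not any real crawler's code.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects the text present in raw HTML, without running any scripts."""

    # Content inside these tags is never shown as page text.
    SKIPPED_TAGS = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    """Return the text a non-rendering crawler could extract from `html`."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical initial response from a client-side rendered page.
CSR_SHELL = (
    '<html><head><title>Shop</title><script src="/bundle.js"></script></head>'
    '<body><header>Acme Store</header><div id="root"></div>'
    "<footer>Contact</footer></body></html>"
)

# The same hypothetical page with its content already in the HTML.
SERVER_RENDERED = (
    "<html><head><title>Shop</title></head>"
    '<body><header>Acme Store</header><div id="root">'
    "<h1>Blue Widget</h1><p>Price: $19</p></div>"
    "<footer>Contact</footer></body></html>"
)

print(visible_text(CSR_SHELL))        # Shop Acme Store Contact
print(visible_text(SERVER_RENDERED))  # Shop Acme Store Blue Widget Price: $19 Contact
```

The product name and price only exist in the second response. In the first, they would appear only after `/bundle.js` runs, which is the step a non-rendering crawler never performs.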

User View vs. Bot View

The difference is night and day.

- What Users See: Rich, interactive content, dynamic updates, beautiful UI / UX, and full site functionality.

- What AI Bots See: Empty pages, partial content, and loading spinners.

If the bot can't see it, it can't understand it. If it can't understand it, you are excluded from the AI-generated answer.

The Cost of Invisibility: 50–80% Data Loss

This isn't a minor crawling error. It is a fundamental retrieval failure.

Our internal data at seoClarity shows that on modern single-page apps relying on client-side rendering, 50% to 80% of the meaningful content fails to appear to AI bots.
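One rough way to estimate a figure like this for your own pages is to compare the text in the raw HTML with the text in the fully rendered page. The sketch below is an assumption about how such a comparison could be done, not the methodology behind the seoClarity numbers, and the sample strings are made up for illustration.

```python
import re


def word_set(text: str) -> set:
    """Lowercased distinct words and numbers found in `text`."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def missing_share(raw_text: str, rendered_text: str) -> float:
    """Fraction of distinct rendered words that are absent from the raw HTML text."""
    rendered = word_set(rendered_text)
    if not rendered:
        return 0.0
    return len(rendered - word_set(raw_text)) / len(rendered)


# Hypothetical text extracted from the initial HTML response (what the bot gets).
raw = "Acme Store Contact"
# Hypothetical text extracted after JavaScript has run (what the user gets).
rendered = "Acme Store Blue Widget Price 19 In stock today Contact"

print(f"{missing_share(raw, rendered):.0%}")  # 70%
```

Here 7 of the 10 distinct words on the rendered page never reach the bot. A word-level comparison is crude, but it is enough to flag templates where most of the content depends on client-side rendering.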

The "Invisible" Tax

When your content doesn't render, you trigger a domino effect of SEO failure:

- Zero Entities: Bots cannot extract products, prices, or context.

- No Indexing: Without text, there is nothing to store in the index an AI search engine retrieves its answers from.

- Loss of Citations: You lose brand mentions and citations in AI answers (the new ranking currency).

The Infrastructure Strain

It gets worse. AI crawling behavior is currently volatile. Unlike Googlebot’s predictable patterns, new AI agents often crawl with unpredictable intensity.

When these bots hit your site, they trigger complex scripts that try (and fail) to load, causing massive traffic spikes. You are paying for server load to serve content that the bot isn't even seeing. It is an engineering headache and an SEO nightmare.

Why Engineering Can't "Just Fix It"

If this is such a critical visibility crisis, why aren't tech teams rushing to deploy a fix?

The reality is that even when teams know about this problem, they often cannot fix it easily. Rewriting a massive React application to be fully server-side rendered is a monumental task.

- User Experience (UX): Modern websites rely on frameworks like React, Angular, and Vue to deliver micro-interactions and dynamic content. You cannot simply replace these without degrading the experience.

- Resource Intensity: Asking for dev resources to refactor a site for Server-Side Rendering (SSR) is a massive request. It involves substantial cost and effort.

- Timing: Websites are living organisms that take years to build. Aligning multiple teams to rebuild the architecture isn't possible in the short time frame needed to capture AI search visibility now.

The business needs a solution that bridges the gap between the Modern Web (JS frameworks) and the AI Reality (HTML-only requirements).

The Solution: Bot Optimizer

Businesses cannot wait years to fix this. They need AI search visibility today.

This is exactly why we built Bot Optimizer.

We know modern sites rely on JavaScript frameworks that are fantastic for users. But since crawlers don't experience the site like users do, Bot Optimizer acts as a bridge.

How Bot Optimizer Works

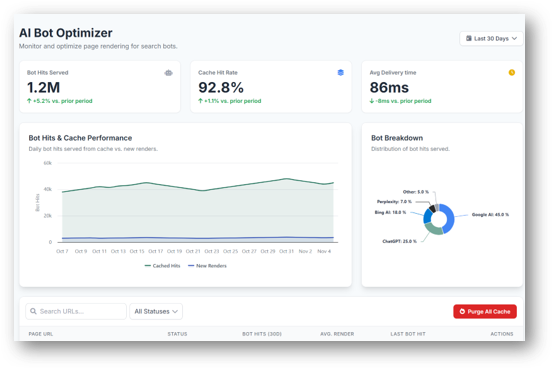

Bot Optimizer sits between your site and the crawler. It is a performance optimization solution that delivers a fully rendered HTML version of the page instantly, without requiring any changes to your front-end code.

- Full Rendering: It renders the page in full and caches the HTML.

- Selective Serving: It identifies approved bots and serves them the clean HTML version.

- Configuration: You can pick and choose which pages to include/exclude and define cache refresh rates based on how often your content changes.
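The three steps above follow a general pattern often called dynamic rendering. The sketch below shows that pattern in miniature; it is not Bot Optimizer's implementation. The `render` callable, the `SnapshotCache` class, the bot token list, and the one-hour default refresh rate are all assumptions made for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Tuple

# Illustrative allow-list of crawler user-agent tokens (lowercased).
APPROVED_BOT_TOKENS = ("gptbot", "oai-searchbot", "perplexitybot", "claudebot")


def is_approved_bot(user_agent: str) -> bool:
    """Selective serving: match the request's User-Agent against the allow-list."""
    ua = user_agent.lower()
    return any(token in ua for token in APPROVED_BOT_TOKENS)


@dataclass
class SnapshotCache:
    # Hypothetical renderer: takes a URL path, returns fully rendered HTML.
    render: Callable[[str], str]
    # Configuration: cache refresh rate and paths to leave out.
    ttl_seconds: float = 3600.0
    excluded_prefixes: Tuple[str, ...] = ()
    clock: Callable[[], float] = time.monotonic
    _store: Dict[str, Tuple[float, str]] = field(default_factory=dict)

    def get(self, path: str) -> Optional[str]:
        """Return a fresh rendered snapshot, or None if the path is excluded."""
        if path.startswith(self.excluded_prefixes):
            return None
        now = self.clock()
        cached = self._store.get(path)
        if cached and now - cached[0] < self.ttl_seconds:
            return cached[1]
        html = self.render(path)  # full rendering
        self._store[path] = (now, html)
        return html


def choose_response(
    path: str,
    user_agent: str,
    cache: SnapshotCache,
    serve_app_shell: Callable[[str], str],
) -> str:
    """Approved bots get the cached snapshot; everyone else gets the normal app."""
    if is_approved_bot(user_agent):
        snapshot = cache.get(path)
        if snapshot is not None:
            return snapshot
    return serve_app_shell(path)
```

User-Agent strings can be spoofed, so a production setup would typically also verify that a request really comes from the crawler it claims to be. That check is left out of the sketch.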

Automated Validation

Crucially, Bot Optimizer includes automated validation. If a page isn't fully rendered, meaning key components like the Title, H1, or body content didn't load, we won't cache it.

This gives SEO and Tech teams confidence that bots will never receive incomplete content. You get a clean, compliant result with minimal dependency on internal dev teams.
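A validation gate of this kind can be pictured as a check that runs before a snapshot is cached. The following is a simplified sketch under stated assumptions: the `is_cacheable` function and the `min_body_words` threshold are hypothetical, and the real product's checks are not documented here.

```python
from html.parser import HTMLParser


class KeyElementParser(HTMLParser):
    """Records title text, H1 text, and a word count for the body."""

    TRACKED = {"title", "h1", "body", "script", "style"}

    def __init__(self):
        super().__init__()
        self.title = ""
        self.h1 = ""
        self.body_words = 0
        self._open = []  # tracked tags that are currently open

    def handle_starttag(self, tag, attrs):
        if tag in self.TRACKED:
            self._open.append(tag)

    def handle_endtag(self, tag):
        if tag in self._open:
            # Close the most recently opened matching tag.
            index = len(self._open) - 1 - self._open[::-1].index(tag)
            del self._open[index]

    def handle_data(self, data):
        if "script" in self._open or "style" in self._open:
            return
        if "title" in self._open:
            self.title += data
        if "h1" in self._open:
            self.h1 += data
        if "body" in self._open:
            self.body_words += len(data.split())


def is_cacheable(html: str, min_body_words: int = 50) -> bool:
    """True only if the title, an H1, and enough body text are all present."""
    parser = KeyElementParser()
    parser.feed(html)
    parser.close()
    return (
        bool(parser.title.strip())
        and bool(parser.h1.strip())
        and parser.body_words >= min_body_words
    )
```

A snapshot that fails the check would simply not be stored, so an approved bot never receives a half-rendered page from the cache.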

Conclusion

The shift to AI search is not just a content challenge; it is a technical one.

If you are building a modern website, you are likely using JavaScript. If that JavaScript renders your content client-side, you are hiding from the very engines trying to rank you.

You don't need to rebuild your website to fix this. You just need to translate your experience into a language the bots understand. Bot Optimizer is that translator.

If you aren't sure whether your site renders all of its content for AI engines, we can check for you.

FAQs

Why can Google see my JavaScript content, but AI bots can’t?

Googlebot can render many JavaScript-heavy pages, which is why modern sites can still perform in traditional search even when they’re heavily CSR. Many AI crawlers, however, don’t render JavaScript, so they can’t load content that only appears after scripts run.

How much content can AI bots miss on JavaScript-heavy sites?

On modern single-page applications (SPAs), it’s common for 50–80% of the content to fail to appear to AI search bots when that content depends on client-side rendering.

What happens when AI bots can’t see my content?

If an AI crawler can’t see your content, it can’t reliably read it, extract meaning, or understand context. That means your content is less likely to be used in AI-generated answers and you have fewer chances of being mentioned or cited.

Why aren’t engineering teams fixing this visibility issue already?

Because it can be expensive and slow to shift an established site from CSR to full SSR. Teams often need modern UX (React + dynamic interactions), and a migration can involve multiple teams and years of accumulated architecture decisions. Marketing teams can’t wait months to regain visibility.

Will AI crawlers eventually be able to render JavaScript?

Maybe, but you shouldn’t plan your visibility strategy around it. Even traditional search engines have struggled to render JavaScript reliably at scale for years. Bing’s own guidance for JavaScript-heavy websites has been to use dynamic rendering to ensure content can be crawled and indexed consistently.

Bottom line: AI crawlers may improve over time, but today many still don’t execute JavaScript, so you need a solution that makes your core content accessible now.

Even when a crawler can render JavaScript, rendering is often slower, more resource-intensive, and less predictable than receiving complete HTML.
